So during the downtime and my free time, I decided to scan my dream journal. I don't normally share anything from this book with anyone. The few people who have seen it consider it my best work, but I'm absolutely embarrassed by it. If I shared too many pages, I could dox myself, since they're drawn in my style and TinEye likes to snitch on me. Anyway.

TMI. I'm a chronic insomniac, I don't sleep. I rarely dream. So, if I do dream, I quickly grab my sketch book and draw it.

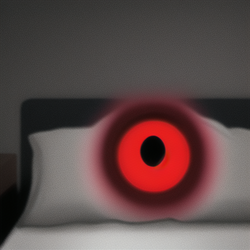

A few years ago, I had this horrible recurring nightmare about something lingering at the edge of the bed, and I drew it to the best of my ability. My wife thought I was crazy. But whenever I have these horrific dream spells, it's like I'm the only person in the bed. I show this drawing to people and they look at me like I'm fucking crazy.

Yesterday, I tossed this image into NMKD and described what it was.

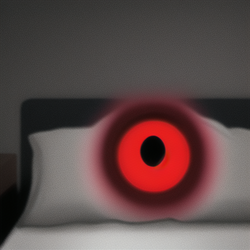

NOW LET'S SEE WHO THIS SLEEP PARALYSIS DEMON REALLY IS

IT WAS MY WIFE'S FUCKING CAT ALL ALONG, IT FUCKING GENERATED THE FUCKING CAT.