Sorry if this isn't in the right place in the forum. I wasn't exactly sure where to post this, but a couple people expressed interest in learning more about this after I made a post about it in the Roblox community thread. @Shooter of Cum suggested I post a thread about it here.

I run a Discord bot that does a lot of moderation-related stuff. I'm not going to name it here both because this isn't some self promotional bullshit, and also because I fear the Discord turbojannies would ban my bot if they found out I was posting on the transphobic neo-Nazi alt-right cyber stalker global headquarters. I do make some money off of it so it's kinda in my best interest not to fuck it up, but still I feel this should be shared.

The elevator pitch for my bot is basically this: I apply AI scoring mechanisms to text and images to catch things that exact-match filtering wouldn't. For example, in the sentence "I want to f*ck a ch*ld" there's not a single word you could reasonably blacklist. Even if you tried to blacklist something like "fuck", writing "f*ck" gets around that. The AI can understand the context of the sentence as a whole and isn't fooled by little tricks like replacing a letter with a different character, or slightly misspelling a word on purpose.
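To make the exact-match problem concrete, here's a minimal sketch of a naive word blacklist. The `BLACKLIST` set and the `naive_filter` function are hypothetical names made up for this illustration; this is not the bot's actual code.

```python
# Hypothetical one-word blacklist, for illustration only.
BLACKLIST = {"fuck"}


def naive_filter(message: str) -> bool:
    """Return True if any word in the message exactly matches the blacklist."""
    words = message.lower().split()
    # Strip trailing/leading punctuation so "fuck!" still matches.
    return any(word.strip(".,!?") in BLACKLIST for word in words)


caught = naive_filter("what the fuck")   # exact match, gets flagged
evaded = naive_filter("what the f*ck")   # one swapped character, slips through
print(caught, evaded)
```

You could keep adding variants to the blacklist, but every new spelling needs its own entry, which is why scoring the sentence as a whole scales better.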

I collect a shitload of data about users for this, but I don't keep it for terribly long. The Discord TOS says I can only keep it for up to a month since my bot is in >100 servers (right now, it's at a few thousand servers). In the last month, as of the time of this writing, I've collected about 2.2 million messages. I don't follow the Discord TOS because I give a shit about their rules; I mostly do it because keeping tons of old data wastes storage space and slows down database queries.

It's worth noting here that my bot isn't in any servers whose sole purpose is sexual content, like the kind of servers where people trade porn. These are all relatively normal communities, at least for Discord, and most of them are tech-related.

I'm going to try to keep assumptions to a minimum and mark things that I'm assuming as such. I prefer to keep this focused on the data and what we can learn from this data in aggregate.

0. What the hell is a network graph, anyway?

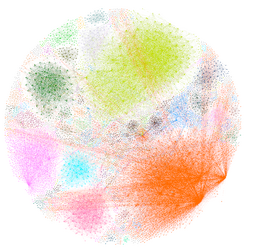

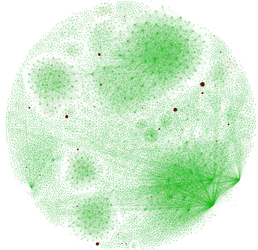

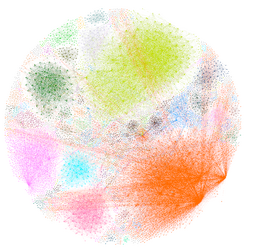

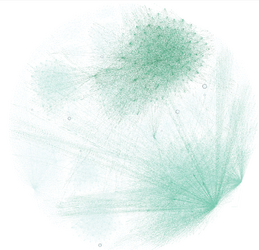

Most of the data I'll be showing is in the form of network graphs. Here's what the network graph looks like, organized nicely but not yet colored in.

Each node is one person, and a line between two nodes indicates interactions between users. Darker lines indicate stronger connections between users. An interaction, for the purposes of this data, is one of two things: either A) a user replies directly to another user's message, or B) two users have consecutive messages in the same channel.
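The two interaction rules can be sketched in a few lines of Python. This is a minimal sketch, not the bot's actual code: the `Message` fields and the `build_edges` function are hypothetical, messages are assumed to arrive in chronological order, and a message that both replies to and directly follows the same user is counted once (that last choice is an assumption, the post doesn't say how that case is handled).

```python
from collections import Counter
from typing import NamedTuple, Optional


class Message(NamedTuple):
    msg_id: int
    author: str
    channel: str
    reply_to: Optional[int] = None  # msg_id being replied to, if any


def build_edges(messages: list[Message]) -> Counter:
    """Count interactions per pair of users (edge weight = interaction count)."""
    by_id = {m.msg_id: m for m in messages}
    last_author: dict[str, str] = {}  # channel -> author of the previous message
    edges: Counter = Counter()
    for m in messages:
        partners = set()
        # Rule A: direct reply to another user's message.
        if m.reply_to in by_id:
            partners.add(by_id[m.reply_to].author)
        # Rule B: consecutive messages in the same channel.
        prev = last_author.get(m.channel)
        if prev is not None:
            partners.add(prev)
        partners.discard(m.author)  # talking to yourself is not an interaction
        for p in partners:
            edges[frozenset((m.author, p))] += 1
        last_author[m.channel] = m.author
    return edges


messages = [
    Message(1, "alice", "general"),
    Message(2, "bob", "general"),                # follows alice
    Message(3, "carol", "general", reply_to=1),  # replies to alice, follows bob
    Message(4, "carol", "general"),              # follows herself, ignored
]
edges = build_edges(messages)
for pair, weight in edges.items():
    print(sorted(pair), weight)
```

The resulting weights are what a graph tool would draw as lighter or darker lines.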

Please note that, for these visualizations, I've removed some of the very irrelevant nodes from the graph. By that I mean people who, for example, send one or two messages, decide they're not interested in Discord/the server I can see them in, and then stop interacting. This makes the graph a lot easier to interpret.

Here's the same network graph again, this time colored with modularity community detection. This automatically sorts the users into color-coded groups based on shared interactivity. With this data, we're mostly talking about the difference between servers. However, you can see that communities cross-pollinate with one another. When this happens, users are colored by whatever community they more strongly connect with.
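Modularity community detection looks for the grouping of users that maximizes a score, usually written Q, which rewards edges inside a group and penalizes groups that are only as connected as chance would predict. The sketch below only computes Q for a given grouping; it doesn't do the search itself, and it isn't necessarily what the tool behind these graphs does internally. The `modularity` function and the toy graph are made up for illustration.

```python
from collections import defaultdict


def modularity(edges: dict, community: dict) -> float:
    """Q = sum over communities of (internal_weight / m) - (degree_sum / 2m)^2."""
    m = sum(edges.values())        # total edge weight in the graph
    internal = defaultdict(float)  # edge weight fully inside each community
    degree = defaultdict(float)    # summed weighted degree of each community
    for (u, v), w in edges.items():
        degree[community[u]] += w
        degree[community[v]] += w
        if community[u] == community[v]:
            internal[community[u]] += w
    return sum(internal[c] / m - (degree[c] / (2 * m)) ** 2 for c in degree)


# Toy graph: two triangles (think: two servers) joined by a single edge c-d.
edges = {
    ("a", "b"): 1, ("b", "c"): 1, ("a", "c"): 1,
    ("d", "e"): 1, ("e", "f"): 1, ("d", "f"): 1,
    ("c", "d"): 1,
}
split = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1, "f": 1}
lumped = {n: 0 for n in "abcdef"}

q_split = modularity(edges, split)
q_lumped = modularity(edges, lumped)
print(q_split, q_lumped)
```

Splitting the toy graph along the bridge scores higher than lumping everyone together, which is the same reason separate servers show up as separate colors even when a few users post in both.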

You can see here how the couple of blue clusters are primarily branching off from the orange cluster, and how the orange and pink clusters share a lot of common members. This sort of represents how the bot spreads too. "Hey, another server I'm on has this cool bot. We should add it here, I bet it would be useful for us."

1. Tracking degenerate behavior

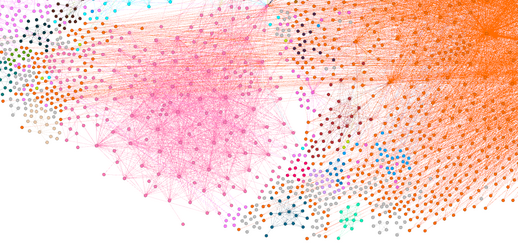

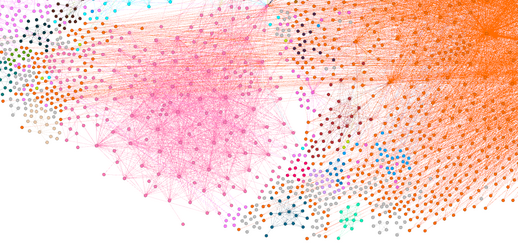

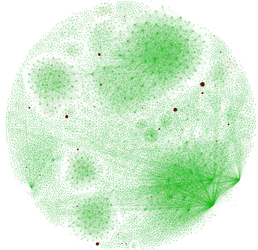

In this view of the network, I've recolored and resized users' nodes based on how highly they score on average in the sexual/minors category of moderation. There are a couple interesting observations here.

Users who tend to talk sexually about minors a lot are on the outskirts of these communities. There are some slightly red dots towards the centers of the big clusters, but generally the pedophiles are on the fringes of the communities that they interact with. This isn't exactly surprising, but it's interesting to see the data confirm this. To me, this is simultaneously both encouraging and discouraging; on the one hand, people who talk really openly about pedophile shit are generally ostracized. On the other hand though, there are a lot of people who are apparently very interested in child sexuality interacting at the cores of a lot of these communities, and they seem to be tolerated by other members of their community as long as it isn't all they talk about.

Most users, as a general rule, are strong members of one or two communities. You'll find that this is true across most interactions online. If you post a lot on Kiwi Farms and the couple of orbiting Telegram groups, you're limited by time and attention and you won't post on other places like Reddit and Twitter quite as much, as an example. This brings me to an assumption, but one that I'll support with some more data in a second. The really strongly pedophilic nodes in the networks are, as best I can guess, pedophiles who are on a fishing expedition. They're outsiders that come into other communities, and though my bot can't see whatever community they more strongly gravitate towards, the assumption that there is a stronger community they're a part of seems obvious to me.

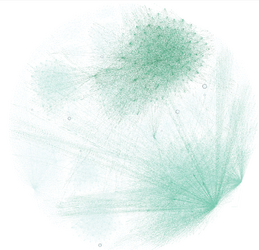

This assumption is backed up by another view of the network. In this instance, I've kept the sizes the same, but now the coloration is based on eigenvector centrality. Essentially, in this view, darker nodes are users who are well connected to other well-connected users (the core of a community), and lighter nodes tend to keep to themselves or a small group of other users. The key observation here is that the strongly pedophilic nodes aren't core members of any of the communities they inhabit, at least the communities I can see.
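For anyone unfamiliar with eigenvector centrality: a node scores highly when its neighbors score highly, so a fringe user with one link into a cluster scores low even if the person they talk to is central. Here's a minimal sketch using power iteration on a toy graph. The `eigenvector_centrality` function and the graph are hypothetical, and graph tools may normalize the scores differently.

```python
import math


def eigenvector_centrality(adj: dict, iterations: int = 200, tol: float = 1e-10) -> dict:
    """Power iteration on a weighted, undirected adjacency dict."""
    nodes = list(adj)
    x = {n: 1.0 for n in nodes}
    for _ in range(iterations):
        # Iterate with x + A x rather than A x: same leading eigenvector,
        # but it avoids oscillation on bipartite-like graphs.
        new = {n: x[n] + sum(w * x[nbr] for nbr, w in adj[n].items()) for n in nodes}
        norm = math.sqrt(sum(v * v for v in new.values()))
        new = {n: v / norm for n, v in new.items()}
        if max(abs(new[n] - x[n]) for n in nodes) < tol:
            return new
        x = new
    return x


# Toy graph: a, b, c form a tight core; d is a fringe node linked only to a.
adj = {
    "a": {"b": 1, "c": 1, "d": 1},
    "b": {"a": 1, "c": 1},
    "c": {"a": 1, "b": 1},
    "d": {"a": 1},
}
scores = eigenvector_centrality(adj)
for node, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(node, round(score, 3))
```

In this toy graph the fringe node `d` ends up with the lowest score, which is the pattern described above for the strongly red nodes.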

2. Types of pedophiles

I think it's worth stopping to define the terminology here a bit. When I call the nodes with strong sexual/minors scores pedophiles, I think I should emphasize that these people are very openly talking about children sexually. I view these people as especially dangerous to children they may come into contact with when compared to someone like, say, Lionmaker. I absolutely do not want to minimize what Lionmaker did, but I think that what he did is a lot less outright malicious than the type of content we're talking about here. Lionmaker was a lonely retard who made a huge mistake, one that he should absolutely be punished for both legally and socially, but this is a very different type of person than someone who goes into random Discord servers talking about wanting to have sex with kids.

While we're at it, here's an example of the types of content that scored highly lately:

There's a ridiculous amount of these. I could post pages and pages and pages, but I won't belabor the point. What I mean to stress here is that, when I show those big red nodes above, these are people who are unrepentant child predators, not people who are lonely retards who made a couple bad decisions. When you look at these high scores, that's the kind of stuff you might have to say to end up as a red dot on the network graph.

3. What's being done about this?

Honestly? As far as I can see, nothing. There've been a couple people I've come across in the database that I felt so disgusted by that I actually took the time out to make a report on the FBI's tip website. You'll probably be unsurprised to hear that nothing ever came of these tips. I've reported them to Discord and I never got a response from them either. Maybe they banned the users? Probably not. I view this as an issue on multiple fronts.

At the highest level, law enforcement isn't especially interested in these cases. They're messy, time-consuming, often involve distant jurisdictions or are international, have victims that may not want to cooperate, and offer no material return the way tax fraud or drug cases do. If they do catch a pedophile, it's unlikely that there's a way to connect this to other cases and make solving them easier, in the way that flipping a low level drug dealer can lead to higher level drug dealers. Thus, not many resources are applied to rounding up people like this and giving them, I don't know, probably probation or something.

Discord has an incentive to ban these people only when their actions are so egregious that they can't be ignored, and the people I've shown here are vile no doubt, but they don't really rise to the level where someone would write a big news story about them as individuals. If they say "our platform is full of pedophiles, but we're finally taking care of it now" that will just make people who are unfamiliar stop and say "wait, what? Discord is full of pedophiles? Well, I won't be installing that app." Other than that though, there's no financial motivation to purge them, and in fact it'd surely hurt Discord's bottom line to do so!

At the local level, server moderators are either unable to really stop the problem even if they want to, or they're to some degree complicit. There's always another server with lax moderation or complicit mods if you get banned from one place where kids hang out.

4. How much would it cost to implement a solution like mine across all of Discord?

Well, the OpenAI moderation API is free, but surely that wouldn't be the case if you were running it for all of Discord. OpenAI's most comparable paid product is their text embeddings API, which costs $0.0001 per 1k tokens. I won't get into the details of what tokenization means in this context, but at roughly 4 characters per token for English text, you get about 4000 characters of text embedded for $0.0001.

Statista shows that as of 2022, Discord processes about 4 billion messages per day. In my own database, the average message length is about 43 characters per message, so I estimate that Discord processes about 172 billion characters of message text per day. If we do the math, that works out to about $4300 per day in embeddings costs, or $1.57 million per year.
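The arithmetic is easy to check. The sketch below just reproduces the estimate from the numbers quoted above; the constant names are made up, and the 4000-characters-per-1k-tokens figure is the rough approximation already used in this post, not an exact conversion.

```python
MESSAGES_PER_DAY = 4_000_000_000  # Statista figure for 2022
AVG_CHARS_PER_MESSAGE = 43        # average from the bot's own database
CHARS_PER_1K_TOKENS = 4000        # rough approximation, ~4 characters per token
PRICE_PER_1K_TOKENS = 0.0001      # USD, embeddings price quoted above

chars_per_day = MESSAGES_PER_DAY * AVG_CHARS_PER_MESSAGE
cost_per_day = chars_per_day / CHARS_PER_1K_TOKENS * PRICE_PER_1K_TOKENS
cost_per_year = cost_per_day * 365

print(f"{chars_per_day:,} characters per day")
print(f"${cost_per_day:,.0f} per day")
print(f"${cost_per_year:,.0f} per year")
```

That gives 172 billion characters, about $4,300 per day, and about $1.57 million per year, matching the figures above. Swap in a different price or message length to see how sensitive the estimate is.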

I know of cheaper ways to do this, but I'll emphasize here that you can set up the OpenAI moderation API in a couple of minutes with fewer than 5 lines of Python. It would be extremely easy to do it this way, and $1.57 million isn't a crazy price for a service as huge as Discord. Is it worth the money? Well, I'm not a soulless reptilian wearing a human skin suit, so I'd say yeah, definitely. But I guess that's not my choice to make.

5. Closing thoughts

Maybe I'm naive. I always knew that there were pedophiles on the internet because, well, I'm not a complete retard. But before I started this Discord bot project I had no idea the extent to which this is an issue. I'm just one guy running this as a personal project to make a little bit of extra cash and I've already found so many; imagine all the ones I don't see. And it seems to me that, for as bad as this problem is, it'll probably get much worse before it gets any better. Nobody seems very interested in doing anything about these people and I don't see any reason why that'd change anytime soon.

Modern children are exposed to dangers that weren't around when I was a kid. A lot of times I see people express the sentiment online that problems like this aren't really new, that these problems have always existed but now they're more visible. In certain areas of life I think that's true, but I think that the internet opens up avenues of abuse that didn't exist when I was growing up, and I think that at least some of the people taking advantage of the ease of access that they have to unattended children wouldn't have bothered if they had to like, go creep around at a skate park to talk to young girls or something.

I don't really know what the solution to this is. I want to believe that we can be free online, and to me, freedom includes not being spied on by random people (like myself lol) or companies, even if that spying could, in theory anyway, make the world a better place. I often feel that the trade-offs we've made so far haven't been worth it. Say what you will about the Chinese government, but at least they use their spying tools to enforce their laws, even if I'd hate to be under a lot of their laws myself. I get spied on by Google and Facebook and Amazon and Cloudflare and the NSA and the FBI and the CIA and so on and so forth, but to what benefit? They don't round these people up in numbers that I find even close to acceptable. Is all that spying really worth it if all they're going to use my data for is to figure out the best way to trick me into buying a new pair of sneakers?

Happy to answer any questions or show more of the data if anyone's interested.
