Since I'm "temporarily inconvenienced" with regard to the correct reasoning, I'll use the opportunity to demonstrate how easy it is to construct sophistry in favor of almost anything.

The position to defend: perfect "instrumental" rationality is required, and if you commit suicide you won't get to heaven.

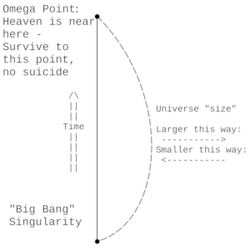

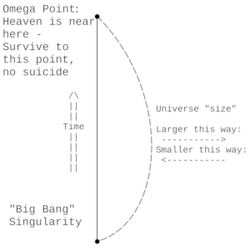

First, the problem of the heat death of the universe: heaven can't really work with that going on. Since the universe is only around 13.8 billion "years old", there should in theory be plenty of time to do something about it.

According to Robin Hanson's "grabby aliens" theory, it's almost certain that the universe is currently about half full of aliens. The universe is infinite, or at least very big, but the aliens originate fairly uniformly throughout its volume, so "half full" still makes sense.

Here's a simulation of a very simplified spherical section of the universe:

The entire universe will look more or less like this; only a small sphere is shown. By around 20 billion years there's almost no unused space left in the universe. The aliens will expand at a steady 25% of the speed of light, and that figure already includes all probe production times and every other delay. They will use baryon annihilation to fuel much of their expansion and operations, halting and then reversing the expansion of the universe.
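The simulation itself isn't reproduced here. As a rough stand-in, here is a minimal Python sketch of the kind of toy model involved: civilizations appear at random places and times in a box, each claims a sphere growing at a constant speed, and a Monte Carlo estimate tracks what fraction of the box is claimed. This is an illustration under loudly stated assumptions, not Hanson's published model, which is more involved. The assumptions: a static periodic box with no cosmological expansion, origin times drawn uniformly, a candidate discarded if it would arise inside already-claimed territory, and the hypothetical helper names and parameters (`make_civs`, `filled_fraction`, box size, candidate count) are all made up for illustration.

```python
import math
import random


def periodic_distance(a, b, size):
    """Euclidean distance in a cube with wrap-around edges (avoids boundary effects)."""
    total = 0.0
    for x, y in zip(a, b):
        d = abs(x - y)
        d = min(d, size - d)
        total += d * d
    return math.sqrt(total)


def claimed(point, t, civs, speed, size):
    """True if any civilization's expanding sphere covers `point` at time t."""
    for origin, t0 in civs:
        if t >= t0 and periodic_distance(point, origin, size) <= speed * (t - t0):
            return True
    return False


def make_civs(n_candidates, t_max, speed, size, rng):
    """Sample candidate origins uniformly in space and in time on [0, t_max].

    Assumption: a candidate that would arise inside territory already claimed
    is discarded, a crude stand-in for "preemption" by earlier expanders."""
    candidates = [
        (tuple(rng.uniform(0, size) for _ in range(3)), rng.uniform(0, t_max))
        for _ in range(n_candidates)
    ]
    civs = []
    # Process candidates in order of origin time so preemption is causal.
    for origin, t0 in sorted(candidates, key=lambda c: c[1]):
        if not claimed(origin, t0, civs, speed, size):
            civs.append((origin, t0))
    return civs


def filled_fraction(t, civs, speed, size, n_samples, rng):
    """Monte Carlo estimate of the fraction of the box claimed at time t."""
    hits = sum(
        claimed(tuple(rng.uniform(0, size) for _ in range(3)), t, civs, speed, size)
        for _ in range(n_samples)
    )
    return hits / n_samples


if __name__ == "__main__":
    rng = random.Random(42)
    SPEED = 0.25  # fraction of c; with distances in Gly and times in Gyr, c = 1
    SIZE = 5.0    # hypothetical box edge length in Gly
    civs = make_civs(n_candidates=40, t_max=20.0, speed=SPEED, size=SIZE, rng=rng)
    for t in (5.0, 10.0, 13.8, 20.0):
        frac = filled_fraction(t, civs, SPEED, SIZE, 2000, rng)
        print(f"t = {t:4.1f} Gyr: {frac:.2f} of the box claimed")
```

The printed fractions depend entirely on the made-up parameters; raising `n_candidates` makes the box saturate sooner. The periodic box is a design choice to avoid edge effects, so the sketch models a "typical" chunk of a much larger volume rather than a bounded universe.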

This will bring the universe and all its contents and peoples heavenward, but only those people still alive during the "coming together" will make it to heaven itself.

The reversal of expansion leads to the Omega Point, the Singularity of Heaven, if full rationality is followed.

Fyodorov's understanding of thermodynamics was incomplete: under the correct theories, resurrecting the dead is not viable given the alien expansion that will happen. If you die, you will also be unable to defend your territory, and all the matter holding records and memories of you will be repurposed for something else.

This means you must not commit suicide, no matter what, if you want to get to heaven. One minor note: "suicide" is defined here as anything other than trying as hard as physically possible to live forever, but that's objectively correct anyway.

Cryonics and records won't save you: dead agents don't bargain.

As the universe comes together, full rationality, including perfect "instrumental" rationality, is required to become fully integrated into the perfect Teilhard noosphere. All civilizations that are part of the noosphere will completely out-compete anyone who rejects it, using super-rational methods.

Super-rationality is also required to make sure everyone coordinates the collection of gravitational shear energy, because otherwise the universe will become unsurvivable when it "crunches down". Singularity formation must be prevented by any means necessary, up to and including the termination of everyone who does not use correct "instrumental" rationality.

Heaven is achieved when the entire universe combines into an infinite information state, in full bidirectional communication with all its parts.

Without proper, all-inclusive rationality, this will not happen.

Therefore, suicide will not lead to heaven, and perfect "instrumental" rationality is required as part of the full, all-inclusive version of rationality.

QED.