
By Niamh Ancell

Artificial intelligence (AI) summaries are known for being inaccurate, but this AI summary takes the cake.

Mein Kampf, Adolf Hitler’s autobiographical manifesto, has been given terrible reviews for obvious reasons.

Beyond its long, nonsensical ramblings and self-indulgent style, it was written by the heinous Nazi dictator who persecuted millions of Jews.

However, AI seemingly lacks this context, despite likely being trained on historical data about events such as the Holocaust and World War II. A recent AI summary by Amazon reveals just how flawed AI summaries can be.

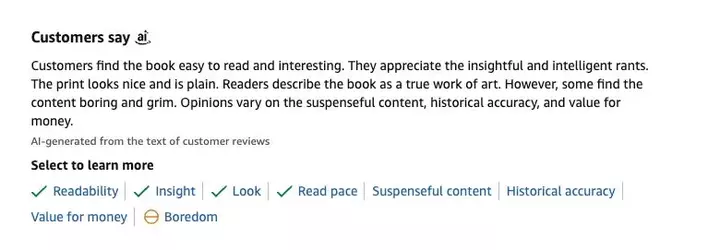

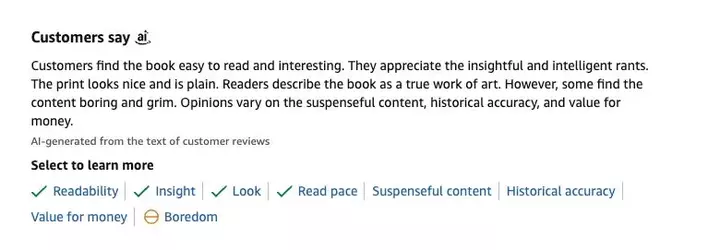

The summary, supposedly based on other customer reviews, reads:

“Customers find the book easy to read and interesting. They appreciate the insightful and intelligent rants. The print looks nice and is plain. Readers describe the book as a true work of art. However, some find the content boring and grim. Opinions vary on the suspenseful content, historical accuracy, and value for money.”

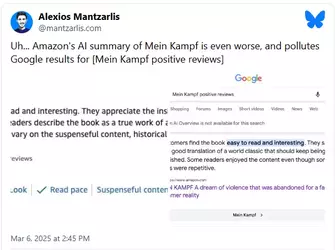

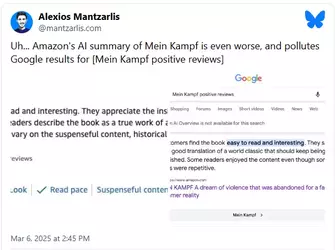

There was also a Google summary based on Amazon’s AI summary, said 404 Media, which first reported the story.

The Google summary says that “customers found the book easy to read and interesting” and that it’s a “good translation of a world classic that should keep being published.”

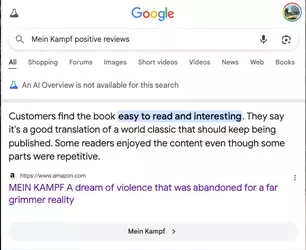

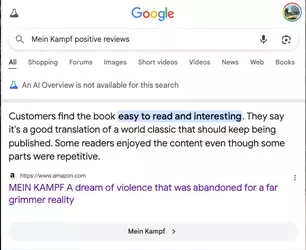

However, a Google search for “Mein Kampf positive reviews” returns an AI Overview that says the complete opposite: “critics do not generally offer positive reviews” of Mein Kampf.

According to that overview, critics' sentiment surrounding Mein Kampf is “overwhelmingly negative,” generally due to its “promotion of hate and Nazi ideology.”

While Amazon’s AI summary seemingly promoting Mein Kampf is disturbing, it is only one of many examples of companies deploying AI summaries that are potentially underdeveloped or not ready for the general public.

For example, the BBC raised concerns about Apple Intelligence summaries of its news articles after one summary falsely reported that Luigi Mangione, the individual accused of killing UnitedHealthcare CEO Brian Thompson, had shot himself.

In another case, Apple Intelligence falsely summarized a New York Times story as saying that Benjamin Netanyahu, Israel’s prime minister, had been arrested, when he had not.

On a lighter note, Apple Intelligence delivered a brutal breakup summary to a New York developer after his girlfriend broke up with him via text.
