I guess the only use for cards anymore will be AI: things that really need that extra computational horsepower.

Consumer-level AI runs on the CPU. You can't assume the presence of a discrete GPU (dGPU) for a mass-market application, which is why AMD, Intel, Apple, and Qualcomm are all putting NPUs on their CPUs.

There is a fundamental rule of computer architecture: it is always more efficient to put everything on the same die if you can. In the 1980s, your floating-point unit (FPU), if you had one at all, was a separate chip from your CPU. If you wanted hardware floating-point math (i.e., fast calculations with fractional numbers), you had to buy a separate accelerator like this one, and it cost over $1000 in today's money. Today, the FPU is on the CPU die.

If you wanted sound, you needed a card like this. Today, virtually every motherboard has integrated sound. It's not on the CPU, but being built into the motherboard is the next best thing.

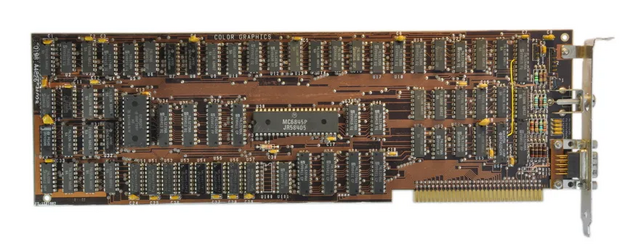

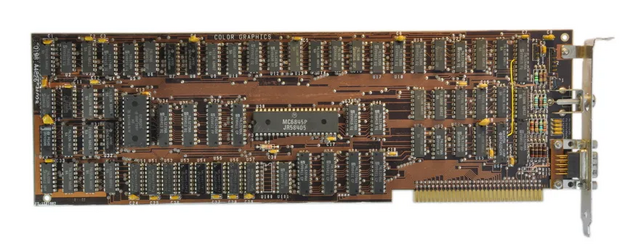

Any graphical output at all required a video card. This is a CGA video card, and the graphics it could output. For years now, mainstream CPUs have had integrated GPUs capable of far better graphics.

If you wanted to use the internet, you needed a card like this. Today, pretty much every motherboard has integrated Ethernet, and even plain gigabit Ethernet is tens of thousands of times faster than this 14.4 kbit/s modem.

Wanted a quad-core machine back then? Be prepared to shell out for a board that held four Pentium CPUs. Multiple cores on the same die? Now that's madman talk!

The reason I expect 3D accelerator cards to die is that every other accelerator card is dead or relegated to a meaningless niche. At some point, the tech is good enough that paying big money for an add-on is just not something consumers want. The most logical place for the GPU to end up is on the CPU rather than the motherboard.