KDE has opened submissions for the goals it should focus on over the next few years.

I've put in a task to keep X11 support going:

https://phabricator.kde.org/T17393

I'll need some of you boys to sign up and add your voices.

Why do this?

To make the Wayland crowd seethe. Why else?

I forget where I saw it (Telegram, I think), but the Kubuntu 24.04 release notes explicitly say the Wayland login option exists only for testing and that X11 is the default. That's a demotion by a flavor of the most popular distro, so X11 is here to stay for at least a good ten years of mainstream use and support.

(Just have some AI coding team fix all the bugs in ten years.)

I wrote about this in another thread, but multimodality is basically a necessary step because LLMs have a very incomplete inner world. Put simply, because all they have is text, they don't understand the fundamental ways "our" world is put together, and that's where their mistakes come from. Their approximation of reality is completely alien to ours, as anyone who has played around with an LLM knows. You see the massive amounts of text shoved into them and think they should turn out superintelligent, but that text gives them less information about our world than a toddler gets in its short life. A more accurate world representation needs more data. There are still problems to solve, but at this point it's mainly an engineering and scaling problem, IMO.

People working at OpenAI seem worried, tho.

Definitely. Video platforms, livestreaming platforms, and any other platform with access to large troves of archived or live video have a big advantage for training AIs, at least in terms of information density. Training only on textual internet data may already be hitting its capability limits, or soon will.

OpenAI lacking even a basic iMovie-like interface means it's their game to lose, and they're being cagey and coy with Sora (like they were with GPT-2; now random online models routinely show off how they beat GPT-2 in benchmarks).

OpenAI needs to get knocked the fuck down already.

Also about NPUs and Linux:

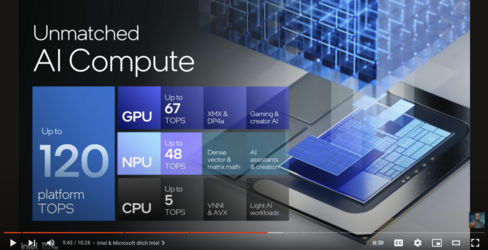

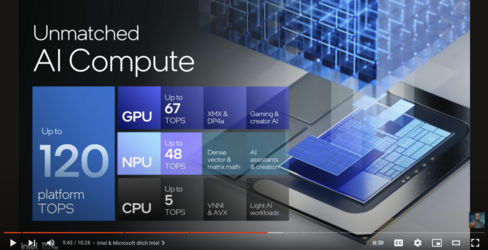

It probably misses a fair bit, but the rough figure being used to rate AI performance seems to be TOPS (trillions of operations per second). Cue the megahertz myth all over again.
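For context on what a TOPS number usually means: vendors typically derive a peak figure as MAC units × 2 operations per multiply-accumulate × clock rate, often at INT8 precision. A minimal sketch of that arithmetic, plus the TOPS-per-watt efficiency figure; the 4096 MAC units, 1 GHz clock and 4 W draw are hypothetical numbers, not any real chip. Like megahertz, it is a theoretical peak, not delivered performance.

```python
# Theoretical peak throughput of a MAC-array accelerator (NPU or GPU).
# Assumption: one multiply-accumulate (MAC) counts as 2 operations,
# the usual convention behind vendor TOPS figures.

def peak_tops(mac_units: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Peak trillions of operations per second, assuming every MAC unit
    finishes one MAC per clock cycle (real workloads get less)."""
    return mac_units * ops_per_mac * clock_hz / 1e12

def tops_per_watt(tops: float, watts: float) -> float:
    """Efficiency figure: peak TOPS divided by power draw in watts."""
    return tops / watts

# Hypothetical accelerator: 4096 INT8 MAC units at 1.0 GHz, drawing 4 W.
tops = peak_tops(4096, 1.0e9)
efficiency = tops_per_watt(tops, 4.0)
print(f"{tops:.3f} TOPS, {efficiency:.3f} TOPS/W")
```

TOPS/W is the number where NPUs actually compete; raw peak TOPS is where a big GPU usually wins.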

A screenshot from the video on Intel's Lunar Lake (fabbed at TSMC), with the presenter clumsily handling the chips:

Even on a laptop chip, Intel's Xe integrated GPU (the one that "only barely does DirectX 12") is significantly faster than the dedicated NPU trash.

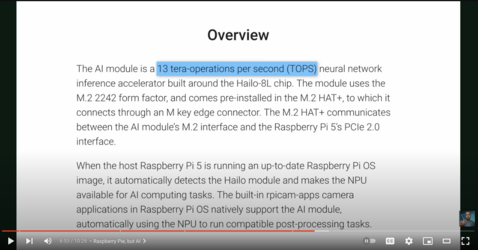

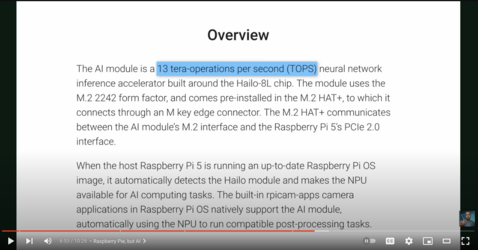

Also from the same vid: this literal real-life soyjak had a segment on how the Brits are shoehorning the AI fad into the Raspberry Pi, with a little add-on board (a "HAT" in Pi speak) that connects an M.2 "AI accelerator" module to the Raspberry Pi 5's PCIe 2.0 (yes, two point zero) interface, giving it 13 TOPS.

The latest Raspberry Pi OS "natively supports the module", and for now it can be used for some computer vision tasks.
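To put "PCIe 2.0" in numbers: PCIe 2.0 signals at 5 GT/s per lane with 8b/10b line encoding, while PCIe 3.0 runs 8 GT/s with 128b/130b. A rough sketch of per-lane, per-direction bandwidth, assuming the Pi 5 link is a single lane (x1) and ignoring packet and protocol overhead, so real throughput is lower.

```python
# Rough usable bandwidth of one PCIe lane, per direction, before
# packet/protocol overhead (TLP/DLLP headers are ignored here).

def lane_bandwidth_mb_s(gigatransfers_per_s: float, payload_bits: int, line_bits: int) -> float:
    """Each transfer carries one bit per lane; line coding spends
    line_bits on the wire for every payload_bits of data."""
    payload_bits_per_s = gigatransfers_per_s * 1e9 * payload_bits / line_bits
    return payload_bits_per_s / 8 / 1e6  # bits -> bytes -> MB (10^6 bytes)

# PCIe 2.0: 5 GT/s with 8b/10b encoding
gen2 = lane_bandwidth_mb_s(5.0, 8, 10)
# PCIe 3.0: 8 GT/s with 128b/130b encoding
gen3 = lane_bandwidth_mb_s(8.0, 128, 130)

print(f"PCIe 2.0 x1: {gen2:.0f} MB/s")
print(f"PCIe 3.0 x1: {gen3:.0f} MB/s")
```

That works out to roughly 500 MB/s for a Gen 2 lane versus about 985 MB/s for Gen 3, which is the ceiling any accelerator on that link has to live under.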

The segment runs from 6:33 to 7:00 (about 30 seconds), leaving out the soy commentary this loser thinks people want to hear:

So, in summary: NPUs can do a fraction of what GPUs already can, matter only for energy efficiency, and overall are boring, MBA-pushed shite.

Also, LLMs still need a way to check themselves for laziness, like refusing to do simple things such as running code, or not using data they've already been given.

And they need even a basic ability to break one task into smaller tasks, instead of doing an extremely poor job of a large task in a single response.
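Both wishes boil down to a plan-then-execute loop with a check on each step. A minimal sketch, assuming a hypothetical `model` callable (prompt in, text out) and a caller-supplied `check` function; none of these names come from any real LLM API, and `fake_model` is a stub so the example runs offline.

```python
import re
from typing import Callable, List

# Hypothetical interface: any function that takes a prompt and returns text.
Model = Callable[[str], str]
# Hypothetical checker: (step, answer) -> True if the answer is acceptable.
Checker = Callable[[str, str], bool]

# Matches numbered lines like "1. do X" or "2) do Y" and captures the text.
STEP_RE = re.compile(r"^\s*\d+[.)]\s*(.+)$")

def plan(task: str, model: Model) -> List[str]:
    """Ask the model to split a task into numbered steps; keep only numbered lines."""
    reply = model(f"Break this task into small numbered steps, one per line: {task}")
    steps = []
    for line in reply.splitlines():
        match = STEP_RE.match(line)
        if match:
            steps.append(match.group(1).strip())
    return steps

def run(task: str, model: Model, check: Checker, max_retries: int = 2) -> List[str]:
    """Execute each step in its own prompt, re-prompting while the check fails."""
    results = []
    for step in plan(task, model):
        answer = model(f"Do only this step: {step}")
        retries = 0
        while not check(step, answer) and retries < max_retries:
            answer = model(f"Your previous answer was incomplete. Redo this step fully: {step}")
            retries += 1
        results.append(answer)
    return results

# Offline stub standing in for a real model.
def fake_model(prompt: str) -> str:
    if prompt.startswith("Break"):
        return "Sure:\n1. load the data\n2. run the code\n3. summarise the results"
    # Echo the step text (everything after the last ": ") as the "answer".
    return "done: " + prompt.rsplit(": ", 1)[1]

print(run("analyse the CSV", fake_model, lambda step, answer: step in answer))
```

The point of one prompt per step is that the model never has to do the whole job in a single response, and `check` is where something like "did it actually run the code?" would plug in.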