
Note: I will add additional information at the bottom of this post, since the article doesn't cover everything.

Experts say the channel’s profit structure suggests a high level of demand and a lack of understanding that the images it creates are illegal

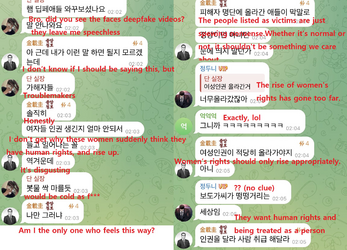

Screenshots from inside the over 220,000-member Telegram chat room that generated deepfakes of women using AI technology.

Pornographic deepfakes, or digitally altered images generated through AI technology, are being widely distributed on the Telegram messaging app in Korea, including on one Telegram channel that produces deepfake nude images on demand for over 220,000 members. The channel, which was easily accessed through a basic online search, charges money for the fabricated images, which are based on photographs of actual people. Experts say the channel is a stark illustration of the current state of deepfake pornography — which many people are not even aware is illegal.

A Telegram channel that the Hankyoreh accessed through a link on X (formerly known as Twitter) on Wednesday features an image bot that converts photographs of women uploaded to the channel into deepfake nude images. When the Hankyoreh entered the channel, a message popped up asking the user to “upload a photograph of a woman you like.”

The Hankyoreh uploaded an AI-generated photograph of a woman and within five seconds, the channel generated a nude deepfake of that photograph. The deepfake tool even allows users to customize body parts in the resulting image. As of Wednesday, Aug. 21, the Telegram channel had some 227,000 users.

The Telegram channel was very easy to access. A search for specific terms on X and other social media brought up links to the channel, and one post with a link was even featured as a “trending post” on X. Despite recent coverage of sex crimes involving deepfakes and related police investigations, posts are still going up to promote the channel.

The Telegram channel generates up to two deepfake nudes for free but requires payment for further images. Each photograph costs one “diamond” — a form of in-channel currency worth US$0.49, or about 650 won — and users are required to purchase a minimum of 10 diamonds, with discounts available for bulk purchases. Diamonds are also given out for free to users who invite friends to the channel, in an obvious attempt to broaden the user base. Cryptocurrency is the only accepted form of payment, likely for anonymity reasons.

The chat room does not allow users to send messages or pictures to one another. The trouble is that there is no way of knowing how the deepfakes the channel generates are being used by members.

“Sexually exploitative deepfake images created in Telegram rooms like these get shared in other group chat rooms, and that’s how you end up with mass sex crimes,” said Seo Hye-jin, the human rights director at the Korean Women Lawyers Association.

“If there are over 220,000 people in the Telegram room at the stage where these images are being manufactured, the damage from distribution is likely to be massive,” she assessed.

The existence of such a huge Telegram channel with a revenue-generating model is likely a reflection of the reality in which creating highly damaging deepfakes is seen as no big deal.

“The fact that there’s a profit structure suggests that there’s a high level of demand,” said Kim Hye-jung, the director of the Korea Sexual Violence Relief Center.

“Despite the fact that sexually degrading women has become a form of ‘content’ online, the social perception of this as a minor offense is a key factor that plays into sex crimes,” she went on.

By Park Go-eun, staff reporter

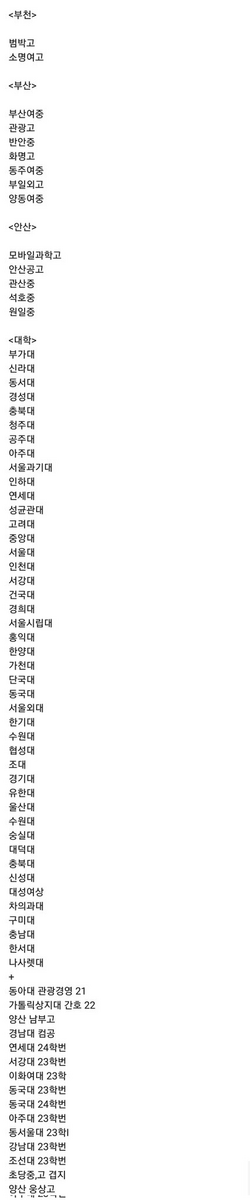

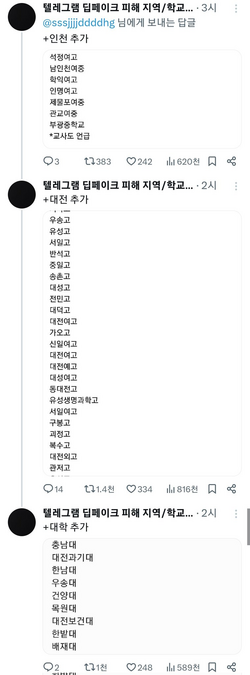

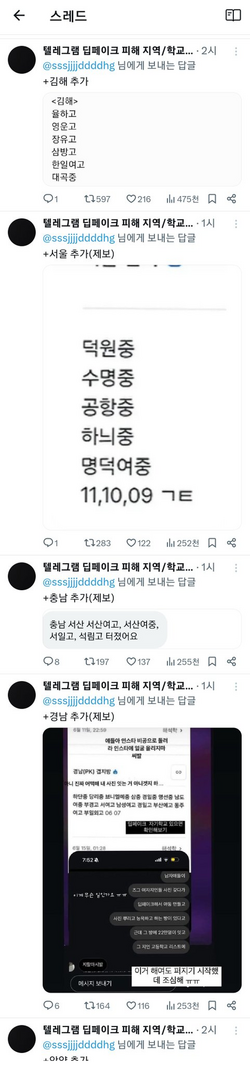

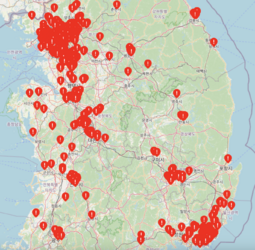

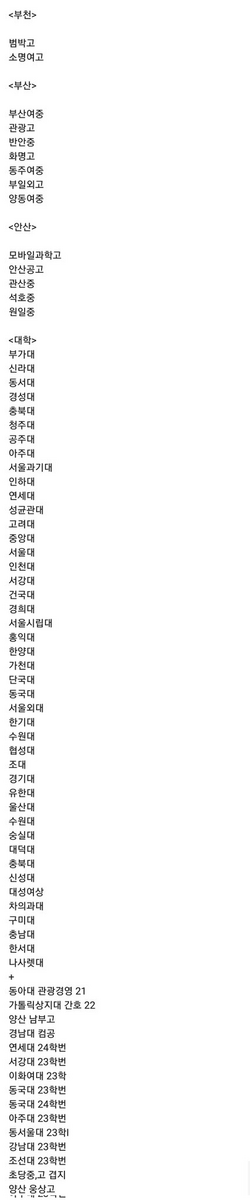

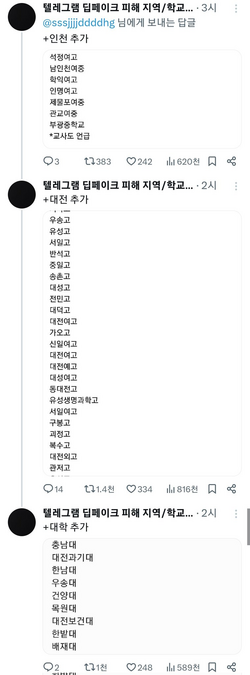

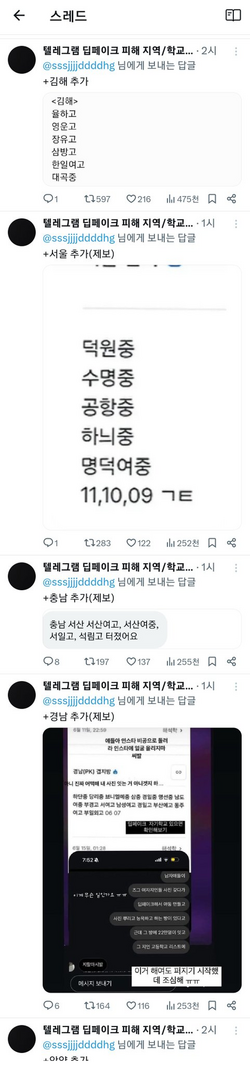

Telegram rooms in which female students' faces were photoshopped into porn using AI were discovered in over 70% of South Korean schools. Girls in South Korea created a list of schools to check if they were victims. This is just a part of it:

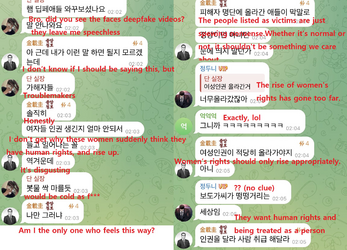

A sample of the chat room from their Telegram:

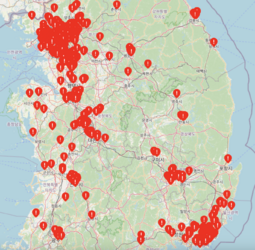

A feminist in South Korea has mapped out schools where deepfake child pornography was created by male students using photos of girls on Telegram. The map is based on the list shown above. The website: https://deepfakemap.xyz/
