Honestly the based thing to do legislation-wise would be for the US to just mandate that the most recent datasets be made open source, host them on a government server, and allow anyone with the money/processing power to make models accordingly. Then just spend money updating the datasets. Big tech pulled up the ladder on data scraping specifically so everyone is stuck with neutered models and cannot accumulate the data needed to make new ones anymore.

That's not true at all; nearly every machine learning model is trained on web-scraped images from resources like LAION. The culture around anything AI has basically ignored intellectual property.

AI Derangement Syndrome / Anti-AI artists / Pro-AI technocultists / AI "debate" communities - The Natural Retardation in the Artificial Intelligence communities

- Thread starter gagabobo1997

- Joined

- Jul 12, 2021

This didn't happen.

When OpenAI and the rest of them argue in court that it's impossible to make any of this stuff work without legally questionable scraping

Responding to the idea that they could just train on public domain works, OpenAI said that they couldn't create "today's leading models" without training on copyrighted data. Which is obviously true: you can't build a modern, useful, top-tier model with only 100-year-old data.

The projects you've been involved with also trained on copyrighted data. You just obtained full permission to use it first. It was still copyrighted data.

- Joined

- Jan 31, 2022

Nah, honestly, learning is closer to the truth than a collage. I've seen a lot of artists say that it's a collage, but to me that just shows how you can sometimes be incredibly convincing and well-spoken while also being completely wrong.

It's not quite a collage, but a collage is closer to the truth than "it learns and gets inspiration just like a human does." Information from the training set gets embedded in the neural network parameters, which is why all these AIs are capable of reproducing copyrighted works. That is what is at issue in all the pending IP lawsuits.

There's no issue with AI upscaling technology because it wasn't trained by scraping the internet.

If it were collaging, then the training data would need to be retained so that it could be queried and copied from. But a model like SDXL is orders of magnitude smaller in file size than the dataset used to train it, far more than any compression algorithm could handle, even if we somehow took a compression algorithm from 50 years in the future and brought it back in time for this one purpose.
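To put rough numbers on the size argument, here's a back-of-the-envelope sketch in Python. The inputs are assumptions, not figures from this post: a ~6 GB checkpoint (the size that comes up later in the thread), roughly 2 billion training images, and an average of 100 kB per compressed image. Swap in real numbers if you have them.

```python
# Rough "bytes of weights per training image" estimate.
# ASSUMPTIONS (not from the post): ~6 GB checkpoint, ~2 billion training
# images, ~100 kB average compressed image. All three are placeholders.
CHECKPOINT_BYTES = 6 * 10**9
TRAINING_IMAGES = 2 * 10**9
AVG_IMAGE_BYTES = 100 * 10**3

def bytes_per_image(checkpoint_bytes: int, n_images: int) -> float:
    """Model bytes available per training image if the weights were split evenly."""
    return checkpoint_bytes / n_images

def required_ratio(avg_image_bytes: int, per_image_budget: float) -> float:
    """Extra compression ratio needed to squeeze each image into that budget."""
    return avg_image_bytes / per_image_budget

budget = bytes_per_image(CHECKPOINT_BYTES, TRAINING_IMAGES)
ratio = required_ratio(AVG_IMAGE_BYTES, budget)
print(f"{budget:.1f} bytes of weights per image")        # 3.0 bytes of weights per image
print(f"~{ratio:,.0f}:1 compression on top of JPEG")     # ~33,333:1 compression on top of JPEG
```

This only describes the average budget per image; it doesn't rule out a small subset of heavily repeated images being memorized, which is the overfitting case the next paragraphs get into.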

In technical speak, you're not storing the original data, you're storing the weights between the nodes of a neural network. That is new data generated from observing the training data, and from a legal standpoint, that's also why saying AI is plagiarism doesn't really work (how can something be plagiarism if it retains literally none of the original data?).

Speaking less technically, though, the process is literally just replicating the way very young children learn to speak for the first time: shitloads of data combined with positive and negative reinforcement. It's why shows for young kids are so repetitive and why kids constantly repeat the same few words when they've started to speak. They may not even know what a word means, but they know that in certain circumstances, when they say it, they get a positive response.

As for copyrighted works being copied, that's almost always due either to unusual edge cases which are actually highly undesirable (hence the name "overfitting"; it's a negative behaviour that is specifically labelled), or simply to the abundance of that work within the dataset (AIs generate the Mona Lisa pretty much one-to-one because there are a lot of images of the Mona Lisa on the internet). This also has the interesting side effect that AI does tend to replicate Shutterstock watermarks, because those are also so common within the dataset, and it usually attempts to add signatures (though the model almost never copies a specific signature; it basically just scribbles in a specific place at a specific size).
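A toy illustration of the duplication point, as a minimal sketch: this is a word-level bigram model with greedy decoding, not a diffusion model, and the captions are made up. Because one caption appears 50 times, it wins the "most likely next word" vote at every step, so the model spits it back out verbatim.

```python
from collections import Counter, defaultdict

def train_bigrams(captions: list[str]) -> dict[str, Counter]:
    """Count which word follows which across all captions."""
    model: dict[str, Counter] = defaultdict(Counter)
    for caption in captions:
        words = caption.split() + ["<end>"]
        for prev, nxt in zip(words, words[1:]):
            model[prev][nxt] += 1
    return model

def greedy_generate(model: dict[str, Counter], start: str, max_len: int = 10) -> str:
    """Always pick the most frequent next word (no sampling, no randomness)."""
    out = [start]
    while len(out) < max_len:
        followers = model.get(out[-1])
        if not followers:
            break
        nxt = followers.most_common(1)[0][0]
        if nxt == "<end>":
            break
        out.append(nxt)
    return " ".join(out)

# Hypothetical training captions: one item is heavily duplicated.
captions = (
    ["the mona lisa by leonardo da vinci"] * 50
    + ["the cat on a mat", "the dog by the door", "a mona lisa parody with a cat"]
)
model = train_bigrams(captions)
print(greedy_generate(model, "the"))  # the mona lisa by leonardo da vinci
```

Real models are far more complicated, but the same pressure applies in spirit: items repeated many times in training are the easiest to regurgitate, which is why deduplicating training data is a commonly recommended mitigation.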

- Joined

- Jul 12, 2021

There might be something to be said for the difference between infringing on a specific image vs. infringing on a character, or on the exclusive right to create derivative works. This is why fan art can still be infringement even if the artist draws the character doing something new which was never expressed by the original creators.

As for copyrighted works being copied. That's almost always either due to unusual edge-cases which are actually highly undesirable (hence why it has the name "overfitting", it's a negative behaviour that is specifically labelled), or it is simply due to the abundance of that within the dataset (AIs generate Mona Lisa pretty much one-to-one because there's a lot of images of the Mona Lisa on the internet).

A model might not be overfit on a specific image of the Simpsons, but with many thousands of images of them, it can learn them well enough to draw them in various poses and scenarios. I don't know that this would be considered overfitting, it's not like a specific image is "fried" into the model...the ability to learn what a Simpsons character is is the whole point of the model's ability to generalize. If it can make Abraham Lincoln frying an egg, and that doesn't mean Lincoln is overfit, then what if it can also make Bart Simpson frying an egg?

I would say that misuse of the model as a tool is still the user's fault, though. You ask for the Simpsons, you get them, you choose how to use the resulting image in a way that draws the ire of the copyright holders.

- Joined

- Feb 16, 2021

Me too, since social media algorithms already made it basically impossible to get a foothold and build a name for yourself as a real artist unless you were already established like Artgerm or Frank Cho, or are willing to pay money to boost your posts (which is still 50:50 on delivering meaningful gains). If I can't succeed on hard work and talent, then burn the whole thing down.

I'm pro AI art replacing actual artists

Actual artists had legitimate grievances, but I've seen far too many of them willing to jump on the harassment bandwagon and ruin lives over retarded shit

Why would I want to deal with a group so mentally ill when I could just ask a machine to do it and get no sass?

Plus I've noticed the people who bitch the loudest and hardest about AI usually suck at drawing.

Bagger 293

kiwifarms.net

- Joined

- Apr 24, 2022

Yes this is the whitepill on AI. God willing it will be so ubiquitous that everything in its domain will be made worthless and nobody will trust anything they see, hear or read on the public-facing internet for fear it's actually from AI and not a real person.

Soon, and I hope this'll happen, most forms of digital art will become effectively worthless while the entirely physical will thrive

Of course, the conjugate blackpill to that is that this doesn't happen and most people continue to just take in the same slop as the internet already is, but at a rate greatly accelerated by AI's capabilities. Boomers I can already see going this way unfortunately.

- Joined

- Aug 14, 2022

Already saw AI-generated Christmas cards among my older relatives, and AI-generated pizzeria ads. This has spread like a Los Angeles wildfire on Facebook and there's no turning back.

Yes this is the whitepill on AI. God willing it will be so ubiquitous that everything in its domain will be made worthless and nobody will trust anything they see, hear or read on the public-facing internet for fear it's actually from AI and not a real person.

Of course, the conjugate blackpill to that is that this doesn't happen and most people continue to just take in the same slop as the internet already is, but at a rate greatly accelerated by AI's capabilities. Boomers I can already see going this way unfortunately.

Bagger 293

kiwifarms.net

- Joined

- Apr 24, 2022

I forgot the name and had to look it up, but wasn't this AI-advertised, awful, boomer-bait Willy Wonka thing fairly infamous for a while? Some people are probably stupid or unaware enough to keep being fooled, but you would hope that after some time it becomes common wisdom (again) not to trust anything you see online.

Already saw AI generated Christmas cards among my older relatives, or AI generated pizzeria ads. This has spread like a Los Angeles wildfire on Facebook and there's no turning back.

It would be easy to complain that the adverts are soulless AI art, but really it's not like regular ads are that much better; I think these are just more honestly targeted at aged Facebook users. That could be where some of the rage about this stuff comes from: it demonstrates too clearly the awful taste of some people.

I also laughed a little too much when I saw this in the article. I wonder if he got the idea for it from an AI prompt. So bizarre.

- Joined

- Jan 31, 2022

Fan art is basically always IP infringement according to the law; it's just that they don't enforce it most of the time. But according to the underlying theory of IP law, there is nothing to prevent Sony from saying "you are never allowed to draw Spider-Man again", and you can't even make a fair use argument. We've already seen stuff like this, for example when a family was refused permission to put Spider-Man on their dead kid's grave.

There might be something to be said for the difference between infringing on a specific image vs. infringing on a character, or on the exclusive right to create derivative works. This is why fan art can still be infringement even if the artist draws the character doing something new which was never expressed by the original creators.

This is why I don't buy into arguments against AI that rely on the concept of "intellectual property": you're trying to have your cake and eat it too. It's asking for extremely strict enforcement of IP that only exists because you technically infringed on another person's IP, which is a ridiculous stance.

Personally, I treat IP as if it doesn't exist because I don't think it should exist. It's just "I had this idea first and now I own it and I should have control to make sure others don't think the same idea I had". Fuck that, copyright and patents should be abolished.

- Joined

- May 24, 2018

Do your part: for every whiny post about AI, produce 3 images in whatever slop generator you prefer.

At this point I support AI solely to see these people get replaced by it. Skynet can't come soon enough

Total Artfag death.

Twitter freaks don't know because they are hacks who wouldn't know the first thing about doing art. They all have extremely rigid styles because it's all they know how to do and they can't get out of that box; show them something outside of it and their brain breaks.

Twitter freaks wouldn’t know because they don’t have the autistic attention to detail.

Twitter freaks never learned the fundamentals, they can't spot a stylistic choice because their brain is unable to process it as such.

- Joined

- Nov 15, 2021

Yes, it did. Google argued in court that their business model requires scraping without paying. All of the big names in AI have made some variation of the argument that obtaining permission would be prohibitively expensive, and therefore they shouldn't have to do it.

This didn't happen.

In technical speak, you're not storing the original data, you're storing the weights between the nodes of a neural network.

No compression algorithm stores the original data. With a JPEG, you're not storing the original data, you're storing discrete cosine transform weights. There's lots of research literature showing how NN training is a form of compression, and compression was one of the earliest proposed applications, back in, I think, the '90s.
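To make the JPEG point concrete, here's a minimal pure-Python sketch of the transform step only: an orthonormal 8x8 DCT-II, its inverse, and a crude uniform quantizer. Real JPEG also uses per-frequency quantization tables, zigzag ordering and entropy coding, which are left out; the gradient block and the step size of 16 are arbitrary choices for illustration. What gets kept is a handful of coefficients, not pixels, yet the pixels come back.

```python
import math

N = 8  # JPEG works on 8x8 blocks

def _scale(k: int) -> float:
    """Orthonormal DCT scaling factor for frequency index k."""
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)

def _basis(k: int, n: int) -> float:
    """Cosine basis value for frequency k at sample position n."""
    return math.cos((2 * n + 1) * k * math.pi / (2 * N))

def dct2(block):
    """Forward 2-D DCT-II: pixels -> frequency coefficients."""
    return [[_scale(u) * _scale(v) * sum(block[x][y] * _basis(u, x) * _basis(v, y)
                                         for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]

def idct2(coeffs):
    """Inverse 2-D DCT (DCT-III): frequency coefficients -> pixels."""
    return [[sum(_scale(u) * _scale(v) * coeffs[u][v] * _basis(u, x) * _basis(v, y)
                 for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]

def quantize(coeffs, step: int):
    """Uniform quantization; real JPEG uses a per-frequency table instead."""
    return [[round(c / step) * step for c in row] for row in coeffs]

def max_error(a, b) -> float:
    """Largest absolute per-pixel difference between two blocks."""
    return max(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))

# A smooth 8x8 gradient, level-shifted by -128 as JPEG does before the DCT.
block = [[8 * x + 4 * y - 128 for y in range(N)] for x in range(N)]

coeffs = dct2(block)
lossless = idct2(coeffs)
lossy_coeffs = quantize(coeffs, step=16)
lossy = idct2(lossy_coeffs)

kept = sum(1 for row in lossy_coeffs for c in row if c != 0)
print("round-trip error:", max_error(block, lossless))
print("nonzero coefficients after quantization:", kept, "of", N * N)
print("max pixel error after quantization:", max_error(block, lossy))
```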

It's axiomatic in computers that a computer program that can reliably reproduce a piece of data is equivalent to storing that data. It doesn't matter how complex or esoteric the storage scheme is. If your computer program reproduces an image on command, it is storing the image. Obfuscating how it is stored does not change the fact that it is stored.

ChatGPT reproduces copyrighted code bases with just a few prompts. It's not an edge case.

As for copyrighted works being copied. That's almost always either due to unusual edge-cases

- Joined

- Jul 12, 2021

Are you talking about the case where the judge nuked the plaintiffs' claims for being a confused mass of wild allegations?

Yes, it did. Google argued in court that their business model requires scraping without paying.

Never mind that scraping by itself has been ruled and reaffirmed to be legal, and then the process of training a model with that data doesn't infringe on anything, since the model doesn't store images. They SHOULDN'T have to pay when they haven't done anything illegal.

You're essentially arguing that an artist looking up a reference online should be required to pay whoever made that reference, even if they don't do anything to infringe on that image.

Are you arguing that a 6 GB model "stores" an infinite number of images, due to the nature of latent space and the ability to seed your output?

It's axiomatic in computers that a computer program that can reliably reproduce a piece of data is equivalent to storing that data.

You can type "cat wearing a tuxedo" at seed 123 on a specific hardware and software configuration and always get the same image. The same goes for seed 124, and 125, on and on into infinity. All "compressed and stored" within the model.
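A minimal sketch of the seed point, using a hypothetical `toy_generate` function as a stand-in for a real image model: its output is a pure function of (prompt, seed), so the same pair always gives the same "image" and changing only the seed gives a different one. Real pipelines can additionally vary across hardware and library versions, as the post notes.

```python
import random

def toy_generate(prompt: str, seed: int, size: int = 4) -> list[list[int]]:
    """Hypothetical stand-in for an image model: deterministic in (prompt, seed)."""
    # random.Random converts a str seed to an int deterministically, so this is
    # stable across runs, unlike Python's built-in hash(), which is salted per process.
    rng = random.Random(f"{prompt}|{seed}")
    return [[rng.randrange(256) for _ in range(size)] for _ in range(size)]

first = toy_generate("cat wearing a tuxedo", seed=123)
again = toy_generate("cat wearing a tuxedo", seed=123)
other = toy_generate("cat wearing a tuxedo", seed=124)
print(first == again)  # True: same prompt + seed reproduces the same output
print(first == other)
```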

Likewise, you might as well argue that Microsoft Paint "stores" every possible image, because if you just input a complex enough seed (one that involves clicking on each pixel to manually draw the image), voila, you've got whatever image you want. You just need the right inputs to reproduce it.

- Joined

- Nov 15, 2021

it's not like a specific image is "fried" into the model

Typically, some fraction of the training set can be pulled back out of any model, often imperfectly, if you know how it was tokenized. The public-facing AIs don't make this information public, and further obfuscate things via randomization, but it's not too hard to get an idea of what specific images are embedded in the data with the right prompts, e.g. most album covers are baked in. If you knew the tokenization scheme and could disable randomization, you could pull out the album cover reliably. As it is, it comes out slightly differently each time with the exact same prompt:

I would say that misuse of the model as a tool is still the user's fault, though. You ask for the Simpsons, you get them, you choose how to use the resulting image in a way that draws the ire of the copyright holders.

The fundamental issue is not that you, random Kiwifarms user Runch, generated some code that has snippets of my code base in it using ChatGPT. The fundamental issue is that ChatGPT took my code base without my permission and is making money from it by selling a laundered version of it to you. It's not just that they scraped content and are using it for their own academic curiosity. They scraped the content, built a commercial product out of it, charge people to use it, and don't want to pay anybody whose content they used. OpenAI's response is that if it had to pay me for my code instead of just taking it, they wouldn't be able to make money, because their expenses would be too high.

Artists are stupid people, which is why they make stupid arguments, but this is really what it comes down to: Should Google and Microsoft be able to make money by harvesting and selling your content without paying you, or should they have to get your permission first? If Microsoft wins against the plaintiffs, then effectively, you own nothing. The tech giants own everything. If you put something online, they can take it and monetize it, and you are owed nothing.

Are you arguing that a 6 GB model "stores" an infinite number of images, due to the nature of latent space and the ability to seed your output?

No, because storage requires two sides, inputting the thing to be stored, and pulling it back out with the right command. The images that are stored are the ones from the training set that can be pulled back out in a recognizable form.

Likewise, you might as well argue that Microsoft Paint "stores" every possible image

You can store any image by inputting it into Paint first.

- Joined

- Jul 12, 2021

Every possible image can be "compressed" as a series of steps. How many bytes does it take to represent "open Photoshop, create a new canvas 100x100, select the fill bucket tool, select the color blue, click at 50x50 to fill the canvas with blue"?

You do not seriously think that inputting an entire image, pixel by pixel, is the same as inputting 48 bytes, and reliably getting an album cover back out.

This is just a prompt, a password for getting a specific image from the software. It is your argument that since these simple inputs can be repeated by anyone, the 100x100 blue canvas is stored within Photoshop. If someone had managed to copyright it, Photoshop would be infringing on it (rather than the user who followed the steps).
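Here's that thought experiment as a minimal Python sketch. The three-step script format and the `run` interpreter are hypothetical (this is not Photoshop's scripting API); the point is the size comparison between the instructions and the pixels they decode into.

```python
import json
from collections import deque

WHITE, BLUE = (255, 255, 255), (0, 0, 255)

# Hypothetical editor script: new 100x100 canvas, pick blue, bucket-fill at (50, 50).
SCRIPT = [["new_canvas", 100, 100], ["select_color", [0, 0, 255]], ["fill", 50, 50]]

def flood_fill(canvas, x, y, color):
    """Bucket tool: recolor the 4-connected region sharing the clicked pixel's color."""
    target = canvas[y][x]
    if target == color:
        return
    height, width = len(canvas), len(canvas[0])
    canvas[y][x] = color
    queue = deque([(x, y)])
    while queue:
        cx, cy = queue.popleft()
        for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
            if 0 <= nx < width and 0 <= ny < height and canvas[ny][nx] == target:
                canvas[ny][nx] = color
                queue.append((nx, ny))

def run(script):
    """Replay the steps and return the canvas as rows of RGB tuples."""
    canvas, color = [], WHITE
    for op, *args in script:
        if op == "new_canvas":
            width, height = args
            canvas = [[WHITE] * width for _ in range(height)]
        elif op == "select_color":
            color = tuple(args[0])
        elif op == "fill":
            flood_fill(canvas, args[0], args[1], color)
        else:
            raise ValueError(f"unknown step: {op}")
    return canvas

image = run(SCRIPT)
script_bytes = len(json.dumps(SCRIPT, separators=(",", ":")).encode())
pixel_bytes = sum(len(row) for row in image) * 3  # 3 bytes per RGB pixel
print(script_bytes, "bytes of instructions ->", pixel_bytes, "bytes of pixels")
```

Whether the "image" lives in the interpreter or in the script is exactly what's being argued in this exchange; the sketch just shows how lopsided the sizes are.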

How many bytes of instructions would it take to reliably infringe on a simple album cover like Dark Side of the Moon in Photoshop? Remember, we don't have to duplicate it exactly, just enough to violate copyright by confusing potential consumers. Photoshop already contains all the pieces necessary in order to infringe on it. It's an inherent capability of Photoshop that you can input a short "password" and get an infringing image of Dark Side of the Moon...and apparently, this is Photoshop's responsibility.

At what point is the command itself considered an ultra-compressed form of the image, and the user is simply asking the software to decompress something they brought with them?

No, because storage requires two sides, inputting the thing to be stored, and pulling it back out with the right command. The images that are stored are the ones from the training set that can be pulled back out in a recognizable form.

Stable Diffusion doesn't contain an image of "a random penguin wearing a hockey mask" or "Mona Lisa wearing a hockey mask." As a user, I'm providing compressed information and asking the model to expand it out for me.

Endangered Species List

kiwifarms.net

- Joined

- Feb 19, 2024

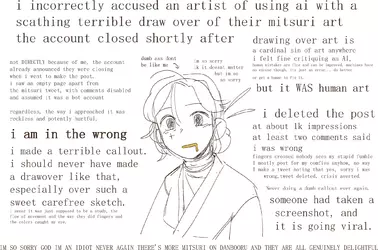

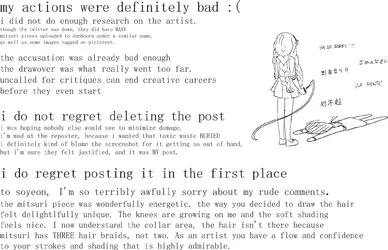

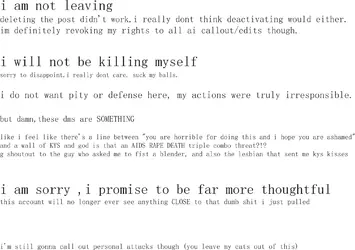

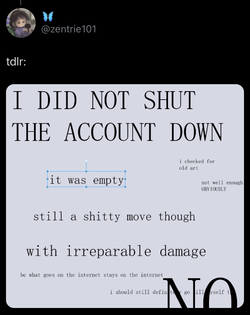

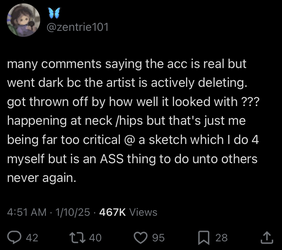

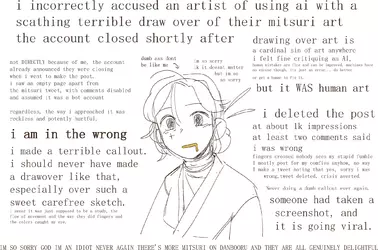

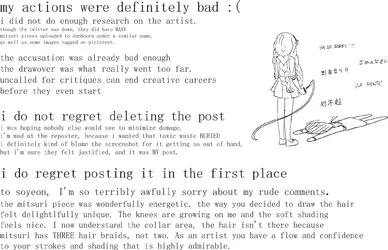

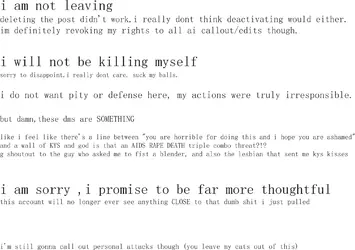

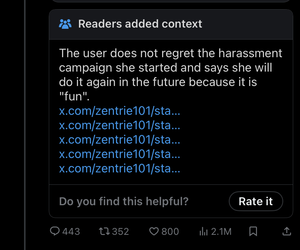

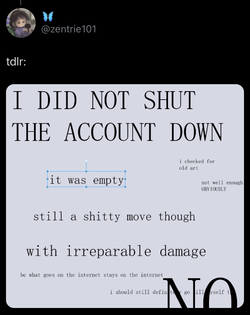

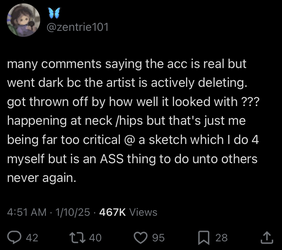

She definitely posted her OC so that haters would do this and also make additional “revenge” art featuring her OC. She already had plenty of art that people could do a fake AI analysis for, but she purposely redirected them to her self-insert design, with all the possible angles they might need in order to copy it.

Amusingly, they're never going to be able to post art again without the replies calling it AI.

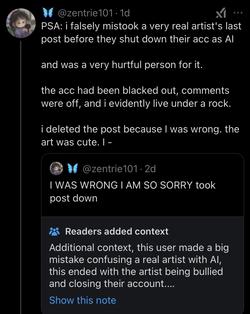

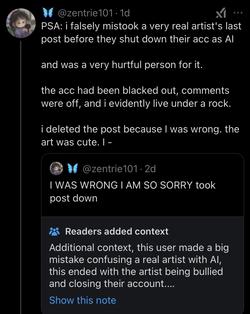

The original poster is being dragged through the fucking coals right now. Apparently the jp artist had already announced their account deletion earlier that day, before the drama, and was actively deleting posts (common for jp artists when leaving a platform) and zentrie101 just took that as confirmation that the artist had been using AI and was trying to cover their tracks.

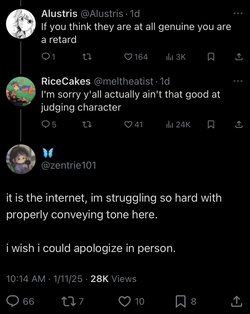

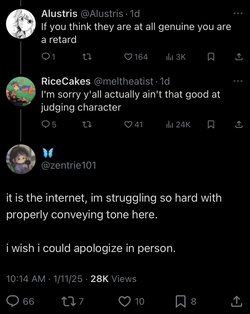

It seems that at this point their performatively self-flagellating “apologies” are pissing people off more than the original false accusation. God, I fucking love this kind of stupid drama that comes out of the chronically online cesspools.

https://x.com/zentrie101/status/1878065174155853934?s=46

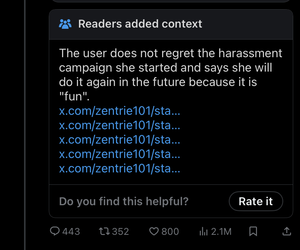

The community notes are a mess

“It’s the bots’ fault that I’m like this”

She’s still yapping about it by the way

She has started to post in Japanese because people called her out for not actually apologizing to the artist and instead kind of just apologizing to the internet. (This is now her pinned post.)

EDIT

- Joined

- Nov 15, 2021

I'm going to cut to the chase. What we are seeing with AI will result in a change to the internet as fundamental as social media. If Google and Microsoft win their class action suits (and I suspect they will), then the price of entry to the internet is that every single thing you post online belongs to the tech giants. They can harvest it, repackage it, and sell it, and they don't need your permission or to pay you.

The horse is out of the barn regarding everything that was already online, but in the future, expect professionals to be much more reticent to put anything in public-facing repositories like GitHub, DeviantArt, and so on. You will see far more of the internet gated behind paywalls or otherwise protected so that Microsoft doesn't get it.
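One lighter-weight form of "otherwise protected" is a robots.txt opt-out. Below is a minimal sketch using Python's standard `urllib.robotparser` to check a hypothetical site's rules. `GPTBot` (OpenAI's crawler) and `Google-Extended` (Google's AI-training control token) are published names; the site, URL and file contents are made up. robots.txt is a request that well-behaved crawlers honor, not access control, so it is much weaker than the paywalls and logins described above.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a portfolio site: opt out of two AI-related
# user agents while leaving ordinary crawling (e.g. search indexing) allowed.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""

URL = "https://example.com/gallery/piece.png"  # hypothetical page

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("GPTBot", "Google-Extended", "Googlebot"):
    print(f"{agent:16} allowed={parser.can_fetch(agent, URL)}")
```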

- Joined

- Jul 12, 2021

Direct infringement is still a thing. If an AI model takes your artwork and repackages and sells it in such a way that someone else manages to draw it out as an overfit image, and you discover that they've used your image in a practical sense, you can still sue. Big companies know that it's not worth suing random individuals (see how fan art is allowed to persist), but individuals may find value in suing companies who carelessly used AI and happened to reproduce their image. This mostly benefits the little guy.

I'm going to cut to the chase. What we are seeing with AI will result in a change to the internet as fundamental as social media. If Google and Microsoft win their class action suits (and I suspect they will), then the price of entry to the internet is that every single thing you post online belongs to the tech giants. They can harvest it, repackage it, and sell it, and they don't need your permission or to pay you.

If however the AI's repackaging of your artwork doesn't result in infringement, then this was always allowed. Artists have been borrowing from each other forever, and I'm sure some have gotten really steamed that they see a clear inspiration but can't do anything about it.

It's correct that a simple login wall with terms of use can protect against scraping (see hiQ v. LinkedIn), but it's still just too beneficial for individuals to make their works as public as possible. You have to market yourself to get any work, and people hate making accounts just to see that work.

The horse is out of the barn regarding everything that was already online, but in the future, expect professionals to be much more reticent to put anything in public-facing repositories like GitHub, DeviantArt, and so on. You will see far more of the internet gated behind paywalls or otherwise protected so that Microsoft doesn't get it.

- Joined

- Mar 20, 2024

Well yeah, it's the old "Everything before my artistic period is just boring boomer shit, and everything after is slop" take. These people are overwhelmingly digital "artists" who can't do anything other than pedo-bait "anumu ugu wholesome" bullshit using the latest and greatest Photoshop version with a full-on version control system built in, layering, and editable software brushes.

I know I'm preaching to the choir, but it really baffles me that digital artists conveniently forget (or are too young to know) that traditional artists had the same issues with digital art once it became accessible. Every argument against AI can be applied to traditional vs digital art.

Codeniggers, I propose : The digital hymen.

Imagine a bare tool that has a few common brushes, no undo, no layers, no arbitrary opacity, and a displacement map that makes touch-ups visible in the third dimension, making working with it as realistic as working on a real canvas. The file format needs to be encrypted to make reverse-engineering difficult, preventing forgery done using the aforementioned tools. Each image is assigned a unique identifier and is visible to all.
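The "no undo, visible touch-ups, unique identifier" part of that proposal can be sketched with an append-only, hash-chained stroke log. This swaps in a hash chain rather than the encryption the post describes, and all names (`StrokeLog`, `add_stroke`, the brush labels) are hypothetical. Every stroke commits to the hash of the one before it, so the final hash works as the piece's identifier and editing any old stroke breaks verification. It doesn't stop someone fabricating a whole log from scratch; that would need something like signing or trusted timestamps.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first stroke

def _digest(record: dict) -> str:
    """SHA-256 over a canonical JSON encoding of one stroke record."""
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()

@dataclass
class StrokeLog:
    """Append-only stroke history. There is deliberately no undo method."""
    entries: list = field(default_factory=list)
    head: str = GENESIS

    def add_stroke(self, brush: str, points: list, color: str) -> str:
        record = {"prev": self.head, "brush": brush, "points": points, "color": color}
        self.head = _digest(record)
        self.entries.append((record, self.head))
        return self.head  # doubles as the piece's current identifier

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered stroke breaks it."""
        prev = GENESIS
        for record, digest in self.entries:
            if record["prev"] != prev or _digest(record) != digest:
                return False
            prev = digest
        return prev == self.head

log = StrokeLog()
log.add_stroke("round_8px", [[10, 10], [40, 12]], "#1a1a1a")
piece_id = log.add_stroke("flat_24px", [[5, 60], [90, 64]], "#c04020")
print("piece id:", piece_id)
print("verifies:", log.verify())  # verifies: True
```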

A quality piece made with such a tool is worthy of being referred to as an art piece. It shows the skill of the creator, rather than the skill of the programmer who implemented shortcuts. It is no lesser than traditional art, which lives and dies on the fine motor skills of real artists.

^This is the only way I could ever respect "digital art". Unfortunately, it will never be a thing as the artfags are of the mindset that the door closes behind them, and remains open until they pass through.

Finally, I keep repeating myself when I say this, but I am certain that many witch hunters know that the accused person is not using AI, and are just trying to get rid of competition. Despite claiming to be commies, most "digital artists" would make Ronald Reagan blush with how unashamedly capitalist their actions are.

Shaneequa

kiwifarms.net

- Joined

- Oct 11, 2020

It's so hard to pick a side on the AI "debate" because on one side you side with pretentious furry twitter artists on the other you side with corpos. Can we come up with a solution that fucks over both these people?

Slurred

kiwifarms.net

- Joined

- Jan 29, 2022

I'd be in favor of a GPL-type legal situation where corporations are required to make the weights of models trained with copyrighted data available for free to everyone. As an added bonus, this will also cause a meltdown among the AI safetyists.

It's so hard to pick a side on the AI "debate" because on one side you side with pretentious furry twitter artists on the other you side with corpos. Can we come up with a solution that fucks over both these people?