GPUs & CPUs & Enthusiast hardware: Questions, Discussion and fanboy slap-fights - Nvidia & AMD & Intel - Separe but Equal. Intel rides in the back of the bus.

- Thread starter Smaug's Smokey Hole


big pauper

kiwifarms.net

- Joined

- Jul 14, 2024

> Half-Life 2 didn't have real-time dynamic reflections. It used static environment maps and couldn't reflect dynamic objects. Note how the boat, trees, and those wooden beams aren't reflected (not sure if the buildings should be reflected).

of course it does, you took a 240p screenshot of somebody playing on the lowest settings. it uses a second viewpoint to render the scene twice.

why would you do that? go on the internet and tell lies?

- Joined

- Aug 28, 2019

The Mass Shooter Ron Soye said:

I feel personally attacked.

Mister Fister

kiwifarms.net

- Joined

- Nov 17, 2024

> Given that there was autistic screeching about the Quake III engine not having a software renderer, I'm gonna say..."no."

Aww these kids are experiencing their first tech jump.

big pauper

kiwifarms.net

- Joined

- Jul 14, 2024

> Aww these kids are experiencing their first tech jump.

boomers, aware their empire is crumbling, smugly ride a sinking ship into the sunset in the hopes that maybe some of the gullible youth will follow them to their demise. "it's only $2500" they say. "you won't be able to play the newest call of duty without it." but those who aren't in their youth, who remember blu-ray or PhysX, know better than to fall for this shit.

Mister Fister

kiwifarms.net

- Joined

- Nov 17, 2024

Dude, why the fuck are you strawmanning that I was suggesting buying a 5090? You seem like the person who thinks everything is shit except the select specific stuff he likes.

#lurker

kiwifarms.net

- Joined

- Mar 4, 2024

The RTX shilling in this thread is beyond parody now. Either you are trying to justify your expensive lemon, or you have shares in NVIDIA, which is it?

big pauper

kiwifarms.net

- Joined

- Jul 14, 2024

You seem like the person who thinks everything is shit except the select specific stuff he likes.

- Joined

- Jul 18, 2019

> The RTX shilling in this thread is beyond parody now. Either you are trying to justify your expensive lemon, or you have shares in NVIDIA, which is it?

You can literally get all this raytracing shit on AMD now thoughbeit, and Intel has had competitive raytracing from the beginning of Arc.

- Joined

- Jul 10, 2023

I really don't get the huge shit fit people have about RT. It's not mandatory in all games yet and by the time it becomes standard for games to always have some RT, GPUs will be equipped with enough RT cores to easily run the baseline RT.

Blame dogshit developers who are too lazy to compress textures or make their own light maps or a handful of other things devs don't spend time doing anymore. The new Monster Hunter game only has RT reflections and even with it turned off it runs like dogshit on modern hardware.

I think shitty devs/Epic (UE5) are taking less flak, at least right now, because Nvidia are gouging with their pricing and have terrible supply since they are dedicating most of their resources to datacenter now.


Mister Fister

kiwifarms.net

- Joined

- Nov 17, 2024

> I really don't get the huge shit fit people have about RT. It's not mandatory in all games yet and by the time it becomes standard for games to always have some RT, GPUs will be equipped with enough RT cores to easily run the baseline RT.
>
> Blame dogshit developers who are too lazy to compress textures or make their own light maps or a handful of other things devs don't spend time doing anymore. The new Monster Hunter game only has RT reflections and even with it turned off it runs like dogshit on modern hardware.
>
> I think shitty devs/Epic (UE5) are taking less flak, at least right now, because Nvidia are gouging with their pricing and have terrible supply since they are dedicating most of their resources to datacenter now.

The sooner we get through the games that had development started from 2020-2024 the better.

- Joined

- Nov 15, 2021

> of course it does, you took a 240p screenshot of somebody playing on the lowest settings.

Lowest settings in HL2 was DX7 mode and didn't have reflective water at all. Kind of a pointless lie for you to tell. Especially when the fact HL2 looked so good and could run on 2000 hardware was a major point of autistic screeching about Doom 3's insanely high hardware requirements, yet terrible performance, back in the day.

DX7 HL2:

Anyway, turns out I had found a DX8 video, and HL2 didn't reflect dynamic objects in DX8 mode.

> it uses a second viewpoint to render the scene twice.

So they're doing planar reflections. That would explain why the ripples look so bad, since planar reflections can't handle irregular surfaces correctly (neither can environment maps). Fortunately, better technologies have been developed in the last 20 years, notably raytracing.
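For anyone who hasn't seen how planar reflections work, here is a minimal sketch of the "second viewpoint" idea: mirror the camera across the water plane and render the scene again from there. The function names and the example camera are made up for illustration, and this is not Source's actual code, just the underlying geometry.

```python
import math


def _normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def reflect_point(point, plane_point, plane_normal):
    """Mirror a position across the plane through plane_point with the given normal."""
    n = _normalize(plane_normal)
    # Signed distance from the point to the plane along the normal.
    d = sum((p - q) * c for p, q, c in zip(point, plane_point, n))
    return tuple(p - 2.0 * d * c for p, c in zip(point, n))


def reflect_direction(direction, plane_normal):
    """Mirror a direction vector across a plane with the given normal."""
    n = _normalize(plane_normal)
    d = sum(a * c for a, c in zip(direction, n))
    return tuple(a - 2.0 * d * c for a, c in zip(direction, n))


# Hypothetical setup: flat water at y = 0, camera above it looking down and forward.
water_point = (0.0, 0.0, 0.0)
water_normal = (0.0, 1.0, 0.0)
camera_pos = (0.0, 5.0, 10.0)
camera_dir = (0.0, -0.5, -1.0)

# The second render pass uses this mirrored camera; the result is
# then projected onto the water surface as the reflection texture.
mirror_pos = reflect_point(camera_pos, water_point, water_normal)
mirror_dir = reflect_direction(camera_dir, water_normal)
```

Because the mirror camera is derived from one flat plane, ripples can only be faked by distorting the lookup into that flat reflection, which is the limitation mentioned above.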

- Joined

- Dec 17, 2019

> Lowest settings in HL2 was DX7

Yeah and nowadays DX9 looks like this and will run at locked 60fps on a 1060 so what's your point? That we hallucinated everything between DX7 HL2 quality in 2004 and GeForce 6 series and today's ray tracing and RTX 50 series so we have to unanimously buy into ray tracing now?

Why even bring up DX7 in the context of this discussion? Because you can't defend ray tracing over rasterization in 2025 any other way? Because back in 2004 Valve managed to create rasterization techniques that can compete with ray tracing with the hardware that we have today, and your only two lines of defense are "b-but DX7" and "muh ripples" as if you couldn't achieve convincing-looking ripples and reflections with rasterization at a fraction of the computing power if you were to use modern game engines, programming techniques and hardware?

But please, do pull another strawman while completely missing the point that was made and have Mister Fister yes man you for another ten pages. Surely you'll be able to defend the honor of ray tracing soon enough!

> You seem like the person who thinks everything is shit except the select specific stuff he likes.

Gee, wanna know how you two come off with your ray tracing defense?

Forward rendered games feel more comfy, prove me wrong.

We can't even do mirrors at full resolution without game shitting itself:

#lurker

kiwifarms.net

- Joined

- Mar 4, 2024

> Lowest settings in HL2 was DX7 mode and didn't have reflective water at all.

All you do is move goalposts. Perfect example: people point out that HL2 did use reflections without your god (ray tracing), and instead of conceding you move to muh DirectX which is irrelevant.

but muh ripples! I actually think HL2's water still looks amazing to this day.

- Joined

- Dec 17, 2019

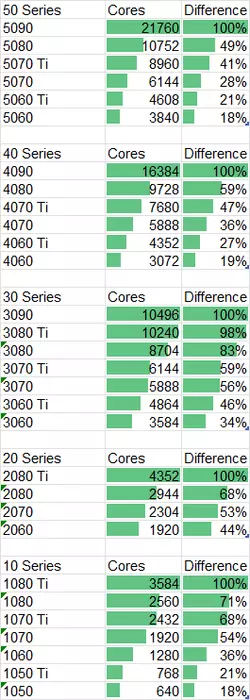

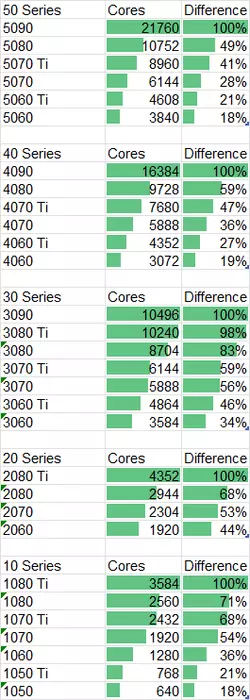

I've decided to make a rough Excel chart that compares how much Nvidia cut down the CUDA cores from the highest consumer SKU to the lowest, relative to the naming scheme.

I have excluded the 3090 Ti, as it was essentially a Titan-class chip, as well as all the oddballs like the Ti SUPER cards, so that it's a direct comparison between generations using the naming scheme of the 10 series.
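If you want to redo the comparison without Excel, a rough sketch of the arithmetic is below. The core counts are public spec-sheet figures as I remember them, not values read off the chart, and picking the 1080 Ti and 5090 as the flagships is my assumption, so double-check both before quoting the results.

```python
def share_of_flagship(cores_by_sku, flagship):
    """Return each SKU's CUDA core count as a percentage of the flagship's."""
    top = cores_by_sku[flagship]
    return {
        sku: round(100.0 * cores / top, 1)
        for sku, cores in cores_by_sku.items()
    }


# Assumed figures from public spec sheets; verify before relying on them.
gtx_10_series = {
    "1080 Ti": 3584,
    "1080": 2560,
    "1070": 1920,
    "1060 6GB": 1280,
}
rtx_50_series = {
    "5090": 21760,
    "5080": 10752,
    "5070 Ti": 8960,
    "5070": 6144,
}

pascal = share_of_flagship(gtx_10_series, "1080 Ti")
blackwell = share_of_flagship(rtx_50_series, "5090")

for name, table in (("10 series", pascal), ("50 series", blackwell)):
    print(name)
    for sku, pct in table.items():
        print(f"  {sku}: {pct}% of flagship cores")
```

Comparing the same tier name across the two tables (for example the xx80 row) shows how much further down the stack each name now sits.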

Just Some Other Guy

kiwifarms.net

- Joined

- Mar 25, 2018

Man look at how sad and pathetic xx70 and xx60 and now even xx80 cards have become. But it's fine because niggertech is here to make it all better.I've decided to make a rough Excel chart that compares how much Nvidia cut down on the CUDA cores from the highest consumer SKU's to the lowest, comparatively to the naming scheme.

View attachment 7130430

I have excluded the 3090Ti as it was essentially a Titan class chip, as well as all the oddballs like the Ti SUPER cards so that it's a direct comparison between the generation naming scheme of the 10 series.

Mister Fister

kiwifarms.net

- Joined

- Nov 17, 2024

> I've decided to make a rough Excel chart that compares how much Nvidia cut down on the CUDA cores from the highest consumer SKU's to the lowest, comparatively to the naming scheme.
>
> View attachment 7130430
>
> I have excluded the 3090Ti as it was essentially a Titan class chip, as well as all the oddballs like the Ti SUPER cards so that it's a direct comparison between the generation naming scheme of the 10 series.

Definitely happy to see the 9070 and 9070XT perform well and sell well.

- Joined

- Nov 15, 2021

> Forward rendered games feel more comfy, prove me wrong.
>
> We can't even do mirrors at full resolution without game shitting itself:

GPUs are for lazy devs.

- Joined

- Jan 1, 2025

> Yeah and nowadays DX9 looks like this and will run at locked 60fps on a 1060 so what's your point? That we hallucinated everything between DX7 HL2 quality in 2004 and GeForce 6 series and today's ray tracing and RTX 50 series so we have to unanimously buy into ray tracing now?

To drive your point home, that shit was running at locked 60 fps on a fucking GTX 260 at 2560x1600 resolution.

This churn with graphics technologies is annoying. They figure out some techniques to get a nice result, but they're too expensive for contemporary hardware, so they use them sparingly. Then we get better hardware and you can run those effects like it's nothing, but by this point they came up with something new that maybe looks 50% better for 200% the cost. Rinse and repeat and we end up with hardware that's got literally 100x the performance and little to show for it.

Like, just give me PS3/PS4 transition era tech that's just running without any restraint. You couldn't bake lighting in cases with too many lights that go on/off because it took too long and required too much VRAM? Good news 2010 nigga we got 24GB now, go crazy