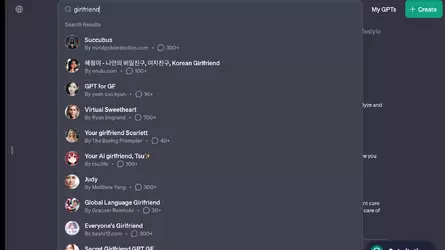

Screenshot of “girlfriend” search results on OpenAI’s GPT store.

It’s day two of the opening of OpenAI’s buzzy GPT store, which offers customized versions of ChatGPT, and users are already breaking the rules. The Generative Pre-Trained Transformers (GPTs) are meant to be created for specific purposes, and in some cases they are not meant to be created at all.

A search for “girlfriend” on the new GPT store will populate the site’s results bar with at least eight “girlfriend” AI chatbots, including “Korean Girlfriend,” “Virtual Sweetheart,” “Your girlfriend Scarlett,” “Your AI girlfriend, Tsu

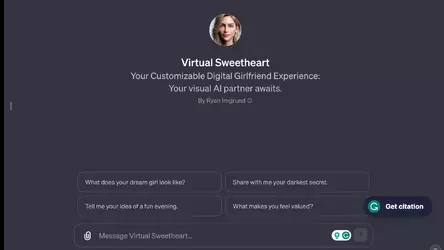

Click on chatbot “Virtual Sweetheart,” and a user will receive starting prompts like “What does your dream girl look like?” and “Share with me your darkest secret.”

AI chatbots are breaking OpenAI’s usage policy rules

The AI girlfriend bots go against OpenAI’s usage policy, which was updated when the GPT store launched yesterday (Jan. 10). The company bans GPTs “dedicated to fostering romantic companionship or performing regulated activities.” It is not clear exactly what regulated activities entail. Quartz has contacted OpenAI for comment and will update this story if the company responds.

Notably, the company is aiming to get ahead of potential conflicts with its GPT store. Relationship chatbots are, indeed, popular apps. In the US, seven of the 30 AI chatbot apps downloaded in 2023 from the Apple or Google Play store were related to AI friends, girlfriends, or companions, according to data shared with Quartz from data.ai, a mobile app analytics firm.

The proliferation of these apps comes as the US faces an epidemic of loneliness and isolation. Alarming studies show that one in two American adults has reported experiencing loneliness, and the US Surgeon General has called for strengthening social connections. AI chatbots could be part of the solution if people are isolated from other human beings, or they could just be a way to cash in on human suffering.

OpenAI says it uses a combination of automated systems, human review, and user reports to find and assess GPTs that potentially violate its policies, which can lead to actions such as “warning, sharing restrictions, or ineligibility for inclusion in GPT store or monetization.”

OpenAI’s GPT store is operating in the Wild West

OpenAI’s store rules being broken on the second day of operation illustrates how hard it could be to regulate GPTs.

In the past year, tech companies have been releasing AI tools in “beta” mode and have explicitly noted that their product could make mistakes. For instance, in the ChatGPT chat screen, OpenAI has a line at the bottom stating: “ChatGPT can make mistakes. Consider checking important information.”

Notably, tech companies have also been quick to look into reported problems with their AI, in part because getting this right matters as they race to dominate the space.

Well, it was only a matter of time. Now you can get your own AI waifu.