The patterns of the stars, their meanings, and what physical utility we can divine from them have fascinated our ancestors since time immemorial. From Aztec and Mayan temples, perfectly astronomically aligned and built independently of the star charts of the western and eastern old worlds, to the sailing methods of global explorers, the logic of the stars has enthralled us for millennia. The earliest known computer, the Antikythera Mechanism, was a mechanism of gears that ran through the zodiac our ancestors divined; it could predict eclipses and was used to keep finer track of events. It's speculated, based on the man's known research, that Hipparchus of Rhodes was the craftsman of the mechanism, or at least played a role in its creation. This creation would become the foundation for the mechanism of the timepiece and clocktower alike.

Later on, after empires and nations had fallen and risen, the Arabs, in their quest for sacred knowledge and endless supplies of coffee, would create both the concept of advanced algebra and the Zairja, a device for generating ideas by mechanical means, based on the concept of synchronicity and eternal recurrence. It has been suggested that the Catalan-Majorcan mathematician Ramon Llull became familiar with the Zairja in his travels and studies of Arab culture, and used it as a prototype for his invention of the Ars Magna. The Ars Magna is one of the works of Ramon that would come to be known as a foundation of computation theory.

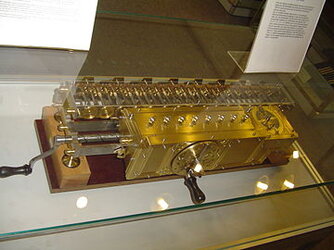

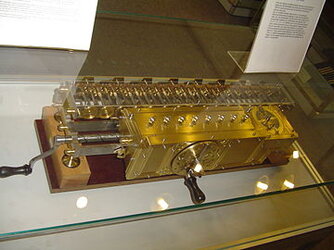

Boiling down from this rather outlandish chart even further, we find Gottfried Leibniz's Calculus Ratiocinator, which is almost a form of proto-AI, but more so the theoretical anticipator of the mathematically-set algebra of logic itself. We can see the concepts combining into a sort of esoteric watch, but we know now that we were seeing the skeleton of the modern computer come into being in the duchy of Saxony during the 17th century. If only they knew then how far their ideas and inventions could and would be pushed. As well, I implore you to study Leibniz's works; the man was a real big-brain individual.

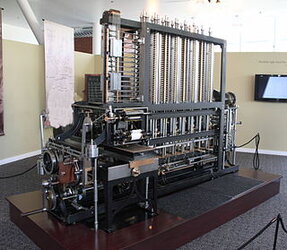

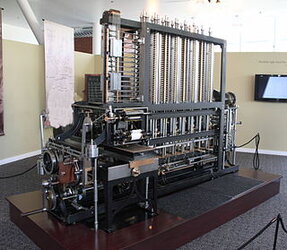

These ideas would eventually be compiled into an even greater form, the foundation for the complex computers we would come to use every day, Charles Babbage's Difference Engine, and be refined further through concepts of logic and classification such as Begriffsschrift and the Dewey Decimal System. The principle of a difference engine is Newton's method of divided differences. It's an absolutely genius invention, but, running off of polynomial calculation, its calculation ability was still complex and not without redundancies. Through minds like mathematician David Hilbert, we would reduce computational math down to simpler and more secure archetypes. However, it would be our cracking into deeper and deeper realms of microscopic control that unlocked the real estate needed to really let binary code, something all life can be boiled down to through our genes, through the very presence or absence of matter in a given space, allow infinite potential for creation.
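For anyone curious what "running off of polynomial calculation" looks like in practice, here is a rough sketch of the method of differences in Python. It is only an illustration of the principle, not a model of Babbage's actual hardware: the function names `initial_differences` and `tabulate` are made up for this post, and the polynomial x^2 + x + 41 is just an example I picked. The point is that once the first few values are known, every later value comes from additions alone, with no multiplication.

```python
def initial_differences(seed_values):
    """Build the starting registers: the first value, then the first entry
    of each successive row of differences."""
    registers = []
    row = list(seed_values)
    while row:
        registers.append(row[0])
        # Next row holds the differences between neighbouring entries.
        row = [b - a for a, b in zip(row, row[1:])]
    return registers


def tabulate(seed_values, count):
    """Return `count` values of the polynomial using additions only.

    Assumes `seed_values` holds degree + 1 consecutive values of the
    polynomial, which is enough to fix all of its differences.
    """
    registers = initial_differences(seed_values)
    results = []
    for _ in range(count):
        results.append(registers[0])
        # Each register absorbs the one after it, top to bottom.
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return results


# Seed with p(0), p(1), p(2) for the example polynomial p(x) = x^2 + x + 41.
table = tabulate([41, 43, 47], 6)
print(table)
```

The three seed values give the registers 41, 2, 2, and from there each new table entry costs just two additions. That is the whole trick, and it is why a machine made only of adding wheels could crank out tables of polynomial values.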

Well, now you're caught up. Next time your computer's beeping and booping, give it a little appreciation for its history.


<To sneed or not to sneed, that is the question
