People don't get how insanely powerful hardware is now

What really drives this home for me every time is seeing small microcontrollers for 5-10 bucks that, performance-wise, run circles around the first few generations of "serious" computers I owned, which cost thousands and were state of the art. I know my smartphone etc. is also more powerful than those machines, but somehow these MCUs really drive it home for me. They're even multicore now! The things we would've made if we'd had them back then. One of the first microcontrollers I programmed had 256 bytes (not KB) of RAM. These powerful MCUs are in such mundane everyday items, often doing simple tasks that are way below what they're capable of, but building more primitive chips or cutting features simply isn't worth it. Somehow that's even crazier to me than smartphones.

Can't find anything about that IP704 chip besides that link.

My guess is that it never left the concept/prototype stage. A lot of stuff has also disappeared off the internet. All the big players stopped financing AI, and things sort of fizzled out in the 90s, with holdovers into the 00s. When you read about the history of AI, you get the impression that people shouted "AI is over! Stop your work now!" from the rooftops on 31 Dec 1989, but it was really more of a gradual decline in interest and financing that had already started in the 80s. The funny thing is that the goals for which investors financed these companies were often a very vague "make computers intelligent". Sound familiar? That said, expert systems never really disappeared. They're still all over scientific papers and are probably still represented in a lot of corporations' internal software tooling to this day.

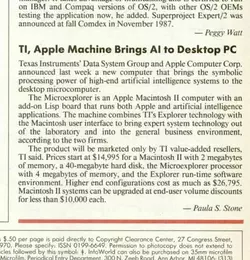

There were also offerings like the TI Explorer, which was basically an AI/Lisp CPU card by TI (these terms were really interchangeable at the time).

(The picture is from an article about someone putting together such a machine, IIRC. I don't have a link on hand, but I'm pretty sure you can find it easily by googling these terms. Talk about this stuff is very rare, but this was a product that actually shipped and that people still own today, so you can find pictures.)

This stuff is rare because it didn't sell. Non-specialized systems got cheaper and faster, so even if you wanted to build expert systems, you simply didn't need dedicated hardware.

The current AI iteration has gotten much farther than that one ever did. We made a HUGE jump in NLP, which has been the holy grail of computing since computers conceptually existed and had seen pretty much no usable progress until very recently. I can give an LLM a story or a poem I wrote (so completely original text), and not only can it process and summarize it, it can also interpret meaning and subtext, probably better than the majority of humans on earth. It can then give me its results in natural human language and even clarify them or answer questions about them. That is huge. It's crazy how blasé people are about it, or how they argue semantics while the actual results stare them in the face.

Crazy how Japan kept going through the AI winter and then completely missed the current AI train.

This is pretty much true for most of the West. Nobody wants to hear it, but all the good stuff and papers are coming out of China right now.

EDIT: Also found this on my hard drive, probably from the same article: