Article / Archive

Cloudflare, one of the top web and internet services businesses, is having trouble again. In this storm, Cloudflare Dashboard and its related application programming interfaces (API) have gone down. The silver lining to this trouble is that these issues are not affecting the serving of cached files via the Cloudflare Content Delivery Network (CDN) or the Cloudflare Edge security features. No, those had gone down last week.

On Oct. 30. Cloudflare had rolled out a failed update to its globally distributed key-value store, Workers KV. The result was that all of Cloudflare's services were down for 37 minutes. Today's problem isn't nearly as serious, but it's been going on for several hours.

As of 7:15 PM Eastern time, Cloudflare reported, that it was "seeing gradual improvement to affected services."

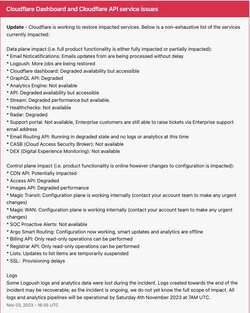

Still, it's bad enough. Cloudflare disclosed that the snags have affected a slew of products at the data plane and edge level. These include Logpush, WARP / Zero Trust device posture, Cloudflare dashboard, Cloudflare API, Stream API, Workers API, and Alert Notification System.

Other programs are still running, but you can't modify their settings. These are Magic Transit, Argo Smart Routing, Workers KV, WAF, Rate Limiting, Rules, WARP / Zero Trust Registration, Waiting Room, Load Balancing and Healthchecks, Cloudflare Pages, Zero Trust Gateway, DNS Authoritative and Secondary, Cloudflare Tunnel, Workers KV namespace operations, and Magic WAN.

Cloudflare failures are a big deal. As John Engates, Cloudflare's field CTO, recently tweeted, "Cloudflare processes about 26 million DNS queries every SECOND! Or 68 trillion/month. Plus, we blocked an average of 140 billion cyber threats daily in Q2'23."

The root cause of these problems is a data center power failure combined with a failure of services to switch over from data centers having trouble to those still functioning.

Late in the day, Cloudflare gave ZDNET a fuller explanation of what happened:

Trust me, Cloudflare really wants to fix this as soon as possible. Cloudflare's earning call is today.

-

By the way, unrelated, if you check cloudflarestatus.com right now, A LOT is affected. A LOT. Ouch.

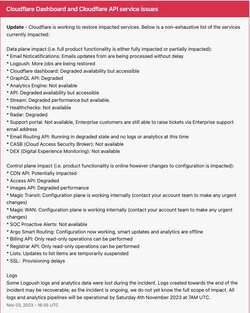

EDIT: better picture (thanks)

Cloudflare, one of the top web and internet services businesses, is having trouble again. In this storm, Cloudflare Dashboard and its related application programming interfaces (API) have gone down. The silver lining to this trouble is that these issues are not affecting the serving of cached files via the Cloudflare Content Delivery Network (CDN) or the Cloudflare Edge security features. No, those had gone down last week.

On Oct. 30. Cloudflare had rolled out a failed update to its globally distributed key-value store, Workers KV. The result was that all of Cloudflare's services were down for 37 minutes. Today's problem isn't nearly as serious, but it's been going on for several hours.

As of 7:15 PM Eastern time, Cloudflare reported, that it was "seeing gradual improvement to affected services."

Still, it's bad enough. Cloudflare disclosed that the snags have affected a slew of products at the data plane and edge level. These include Logpush, WARP / Zero Trust device posture, Cloudflare dashboard, Cloudflare API, Stream API, Workers API, and Alert Notification System.

Other programs are still running, but you can't modify their settings. These are Magic Transit, Argo Smart Routing, Workers KV, WAF, Rate Limiting, Rules, WARP / Zero Trust Registration, Waiting Room, Load Balancing and Healthchecks, Cloudflare Pages, Zero Trust Gateway, DNS Authoritative and Secondary, Cloudflare Tunnel, Workers KV namespace operations, and Magic WAN.

Cloudflare failures are a big deal. As John Engates, Cloudflare's field CTO, recently tweeted, "Cloudflare processes about 26 million DNS queries every SECOND! Or 68 trillion/month. Plus, we blocked an average of 140 billion cyber threats daily in Q2'23."

The root cause of these problems is a data center power failure combined with a failure of services to switch over from data centers having trouble to those still functioning.

Late in the day, Cloudflare gave ZDNET a fuller explanation of what happened:

Cloudflare is still working to resolve this problem. But, since the problem was with data center power outages rather than its software, solving it may be outside its control. Hang in there, folks. Fixing this may take a while.We operate in multiple redundant data centers in Oregon that power Cloudflare's control plane (dashboard, logging, etc). There was a regional power issue that impacted multiple facilities in the region. The facilities failed to generate power overnight. Then, this morning, there were multiple generator failures that took the facilities entirely offline. We have failed over to our disaster recovery facility and most of our services are restored. This data center outage impacted Cloudflare's dashboards and APIs, but it did not impact traffic flowing through our global network. We are working with our data center vendors to investigate the root cause of the regional power outage and generator failures. We expect to publish multiple blogs based on what we learn and can share those with you when they're live.

Trust me, Cloudflare really wants to fix this as soon as possible. Cloudflare's earning call is today.

-

By the way, unrelated, if you check cloudflarestatus.com right now, A LOT is affected. A LOT. Ouch.

EDIT: better picture (thanks)
