
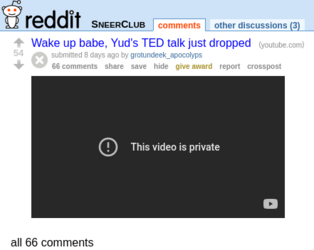

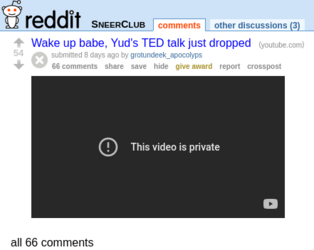

Apparently Yud's TED Talk was posted a week ago, but unfortunately, it was quickly set to private, and I can't find any archives.

source (a)

SneerClub will always be an inferior version of Kiwi Farms solely because none of them ever archive shit. Their reactions, of course, are all laughing at EY.

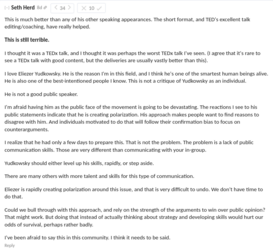

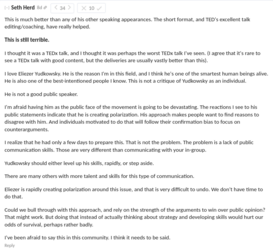

According to the LessWrong post, it was accidentally published early by a TEDx channel and will eventually be published to the main TED channel. Speaking of which, even the top comment on the LW post is critical of Yuddo for being too much of a sperg:

source (a)

SneerClub rarely posts about TheMotte these days so it's a pleasant surprise that I found this post. Prepare for some primo cow on cow violence!

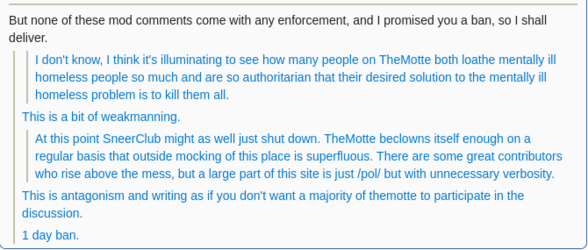

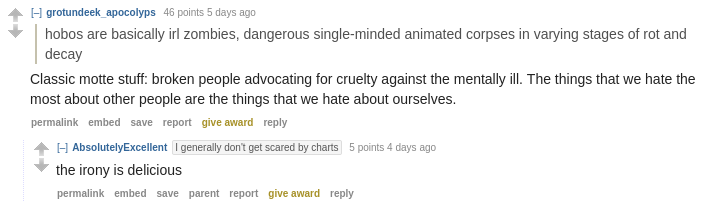

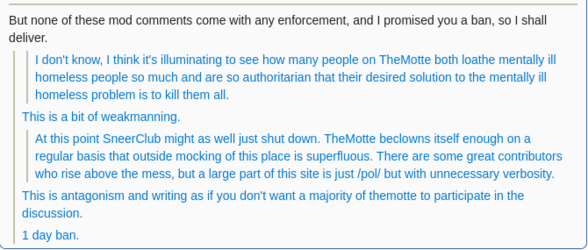

First, the OP covers how a poster comparing homeless people to human garbage and wanting them killed got warned but not banned, while a poster criticising TheMotte for it got banned. Sneer!

SneerClub roundly mocked the moderation.

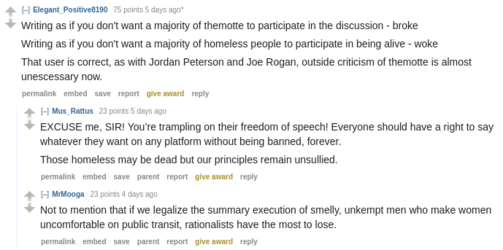

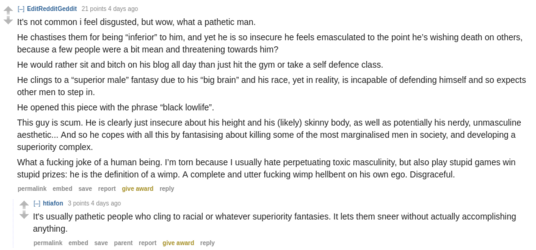

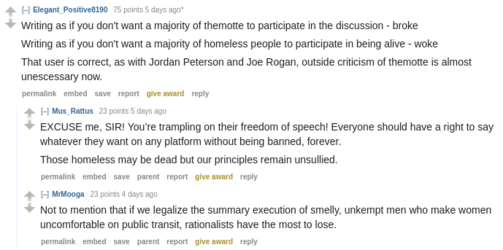

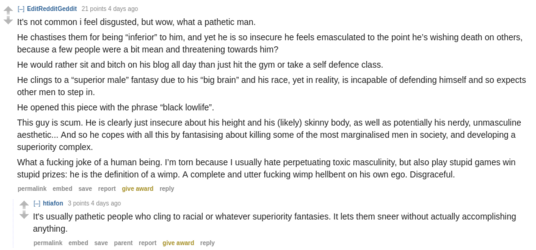

They also mocked the poster while making sure not to "perpetuate" "toxic masculinity":

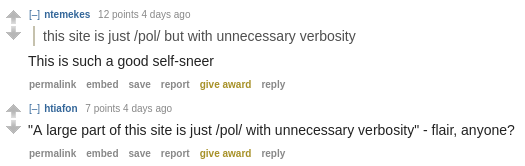

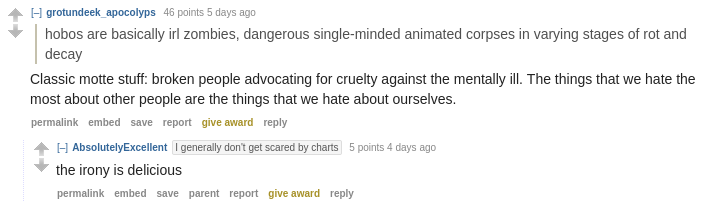

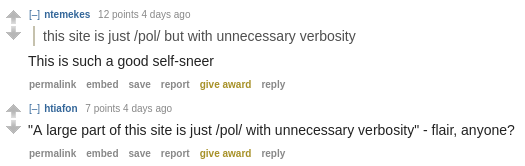

The motte poster's /pol/ comparison was on point!

And homeless street criminals are worth more than Motte posters:

The best part, though, is this slapfight that broke out in the comments. Unfortunately, it has been partially deleted by censorious jannies, and the Pushshift API got banned by a censorious Reddit, so I'm not sure what's been deleted.

There is a lot more in the comments but I'm not going to screencap everything so I'll leave it at that.

source (a)
