- Joined Nov 15, 2021

I’d still rather save power by parking unused chiplets than by making some of the cores less powerful. AMD showed with the 7900X3D and 7950X3D that this can be done and that it works rather well.

You can fit more compute on a die, and deliver more compute per watt, with small, slow cores than with big, fast ones. That's the principle behind GPUs. Big, fast cores are better at executing arbitrary code, though. A hybrid design gets you both, so IMO hybrid was the right way to go.

> E-core design may be the future

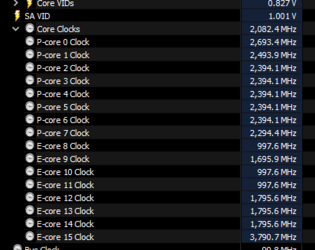

E-core design is the present. It's also the future for at least the next two generations (Lunar Lake and Arrow Lake both have E-cores, and so does Zen 5, at least on laptops). IDK where you're getting the idea that it doesn't work. Works for me:

Alright man, I relent. I was talking out of my ass and passed off my personal taste as business analysis. They just aren't making the desktop CPUs I want them to make.

I make the same mistake sometimes. Apparently, people are buying Sapphire Rapids. I think it's shit. But I guess it's just shit for what I want to do.