Huh? Some of these CPUs are still extremely low wattage for what they do. That 7800X3D running at about 50 W for top-end gaming performance is pretty incredible. There's nothing eye-watering about it.

It's running at 50 W because it's barely doing anything. Saturate the chip, and then tell me what the numbers are. The touted efficiency of AMD chips is nothing more than the fact that TSMC's N5 has higher density than N7 or Intel 7. I mean, good on AMD for spinning off GloFo and going to TSMC, but that was a good business decision, not a design achievement.

The way AMD has been taking advantage of TSMC's manufacturing advantage over Intel wins zero prizes for efficiency. They're taking the same old, bloated x86_64 core designs, bloating them up even more, and driving the IPC up. That's not efficient design. We're at the point now in chip design where every additional stage added to a branch predictor, and every watt burned to push the IPC up, has insanely diminishing returns.

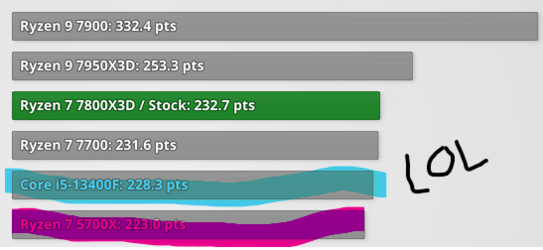

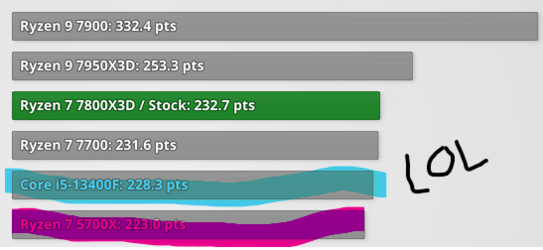

Let me illustrate. This is the Cinebench rating (which is more meaningful than gaming "frames per watt," since Cinebench actually keeps the CPU fairly busy):

What you are seeing here is that the rated multithread efficiency of a chip fabricated on TSMC's N5 node is barely ahead of an Intel chip fabbed on Intel 7, and another AMD chip fabbed on TSMC N7. In fact, the 7800X3D is barely ahead of the 7700, so it doesn't look like saving yourself some round trips to the DIMMs is really saving you a meaningful amount of power.
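For anyone who wants to check this kind of comparison themselves, here's a minimal sketch of the arithmetic, assuming "efficiency" means benchmark points per watt of average package power. I don't know that the chart was computed exactly this way, and the function names and inputs below are made up for illustration, not measurements of any real chip.

```python
def points_per_watt(score: float, avg_power_w: float) -> float:
    """Benchmark points delivered per watt of average package power."""
    if avg_power_w <= 0:
        raise ValueError("average power must be positive")
    return score / avg_power_w


def relative_lead(a: float, b: float) -> float:
    """Fractional lead of a over b (0.05 means a is 5% ahead of b)."""
    return a / b - 1.0


# Placeholder inputs, NOT measurements of any real chip.
chip_a = points_per_watt(score=18000, avg_power_w=90)
chip_b = points_per_watt(score=15000, avg_power_w=80)
print(f"A leads B by {relative_lead(chip_a, chip_b):.1%} in points per watt")
```

Plug in the multithread score and the average power under that same load for each chip. The point is that a lead in raw score and a lead in points per watt are two different numbers.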

If AMD had efficient designs, in TechPowerUp's chart, all of the chips at the top should be from AMD. Zero Intel chips should be anywhere in the running. Intel's core engineers are hobbled by their inferior manufacturing, so they've been forced to find new efficiencies just to keep the company alive. So the fact that we see so many of those big.LITTLE-style designs on the top half of the efficiency chart shows just how wasteful x86 cores had gotten for parallel workloads.

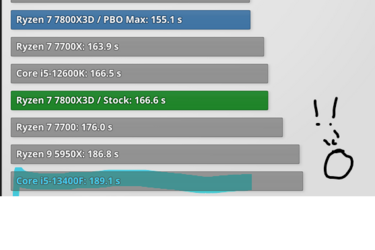

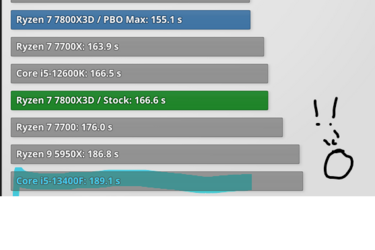

This is from the COMSOL physics chart. A $200 i5 rated at 65 W fabbed on Intel 7 should not be anywhere close to a Ryzen 7 fabbed on TSMC N5. Yeah, it's 15% slower. Point is, it shouldn't be. It should be getting its shit pushed in.

The only time modern CPUs get anywhere near that is when you decide to go full OC/PBO and max out all the threads for work. Then they blow Apple shit out of the water.

There isn't an x86-based design in the same league as aarch64 when it comes to performance per watt, and there can't ever be, because an x86 chip will always take a hit to decode CISC instructions. And then Apple silicon is the best of the aarch64 designs out there, since you don't have to pay nearly as much energy to move data.

Yes, I hated the initial AM5 release where they just ran everything maxed out and said "nah, 95C is fine," but then it turns out you can turn on eco mode in BIOS and have almost no real performance drop while cutting the power draw and temps significantly.

Right, because volting the shit out of a CPU so you can win online dick-measuring contests, which is what AMD is doing, isn't very efficient. Great for selling chips, though. Any time I see some manufacturer bragging about the "efficiency" of a chip that draws enough power to heat a jacuzzi, I just roll my eyes.

My criticisms apply just as much to Intel's i9-13900K, FWIW. It consumes more power than my entire laundry room to get something like a 10% uplift over the high-end AMD chips.