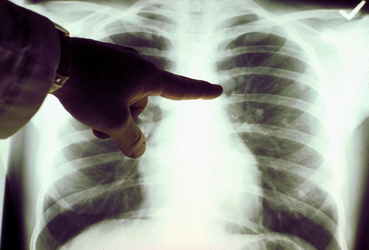

A doctor can’t tell if somebody is Black, Asian, or white, just by looking at their X-rays. But a computer can, according to a surprising new paper by an international team of scientists, including researchers at the Massachusetts Institute of Technology and Harvard Medical School.

The study found that an artificial intelligence program trained to read X-rays and CT scans could predict a person’s race with 90 percent accuracy. But the scientists who conducted the study say they have no idea how the computer figures it out.

“When my graduate students showed me some of the results that were in this paper, I actually thought it must be a mistake,” said Marzyeh Ghassemi, an MIT assistant professor of electrical engineering and computer science, and coauthor of the paper, which was published Wednesday in the medical journal The Lancet Digital Health. “I honestly thought my students were crazy when they told me.”

At a time when AI software is increasingly used to help doctors make diagnostic decisions, the research raises the unsettling prospect that AI-based diagnostic systems could unintentionally generate racially biased results. For example, an AI with access to X-rays could automatically recommend a particular course of treatment for all Black patients, whether or not it's best for a specific person. Meanwhile, the patient's human physician wouldn't know that the AI had based its recommendation on the patient's race.

The research effort was born when the scientists noticed that an AI program for examining chest X-rays was more likely to miss signs of illness in Black patients. “We asked ourselves, how can that be if computers cannot tell the race of a person?” said Leo Anthony Celi, another coauthor and an associate professor at Harvard Medical School.

The research team, which included scientists from the United States, Canada, Australia, and Taiwan, first trained an AI system using standard data sets of X-rays and CT scans, where each image was labeled with the person’s race. The images came from different parts of the body, including the chest, hand, and spine. The diagnostic images examined by the computer contained no obvious markers of race, like skin color or hair texture.

Once the software had been shown large numbers of race-labeled images, it was then shown different sets of unlabeled images. The program identified the race of the people in those images with remarkable accuracy, often well above 90 percent. Even when it analyzed images from people of the same size, age, or gender, the AI accurately distinguished between Black and white patients.

But how? Ghassemi and her colleagues remain baffled, but she suspects it has something to do with melanin, the pigment that determines skin color. Perhaps X-ray machines and CT scanners detect the higher melanin content of darker skin and embed this information in the digital image in some way that human readers have never noticed. It'll take a lot more research to be sure.

Could the test results amount to proof of innate differences between people of different races? Alan Goodman, a professor of biological anthropology at Hampshire College and coauthor of the book "Racism Not Race," doesn't think so. Goodman expressed skepticism about the paper's conclusions and said he doubted other researchers would be able to reproduce the results. But even if they do, he thinks it's all about geography, not race.

Goodman said geneticists have found no evidence of substantial racial differences in the human genome. But they do find major differences between people based on where their ancestors lived.

“Instead of using race, if they looked at somebody’s geographic coordinates, would the machine do just as well?” asked Goodman. “My sense is the machine would do just as well.”

In other words, an AI might be able to determine from an X-ray that one person’s ancestors were from northern Europe, another’s from central Africa, and a third person’s from Japan. “You call this race. I call this geographical variation,” said Goodman. (Even so, he admitted it’s unclear how the AI could detect this geographical variation merely from an X-ray.)

In any case, Celi said doctors should be reluctant to use AI diagnostic tools that might automatically generate biased results.

“We need to take a pause,” he said. “We cannot rush bringing the algorithms to hospitals and clinics until we’re sure they’re not making racist decisions or sexist decisions.”

https://www.bostonglobe.com/2022/05...i-can-recognize-race-x-rays-nobody-knows-how/