ScienceDaily (archive.today)

4 Jul 2025 02:29:40 UTC

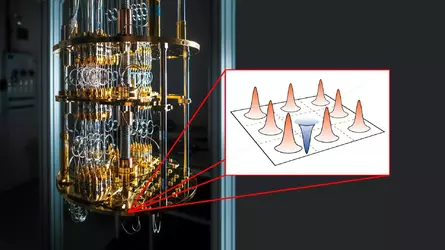

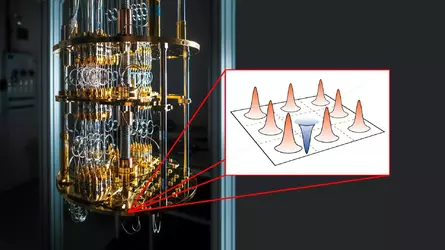

Quantum computers can perform complex computations thanks to their ability to represent an enormous number of different states at the same time in a so-called quantum superposition. These superpositions of states are incredibly difficult to describe. Now, a research team has found a relatively simple method to simulate some relevant quantum superpositions of states. The illustration shows one of these superpositions, which can be created inside what’s known as a continuous-variable quantum computer. The team was able to observe how these states change when they interact with each other, and they were also able to simulate those changes using wave-like patterns - like the ones you see in the image. Credit: Chalmers University of Technology | Cameron Calcluth

Quantum computers still face a major hurdle on their path to practical use: their limited ability to correct the computational errors that arise. To develop truly reliable quantum computers, researchers must be able to simulate quantum computations using conventional computers to verify their correctness - a vital yet extraordinarily difficult task. Now, in a world-first, researchers from Chalmers University of Technology in Sweden, the University of Milan, the University of Granada, and the University of Tokyo have unveiled a method for simulating specific types of error-corrected quantum computations - a significant leap forward in the quest for robust quantum technologies.

Quantum computers have the potential to solve complex problems that no supercomputer today can handle. In the foreseeable future, quantum technology's computing power is expected to revolutionise fundamental ways of solving problems in medicine, energy, encryption, AI, and logistics.

Despite these promises, the technology faces a major challenge: the need to correct the errors that arise during a quantum computation. While conventional computers also experience errors, these can be quickly and reliably corrected using well-established techniques before they can cause problems. In contrast, quantum computers are subject to far more errors, which are also harder to detect and correct. Quantum systems are still not fault-tolerant and therefore not yet fully reliable.

To verify the accuracy of a quantum computation, researchers simulate - or mimic - the calculations using conventional computers. One particularly important type of quantum computation that researchers are therefore interested in simulating is one that can withstand disturbances and effectively correct errors. However, the immense complexity of quantum computations makes such simulations extremely demanding - so much so that, in some cases, even the world's best conventional supercomputer would take the age of the universe to reproduce the result.

Researchers from Chalmers University of Technology, the University of Milan, the University of Granada and the University of Tokyo have now become the first in the world to present a method for accurately simulating a certain type of quantum computation that is particularly suitable for error correction, but which thus far has been very difficult to simulate. The breakthrough tackles a long-standing challenge in quantum research.

"We have discovered a way to simulate a specific type of quantum computation where previous methods have not been effective. This means that we can now simulate quantum computations with an error correction code used for fault tolerance, which is crucial for being able to build better and more robust quantum computers in the future," says Cameron Calcluth, PhD in Applied Quantum Physics at Chalmers and first author of a study recently published in Physical Review Letters.

Error-correcting quantum computations - demanding yet crucial

The limited ability of quantum computers to correct errors stems from their fundamental building blocks - qubits - which have the potential for immense computational power but are also highly sensitive. The computational power of quantum computers relies on the quantum mechanical phenomenon of superposition, meaning qubits can simultaneously hold the values 1 and 0, as well as all intermediate states, in any combination. The computational capacity increases exponentially with each additional qubit, but the trade-off is their extreme susceptibility to disturbances.
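The exponential growth mentioned above is also what makes brute-force simulation on a conventional computer so costly. As a rough illustration only, the sketch below counts the memory needed to store one complex number per basis state of an n-qubit register. The function name and the choice of 16 bytes per amplitude (a double-precision complex number) are assumptions made for this example, not details from the study.

```python
def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Bytes needed to store all 2**n complex amplitudes of an n-qubit state.

    Assumes one double-precision complex number (16 bytes) per amplitude.
    """
    return (2 ** num_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    # Each extra qubit doubles the storage requirement.
    for n in (10, 30, 50):
        gib = statevector_bytes(n) / 2 ** 30
        print(f"{n} qubits: {gib:,.6f} GiB")
```

Under these assumptions, 30 qubits already need 16 GiB, and every additional qubit doubles that figure.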

"The slightest noise from the surroundings in the form of vibrations, electromagnetic radiation, or a change in temperature can cause the qubits to miscalculate or even lose their quantum state, their coherence, thereby also losing their capacity to continue calculating," says Calcluth.

To address this issue, error correction codes are used to distribute information across multiple subsystems, allowing errors to be detected and corrected without destroying the quantum information. One way is to encode the quantum information of a qubit into the multiple - possibly infinite - energy levels of a vibrating quantum mechanical system. This is called a bosonic code. However, simulating quantum computations with bosonic codes is particularly challenging because of the multiple energy levels, and researchers have been unable to reliably simulate them using conventional computers - until now.
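The idea of spreading information over several subsystems can be illustrated with a much simpler, purely classical toy: the three-bit repetition code. This is not a bosonic code and not the researchers' method; it is a minimal sketch, with hypothetical function names, of how parity checks can locate a single flipped bit without ever reading out the stored value itself.

```python
def encode(bit: int) -> list[int]:
    """Spread one logical bit over three physical bits."""
    return [bit, bit, bit]


def syndrome(block: list[int]) -> tuple[int, int]:
    """Parity checks on neighbouring pairs; they reveal where an error
    sits but say nothing about the encoded value."""
    return (block[0] ^ block[1], block[1] ^ block[2])


# Which position a given syndrome points to (None means no error seen).
SYNDROME_TO_FLIP = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(block: list[int]) -> list[int]:
    """Undo a single bit flip located by the syndrome."""
    fixed = list(block)
    position = SYNDROME_TO_FLIP[syndrome(fixed)]
    if position is not None:
        fixed[position] ^= 1
    return fixed


def decode(block: list[int]) -> int:
    """Read the logical bit back from a corrected block."""
    return block[0]


if __name__ == "__main__":
    noisy = encode(1)
    noisy[2] ^= 1  # a single disturbance flips the last bit
    print(syndrome(noisy), decode(correct(noisy)))
```

A quantum code has to protect against more kinds of errors than a bit flip, but the principle of measuring only error information, never the data, carries over.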

New mathematical tool key in the researchers' solution

The method developed by the researchers consists of an algorithm capable of simulating quantum computations that use a type of bosonic code known as the Gottesman-Kitaev-Preskill (GKP) code. This code is commonly used in leading implementations of quantum computers.
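To give a feel for what a GKP state looks like, the sketch below evaluates one commonly used textbook form of an approximate GKP wavefunction: a comb of narrow Gaussian peaks under a broad Gaussian envelope. It is not the simulation algorithm from the study. The function names, the units (with the reduced Planck constant set to 1), the peak spacing convention and the single width parameter `delta` are all assumptions chosen for this illustration.

```python
import math


def gkp_peak_positions(j: int, d: int, n_max: int) -> list[float]:
    """Peak centres for logical level j of a d-level GKP code.

    Assumed convention: centres at sqrt(2*pi/d) * (j + d*n), n an integer.
    """
    spacing = math.sqrt(2 * math.pi / d)
    return [spacing * (j + d * n) for n in range(-n_max, n_max + 1)]


def gkp_wavefunction(q: float, j: int, d: int, delta: float,
                     n_max: int = 10) -> float:
    """Unnormalised position-space amplitude of an approximate GKP state.

    Each peak has width delta; the overall envelope has width 1/delta.
    """
    total = 0.0
    for centre in gkp_peak_positions(j, d, n_max):
        envelope = math.exp(-0.5 * (delta * centre) ** 2)
        peak = math.exp(-0.5 * ((q - centre) / delta) ** 2)
        total += envelope * peak
    return total


if __name__ == "__main__":
    d, delta = 3, 0.2  # an odd dimension, as in the paper's title
    spacing = math.sqrt(2 * math.pi / d)
    for j in range(d):
        row = [gkp_wavefunction(k * spacing, j, d, delta) for k in range(d)]
        print(j, [f"{value:.3f}" for value in row])
```

In this picture, different logical levels have peaks at different positions, so a small shift in position can be recognised and undone before it is mistaken for another level.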

"The way it stores quantum information makes it easier for quantum computers to correct errors, which in turn makes them less sensitive to noise and disturbances. Due to their deeply quantum mechanical nature, GKP codes have been extremely difficult to simulate using conventional computers. But now we have finally found a unique way to do this much more effectively than with previous methods," says Giulia Ferrini, Associate Professor of Applied Quantum Physics at Chalmers and co-author of the study.

The researchers managed to use the code in their algorithm by creating a new mathematical tool. Thanks to the new method, researchers can now more reliably test and validate a quantum computer's calculations.

"This opens up entirely new ways of simulating quantum computations that we have previously been unable to test but are crucial for being able to build stable and scalable quantum computers," says Ferrini.

More about the research

The article "Classical simulation of circuits with realistic odd-dimensional Gottesman-Kitaev-Preskill states" has been published in Physical Review Letters. The authors are Cameron Calcluth, Giulia Ferrini, Oliver Hahn, Juani Bermejo-Vega and Alessandro Ferraro. The researchers are active at Chalmers University of Technology, Sweden, the University of Milan, Italy, the University of Granada, Spain, and the University of Tokyo, Japan.

Story Source:

Materials provided by Chalmers University of Technology. Note: Content may be edited for style and length.

Journal Reference:

- Cameron Calcluth, Oliver Hahn, Juani Bermejo-Vega, Alessandro Ferraro, Giulia Ferrini. Classical Simulation of Circuits with Realistic Odd-Dimensional Gottesman-Kitaev-Preskill States. Physical Review Letters, 2025; 135 (1) DOI: 10.1103/xmtw-g54f