frap

kiwifarms.net

- Joined: Oct 22, 2021

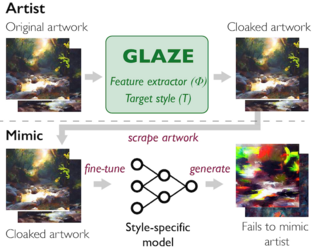

The AI arms race begins!

Glaze, a nonprofit masking program for art uploaded online, has been released: http://glaze.cs.uchicago.edu

![Can you be fat and healthy [v6mMpE8AaA0]-00.05.11-7787.jpg](http://uploads.kiwifarms.st/data/attachments/4804/4804200-a25b76477523e065814916828da61a5d.jpg?hash=olt2R3Uj4G)

![Can you be fat and healthy [v6mMpE8AaA0]-00.05.11-7787-upscaled-krita.jpg](http://uploads.kiwifarms.st/data/attachments/4804/4804204-16e2f5523e11cc94bb7da7e5f0f88e57.jpg?hash=FuL1Uj4RzJ)