No Easy Way Out: the Effectiveness of Deplatforming an Extremist Forum to Suppress Hate and Harassment

Anh V. Vu

University of Cambridge

Cambridge Cybercrime Centre

anh.vu@cl.cam.ac.uk

Alice Hutchings

University of Cambridge

Cambridge Cybercrime Centre

alice.hutchings@cl.cam.ac.uk

Ross Anderson

University of Cambridge and University of Edinburgh

ross.anderson@cl.cam.ac.uk

Abstract - Legislators and policymakers worldwide are debating options for suppressing illegal, harmful and undesirable material online. Drawing on several quantitative data sources, we show that deplatforming an active community to suppress online hate and harassment, even with a substantial concerted effort involving several tech firms, can be hard. Our case study is the disruption of the largest and longest-running harassment forum Kiwi Farms in late 2022, which is probably the most extensive industry effort to date. Despite the active participation of a number of tech companies over several consecutive months, this campaign failed to shut down the forum and remove its objectionable content. While briefly raising public awareness, it led to rapid platform displacement and traffic fragmentation. Part of the activity decamped to Telegram, while traffic shifted from the primary domain to previously abandoned alternatives. The forum experienced intermittent outages for several weeks, after which the community leading the campaign lost interest, traffic was directed back to the main domain, users quickly returned, and the forum was back online and became even more connected. The forum members themselves stopped discussing the incident shortly thereafter, and the net effect was that forum activity, active users, threads, posts and traffic were all cut by about half. The disruption largely affected casual users (of whom roughly 87% left), while half the core members remained engaged. It also drew many newcomers, who exhibited increasing levels of toxicity during the first few weeks of participation. Deplatforming a community without a court order raises philosophical issues about censorship versus free speech; ethical and legal issues about the role of industry in online content moderation; and practical issues on the efficacy of private-sector versus government action. 
Deplatforming a dispersed community using a series of court orders against individual service providers appears unlikely to be very effective if the censor cannot incapacitate the key maintainers, whether by arresting them, enjoining them or otherwise deterring them.

1. Introduction

Online content is now prevalent, widely accessible, and influential in shaping public discourse. Yet while online places facilitate free speech, they do the same for hate speech [1], and the line between the two is often contested. Some cases of stalking, bullying, and doxxing such as Gamergate have had real-world consequences, including violent crime and political mobilisation [2]. Content moderation has become a critical function of tech companies, but also a political tussle space, since abusive accounts may affect online communities in significantly different ways [3]. Online social platforms employ various mechanisms, for example, artificial intelligence [4], to detect, moderate, and suppress objectionable content [5], including “hard” and “soft” techniques [6]. These range from reporting users of illegal content to the police, through deplatforming users breaking terms of service [7], to moderating legal but obnoxious content [8], which may involve actions such as flagging it with warnings, downranking it in recommendation algorithms [9], or preventing its being monetised through ads [10].

Deplatforming may mean blocking individual users, but sometimes the target is not a single bad actor, but a whole community, such as one involved in crime [11]. It can be undertaken by industry, as when Cloudflare, GoDaddy, Google and some other firms terminated service for the Daily Stormer after the Unite the Right rally in Virginia in 2017 [12] and for 8Chan in August 2019 [13]; or by law enforcement, as with the FBI taking down DDoS-for-hire services in 2018 [14, 15] and 2022 [16, 17], and seizing Raid Forums in 2022 [18]. Industry disruption has often been short-lived; both 8Chan and Daily Stormer re-emerged or relocated shortly after being disrupted. Police intervention is often slow and less effective, and its impact may also be temporary [11]. After the FBI terminated Silk Road [19], the online drug market fragmented among multiple smaller ones [20]. The seizure of Raid Forums [18] led to the emergence of its successors Breach Forums, Exposed Forums, and Onni Forums. Furthermore, the FBI takedowns of DDoS-for-hire services cut the attack volume significantly, yet the market recovered rapidly [14, 15].

Kiwi Farms is the largest and longest-running online harassment forum [21]. It is often associated with real-life trolling and doxxing campaigns against feminists, gay rights campaigners and minorities such as disabled, transgender, and autistic individuals; some have killed themselves after being harassed [22]. Despite being unpleasant and widely controversial, the forum has been online for a decade and had been shielded by Cloudflare’s DDoS protection for years. This came to an end following serious harassment by forum members of a Canadian trans activist, culminating in a swatting incident in August 2022. (This is when a harasser falsely reports a violent crime in progress at the victim’s home, resulting in the arrival of a special-weapons-and-tactics (SWAT) team to storm the premises, placing the victim and family at risk.) This resulted in a community-led campaign on Twitter to pressure Cloudflare and other tech firms to drop the forum [23]. This escalated quickly, generating significant social media attention and mainstream headlines. A series of tech firms then attempted to take the forum down; they included DDoS protection services, infrastructure providers, and even some Tier-1 networks [24, 25, 26, 27]. This extraordinary series of events lasted for a few months and was the most sustained effort to date to suppress an active online hate community. It is notable that tech firms gave in to public pressure in this case, while they have in the past resisted substantial pressure from governments.

Existing studies have investigated the efficacy of deplatforming social-media users [28, 29, 30, 31, 32, 33, 34], yet there has been limited research – both quantitative and qualitative – into the effectiveness of industry disruptions against standalone hate communities such as bulletin-board forums, which tend to be more resilient as the content can be fully backed up and restored by the admins. This paper investigates how well the industry – the entities offering digital infrastructure for online services such as hosting and domain providers, security and protection services, certificate authorities, and ISP networks – dealt with a hate and harassment site.

We outline the disruption landscape in §2, then describe our methods, datasets, and ethics in §3. Our ultimate goal is to evaluate the efficacy of the effort, and to understand the impacts and challenges of deplatforming as a means to suppress online hate and harassment. Our primary research questions are tackled in subsequent sections: the impact of deplatforming on the forum activity and traffic is assessed in §4; the changes in the behaviour of forum members when their gathering place is disrupted, as well as the effects on the forum operators and the community who started the campaign are examined in §5. We discuss the role of industry in tackling online harassment, censorship and content regulation, as well as legal, ethical, and policy implications of the incident in §6. Our data collection and analyses were approved by our institutional Ethics Review Board (ERB). Our data and scripts are available to academics on request.

Figure 1: Number of daily posts, threads, users; and the incidents affecting Kiwi Farms during its one-decade lifetime.

Figure 2: Major incidents disrupting Kiwi Farms from September to December 2022. Green stars indicate the forum recovery.

2. Deplatforming and the Impacts

There is a complex ecosystem of online abuse that has been evolving for decades [35], where toxic content, surveillance, and content leakage are growing threats [36, 37]. While the number of personally targeted victims is relatively low, an increasing number of individuals, including children, are being exposed to online hate speech [38]. There can be a large grey area between criminal behaviour and socially acceptable behaviour online, just as in real life. And just as a pub landlord will throw out rowdy customers, so platforms have acceptable-use policies backed by content moderation [39], to enhance the user experience and protect advertising revenue [40].

Deplatforming refers to blocking, excluding or restricting individuals or groups from using online services, on the grounds that their activities are unlawful, or that they do not comply with the platform’s acceptable-use policy [7]. Various extremists and criminals have been exploiting online platforms for over thirty years, resulting in a complex ecosystem in which some harms are prohibited by the criminal law (such as terrorist radicalisation and child sex abuse material) while many others are blocked by platforms seeking to provide welcoming spaces for their users and advertisers. For a history and summary of current US legislative tussles and their possible side-effects, see Fishman [41]. The idea is that if a platform is used to disseminate abusive speech, removing the speech or indeed the speakers could restrict its spread, make it harder for hate groups to recruit, organise and coordinate, and ultimately protect individuals from mental and physical harm. Deplatforming can be done in various ways, ranging from limiting users’ access and restricting their activity for a time period, to suspending an account, or even stopping an entire group of users from using one or more services. Groups banned from major platforms may, however, displace to other channels, whether smaller websites or messenger services [7].

Different countries draw the line between free speech and hate speech differently. For example, the USA allows the display of Nazi symbols while France and Germany do not [42]. Private firms offering ad-supported social networks generally operate much more restrictive rules, as their advertisers do not want their ads appearing alongside content that prospective customers are likely to find offensive. People wishing to generate and share such material therefore tend to congregate on smaller forums. Some argue that taking down such forums infringes on free speech and may lead to censorship of legitimate voices and dissenting opinions, especially if it is perceived as politically motivated. Others maintain that deplatforming is necessary to protect vulnerable communities from harm. Debates rage in multiple legislatures; as one example, the UK Online Safety Bill will enable the (politically appointed) head of Ofcom, the UK broadcast regulator, to obtain court orders to shut down online places that are considered harmful [43]. This leads us to ask: how effective might such an order be?

2.1. Related Work

Most studies assessing the impact of deplatforming have worked with data on social networks. Deplatforming users may reduce activity and toxicity levels of relevant actors on Twitter [28] and Reddit [29, 30], limit the spread of conspiratorial disinformation on Facebook [31], reduce the engagement of peripheral members with hateful content [44], and minimise disinformation and extreme speech on YouTube [32]. But deplatforming has often made hate groups and individuals even more extreme, toxic and radicalised. They may view the disruption of their platform as an attack on their shared beliefs and values, and move to even more toxic places to continue spreading their message. There are many examples: the Reddit ban of r/incels in November 2017 led to the emergence of two standalone forums, incels.is and incels.net, which then grew rapidly; users banned from Twitter and Reddit exhibit higher levels of toxicity when migrating to Gab [33]; users who migrated to their own standalone websites after being banned from r/The_Donald expressed higher levels of toxicity and radicalisation, even though their posting activity on the new platform decreased [45, 46]; the ‘Great Deplatforming’ directed users to other less regulated, more extreme platforms [47]; the activity of many right-wing users who moved to Telegram increased multi-fold after they were banned on major social media [34]; users banned from Twitter are more active on Gettr [48]; communities that migrated to Voat from Reddit can be more resilient [49]; and roughly half of QAnon users moved to Poal after the Voat shutdown [50]. Blocking can also be ineffective for technical and implementation reasons: removing Facebook content after a delay appears to have had limited impact because of the short cycle of users’ engagement [51].

The major limitation of focusing on social networks is that these platforms are often under the control of a single tech company and thus content can be permanently removed without effective backup and recovery. We instead examine deplatforming a standalone website involving a concerted effort on a much wider scale by a series of tech companies, including some big entities that handle a large amount of Internet traffic. Such standalone communities, for instance, websites and forums, may be more resilient as the admin has control of all the content, facilitating easy backups and restores. While existing studies measure changes in posting activity and the behaviours of actors when their place is disrupted, we also provide insights about other stakeholders such as the forum operators, the community leading the campaign, and the tech firms that attempted the takedown.

Previous work has documented the impacts of law enforcement and industry interventions on online cybercrime marketplaces [20], cryptocurrency market price [52], DDoS-for-hire services [14, 15], the Kelihos, Zeus, and Nitol botnets [53], and the well-known click fraud network ZeroAccess [54]; yet how effective a concerted effort of several tech firms can be in deplatforming an extreme and radicalised community remains unstudied.

2.2. The Kiwi Farms Disruption

Kiwi Farms had been growing steadily over a decade (see Figure 1) and had been under Cloudflare’s DDoS protection for some years. (Cloudflare’s service tries to detect suspicious traffic patterns and drop malicious requests, only letting legitimate requests through.) An increase of roughly 50% in forum activity happened during the Covid-19 lockdown starting in March 2020, presumably as people were spending more time online. Prior interventions had resulted in the forum being banned from Google AdSense, and from Mastercard, Visa and PayPal in 2016; from hundreds of VPS providers between 2014–2019 [55]; and from selling merchandise on the print-on-demand marketplace Redbubble in 2016. XenForo, a closed-source forum platform, revoked its license in late 2021 [56]. DreamHost stopped its domain registration in July 2021 after a software developer killed himself following harassment by the site’s users. This did not disrupt the forum as it was given 14 days to seek another registrar [57]. While these interventions may have had negative effects on its profit and loss account, they did not affect its activity overall. The only significant disruption in the forum’s history was between 22 January and 9 February 2017 (19 days), when the forum’s owner suspended it himself because his family was being harassed [58]. (Our forum dataset also shows minor suspensions on 2 Feb 2013, 24 Jan 2016, 29 Sep 2017, and 11 Jan 2021, for which we found no clear reason.)

The disruption studied in this work was started by the online community in 2022. On 5 August 2022, a forum member made a false report to the police in London, Ontario, claiming that a Canadian trans activist had committed murders and was planning more, which led to her being swatted [23]. She and her family were then repeatedly tracked, doxxed, threatened, and generally harassed. In response, she launched a campaign on Twitter on 22 August 2022 under the hashtag #dropkiwifarms and planned a protest outside Cloudflare’s headquarters to pressure the company to deplatform the site [59]. This campaign generated lots of attention and mainstream headlines, which ultimately resulted in several tech firms trying to shut down the forum. This was the first time that the forum was completely inaccessible for an extended period due to an external action, with no activity in any online venue, including the dark web. It attempted to recover twice, but even when it eventually returned online, the overall activity was roughly halved.

The majority of actions taken to disrupt the forum occurred within the first two months of the campaign. Most of them were widely covered in the media and can be checked against public statements made by the industry and the forum admins’ announcements (see Figure 2). The forum came under a large DDoS attack on 23 August 2022, one day after the campaign started. It was then unavailable from 27 to 28 August 2022 due to ISP blackholing. Cloudflare terminated their DDoS prevention service on 3 September 2022 – just 12 days after the Twitter campaign started – due to an “unprecedented emergency and immediate threat to human life” [24]. The forum was still supported by DDoS-Guard (a Russian competitor to Cloudflare), but that firm also suspended service on 5 September 2022 [25]. The forum was still active on the dark web but this .onion site soon became inaccessible too. On 6 September 2022, hCaptcha dropped support; the forum was removed from the Internet Archive on the same day [60]. This left it under DiamWall’s DDoS protection and hosted on VanwaTech – a hosting provider describing themselves as neutral and non-censored [61]. On 15 September 2022, DiamWall terminated their protection [26] and the ‘.top’ domain provider also stopped support [27]. The forum was completely down from 19 to 26 September 2022 and from 23 to 29 October 2022. From 23 October 2022 onwards, several ISPs intermittently rejected announcements or blackholed routes to the forum due to violations of their acceptable use policy, including Voxility and Tier-1 providers such as Lumen, Arelion, GTT and Zayo. This is remarkable as there are only about 15 Tier-1 ISPs in the world. The forum admin devoted extensive effort to maintaining the infrastructure, fixing bugs, and providing guidance to users in response to password breaches. 
Eventually, by routing through other ISPs, Kiwi Farms was able to get back online on the clearnet and remain stable, particularly following its second recovery in October 2022.

Table I: Complete snapshots of public posts on Kiwi Farms and its primary competitor Lolcow Farm until 31 Dec 2022.

| Forums | No. posts | No. threads | No. active users |
|---|---|---|---|
| Kiwi Farms | 10 149 700 | 48 256 | 59.2k |
| Lolcow Farm | 4 593 076 | 10 029 | Unavailable |
| Total | 14 742 776 | 58 285 | Unavailable |

3. Methods, Datasets, and Ethics

Our primary method is data-driven, with findings supported by quantitative evidence derived from multiple longitudinal data sources, which we collect on a regular basis. Where quantitative measurements require enrichment – as when analysing relevant public statements of tech firms directly involved in the disruption, and announcements made by the forum operators – we use qualitative content analysis.

3.1. Forum and Imageboard Discussions

Besides mainstream social media channels like Facebook and Twitter, independent platforms such as xenForo (https://xenforo.com/) and Infinity (https://github.com/ctrlcctrlv/infinity/) have gained popularity as tools for building online communities. Despite being less visible and requiring more upkeep, these can offer greater resistance against external intervention as the operators have full control over the content and databases, allowing easy backup and redeployment in case of disruption. These platforms typically share a hierarchical data structure ranging from bulletin boards down to threads on specific topics, each containing several posts. While facilitating free speech, these also increasingly nurture and disseminate hate and abusive speech. As part of the ExtremeBB dataset [62], we have been scraping the two most active forums associated with online harassment, Kiwi Farms and Lolcow Farm, for years because of their increasingly toxic content.

Our collection includes not only posts but also associated metadata such as posting time, user profiles, reactions, and levels of toxicity, identity attack and threat measured by the Google Perspective API (https://perspectiveapi.com/) as of January 2023. Perspective API also offers other measures such as insult and profanity [63], but we exclude these as they are not relevant to the aim of this paper. The API uses crowdsourced annotations for model training and substantially outperforms the alternatives [64]. We strive to ensure data completeness by designing our scrapers to visit all sub-forums, threads, and posts while tracking the progress of every crawl so that it can resume incrementally after any interruption. A summary of the forum discussion data is shown in Table I.
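To illustrate how per-post scores for the three retained attributes can be obtained, the sketch below builds a Perspective API `AnalyzeComment` request body and extracts the summary score of each attribute from a response. It is a minimal sketch, not our collection pipeline: actually sending the request (an HTTP POST to the endpoint with an API key), rate limiting and retries are omitted, and scoring every post as English is an assumption.

```python
import json

# The three attributes retained in this paper; Perspective offers others
# (e.g. INSULT, PROFANITY) that we do not use.
ATTRIBUTES = ("TOXICITY", "IDENTITY_ATTACK", "THREAT")

# Public endpoint; a real call appends ?key=<API key> and POSTs the body.
ENDPOINT = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request(text: str, attributes=ATTRIBUTES) -> str:
    """Serialise an AnalyzeComment request body for a single post."""
    body = {
        "comment": {"text": text},
        "languages": ["en"],  # assumption: posts are scored as English
        "requestedAttributes": {attr: {} for attr in attributes},
    }
    return json.dumps(body)


def parse_scores(response: dict, attributes=ATTRIBUTES) -> dict:
    """Return the summary score (a value in [0, 1]) for each requested attribute."""
    scores = response["attributeScores"]
    return {attr: scores[attr]["summaryScore"]["value"] for attr in attributes}
```

Aggregating these per-post scores by thread, user or day is a separate step and is not shown.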

Kiwi Farms is built on xenForo, but the operators have been maintaining the forum on their own since late 2021, when xenForo officially revoked their license. Our data covers the entire history of the forum from early January 2013 to the end of 2022 with 10.1M posts in 48.3k threads made by 59.2k active users, providing a full landscape through its evolution over time. While some extremist forums experienced fluctuating activity and rapid declines in recent years [62], Kiwi Farms has shown stable growth until being significantly disrupted in 2022 (see Figure 1). Our data precisely capture major reported suspensions, including those in 2017 and 2022.

The primary rival of Kiwi Farms is Lolcow Farm, an imageboard built on Infinity [65, 66]. While Kiwi Farms discussions are largely text-based, Lolcow Farm is centred on descriptive images. Kiwi Farms users adopt pseudonyms, whereas Lolcow Farm users mostly remain hidden under the unified ‘Anonymous’ handle. We gathered a complete snapshot of Lolcow Farm from its inception in June 2014 to the end of 2022, encompassing 4.6M posts made in 10.0k threads. Lolcow Farm has far fewer threads, but each typically contains many posts. This collection brings the total number of posts for both forums to 14.7M (and still growing). We exclude Lolcow, a smaller competitor to Kiwi Farms (also based on xenForo), as it vanished in mid-2022 and had fewer than 30k posts in total. As Lolcow Farm is now the largest competitor, analysing it lets us estimate platform displacement when Kiwi Farms was down.

3.2. Telegram Chats

During periods of inaccessibility, activity increased in the Telegram groups associated with Kiwi Farms. There are two channels: one is used primarily by the forum operators to disseminate announcements and updates, particularly about where and when the forum could be accessed; the other is used by forum members mainly for general discussion. Both channels permit public access, allowing people to join and view historical messages. We used Telethon (https://telethon.dev/) to collect a snapshot of these channels over their entire lifespan until the end of 2022, encompassing 525k messages, 298k replies, and associated metadata such as view counts and 356k emoji reactions made by 2 502 active users. The data is likely complete as our scraper runs in near real time, and messages and their metadata are fully captured through the official Telegram APIs. As the forum operators are highly incentivised to keep users quickly informed, their announcements provide a reliable incident and response timeline.
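Aggregate figures such as those above (messages, replies, reactions, active users) reduce to simple counting once messages are flattened into records. The sketch below assumes a hypothetical `ChatMessage` record that a separate step would populate from Telethon message objects (for instance those yielded by `TelegramClient.iter_messages`); the field names are ours, and treating "active users" as distinct non-anonymous senders, with replies counted inside the message total, is an assumption rather than a stated definition.

```python
from dataclasses import dataclass, field
from typing import Iterable, Optional


@dataclass
class ChatMessage:
    """Hypothetical flattened record for one Telegram message."""
    msg_id: int
    sender_id: Optional[int]            # None for anonymous channel posts
    reply_to_id: Optional[int] = None   # id of the message replied to, if any
    reaction_counts: dict = field(default_factory=dict)  # reaction label -> count


def summarise(messages: Iterable[ChatMessage]) -> dict:
    """Count messages, replies, emoji reactions and distinct active senders."""
    n_messages = n_replies = n_reactions = 0
    senders = set()
    for m in messages:
        n_messages += 1
        if m.reply_to_id is not None:
            n_replies += 1  # replies are also counted as messages
        n_reactions += sum(m.reaction_counts.values())
        if m.sender_id is not None:
            senders.add(m.sender_id)
    return {
        "messages": n_messages,
        "replies": n_replies,
        "reactions": n_reactions,
        "active_users": len(senders),
    }
```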

3.3. Web Traffic and Search Trends Analytics

We found from announcements in the Telegram group that Kiwi Farms could be accessed through six major domains: the primary one is kiwifarms.net; four alternatives are kiwifarms.ru, kiwifarms.top, kiwifarms.is, and kiwifarms.st; and a Pleroma decentralised web version is at kiwifarms.cc. (Other domains include kiwifarms.tw and kiwifarms.hk, but these are either new or insignificant, so their traffic is negligible.) To investigate how users navigated across these domains when the forum experienced disruption, we analysed traffic analytics for all six domains provided by Similarweb (https://similarweb.com/), a leading platform providing insights and intelligence into web traffic and performance. Another popular web analytics platform is Semrush (https://semrush.com/), but it does not offer daily statistics. Similarweb’s reports aggregate anonymous statistics from multiple inputs, including its own analytics services, data sharing from ISPs and other measurement companies, data crawled from billions of websites, and device traffic data (both website and app) such as plugins, add-ons and pixel tracking. Its algorithm then extrapolates from this aggregated data to the entire Internet. Its estimates therefore may not be completely precise, but they reliably reflect trends at both global and country levels. To test that reliability, we deployed our own infrastructure to collect over 19M ground-truth traffic records over six months, grouped them into 30-minute sessions, and compared these with Similarweb visits. We find that while Similarweb underestimates the amount of traffic due to how repeat pageviews are counted, it captures trends with a strong positive linear relationship (Pearson correlation coefficient r=0.83). Our analysis in the next section also suggests a high correlation between the traffic data and the forum activity.

As Similarweb does not offer an academic license, we use a free trial account to access longitudinal web traffic and engagement data going back three months. (A business subscription offers six months of historical data, but neither it nor the free trial provides access to longitudinal country-based records.) This includes information about total visits, unique visitors, visit duration, pages per visit, bounce rate, and page views. It also provides figures on search activity, data for marketing such as visit sources (e.g., direct, search, email, social, referral, ads), and non-temporal insight into audience geography and demographics. These data cover both desktop and mobile traffic and span July to December 2022, two months before and four months after the disruption. This time frame is sufficient as there had been no significant industry intervention against the forum before (as shown in Figure 1), and the disruption campaign mostly ended after a few months (see §4). In addition, we collected search trends by country and territory over time from Google Trends, covering the entire lifetime of the forum. Both datasets are likely to be complete as they were gathered directly from Similarweb and Google.

3.4. Tweets Made by the Online Community

The disruption campaign started on Twitter on 22 August 2022 with tweets posted under the hashtag #dropkiwifarms. We gathered the main tweets plus associated metadata, such as posting time and reactions (e.g., replies, retweets, likes, and quotes), using Snscrape (https://github.com/JustAnotherArchivist/snscrape/), an open-source Python framework for social network scraping. As it uses Twitter APIs as the underlying method, the data are likely to be complete. We collected 11 076 tweets made by 3 886 users, spanning the entire campaign period. This data helps us understand the community reaction throughout the campaign, when the industry took action, and when the forum recovered. There might be more related tweets without the hashtag #dropkiwifarms of which we are unaware, but scanning the whole Twitter space is infeasible. The trend measured by our collection is likely to be representative, as the campaign was centred on this hashtag.

3.5. Data Licensing

Our datasets and scripts for data collection and analysis are available to academics, as well as an interactive web portal to assist those who lack technical skills to access our data [67]. However, as both researchers and actors such as forum members might be exposed to risk and harm [68], we decline to make our data publicly accessible. It is our standard practice at the Cambridge Cybercrime Centre to require our licensees to sign an agreement to prevent misuse, to ensure the data will be handled appropriately, and to keep us informed about research outcomes [69]. We have a long history of sharing such sensitive data, and robust procedures carefully crafted in conjunction with legal academics, university lawyers and specialist external counsel to enable data sharing across multiple jurisdictions.

3.6. Ethical Considerations

Our work was formally approved by our institutional Ethics Review Board (ERB) for data collection and analysis. Our datasets are collected from publicly available forums and channels, which are accessible to all. We collected data from the forum while it was hosted in the US; according to a 2022 US court case, scraping public data is legal [70]. Our scraping method does not violate any regulations and does not cause negative consequences to the targeted websites, e.g., bandwidth congestion or denial of service. It would be impractical to send thousands of messages to gain consent from all forum and Telegram members; we assume they are aware that their activity in public online places will be widely accessible.

In contrast to some previous work on online forums, we name the investigated forums in this paper. Pseudonymising the forum name is pointless because of the high-profile campaign being studied. Thus, we avoid the pretence that the forum is not identifiable and instead focus on accounting for the potential harms to both researchers and involved actors associated with our research. We designed our analysis to be ethical by presenting only aggregate behaviours, so that private and sensitive information about individuals cannot be inferred. This is in accordance with the British Society of Criminology Statement on Ethics [71].

Researchers may be at risk and may experience various elevated digital threats when doing work on sensitive resources [68, 72]. Studying extremist forums may introduce a higher risk of retaliation than other forums, resulting in mental or physical harm. We have taken measures to minimise potential harm to researchers and involved actors when doing studies with human subjects and at-risk populations [73, 74]. For example, we consider options to anonymise authors’ names or use pseudonyms for any publication related to the project, including this paper, if necessary. We also refrain from directly looking at media, which may cause emotional harm; our scrapers thus only collect text while discarding images and videos. Although all datasets are widely accessible and can be gathered by the public, we refrain from scraping private and protected posts behind the login wall due to safety and legality concerns.

Figure 3: Normalised levels of global search and web traffic to Kiwi Farms. The red bubble indicates the Streisand effect.

Figure 4: Number of daily posts, threads, and active users on Kiwi Farms, its Telegram channels, and its primary competitor Lolcow Farm, as well as major disruptions and displacement between platforms. The red star indicates the Streisand effect.

4. The Impact on Forum Activity and Traffic

On 3 September 2022, Cloudflare discontinued its DDoS protection service for Kiwi Farms, which attracted major publicity. This intervention led to a sudden seven-fold spike in global search interest in Kiwi Farms, while web traffic to the forum’s six major domains doubled on 4 September 2022 (see Figure 3). This phenomenon, known as the Streisand effect, may have been driven by curiosity about what had happened to the platform; it is relatively rare and mainly seen with ‘freedom of speech’ issues [11]. It suggests that attempts at censorship may end up being counterproductive [75]: a disruptive effort aimed at reducing user interactions instead had the unintended consequence of increasing attention, although this lasted only a few days before declining sharply.

We examine in detail the impacts of the disruption and the forum’s recovery on Kiwi Farms over the six months from July to December 2022. This timeframe is sufficient because the forum was growing steadily before the disruption and the campaign was mostly over by the end of the period. To assess the impacts, we split the observed data points at 3 September 2022 into the post-disruption period (first group) and the pre-disruption period (second group). We then use the Mann–Whitney U test (as the samples are not paired and the data does not follow a normal distribution) to compare the mean ranks of the two populations. The effect size, indicating the magnitude of the observed difference, is assessed by Cliff’s delta (δ), which ranges over [-1, 1].
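For readers unfamiliar with the two statistics, the following is a minimal pure-Python sketch of how U and Cliff’s δ relate, not the analysis code used for the paper. It assumes the post-disruption series is passed as the first sample, in which case δ equals 2U/(n₁n₂) − 1 for that sample’s U. The p-value uses the normal approximation with tie correction and no continuity correction, so it may differ slightly from values reported by a statistics package; the function names are our own.

```python
import math
from collections import Counter
from itertools import product


def mann_whitney_u(first, second):
    """U statistic for `first`: pairs with first > second count 1, ties count 0.5."""
    u = 0.0
    for a, b in product(first, second):
        if a > b:
            u += 1.0
        elif a == b:
            u += 0.5
    return u


def cliffs_delta(first, second):
    """Cliff's delta = P(first > second) - P(first < second), in [-1, 1]."""
    greater = sum(1 for a, b in product(first, second) if a > b)
    less = sum(1 for a, b in product(first, second) if a < b)
    return (greater - less) / (len(first) * len(second))


def delta_from_u(u, n1, n2):
    """Equivalent form of Cliff's delta when U is computed for the first sample."""
    return 2 * u / (n1 * n2) - 1


def mann_whitney_p(first, second):
    """Two-sided p-value via the normal approximation with tie correction."""
    n1, n2 = len(first), len(second)
    n = n1 + n2
    deviation = mann_whitney_u(first, second) - n1 * n2 / 2
    ties = Counter(list(first) + list(second))
    tie_term = sum(t ** 3 - t for t in ties.values()) / (n * (n - 1))
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term))
    if sigma == 0:  # every value tied: no evidence of a difference
        return 1.0
    return math.erfc(abs(deviation) / sigma / math.sqrt(2))


# Toy daily counts (illustrative only, not the paper's data).
post = [40, 55, 38, 61, 47]
pre = [120, 98, 133, 110, 125, 101]
print(mann_whitney_u(post, pre), cliffs_delta(post, pre))  # 0.0 -1.0
```

As a consistency check, and assuming one data point per day from 1 July to 31 December 2022 (n₁ = 120 post-disruption days, n₂ = 64 pre-disruption days), `delta_from_u(418.00, 120, 64)` gives about -0.89, matching the δ reported for Kiwi Farms posts in Table II.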

4.1. The Impact of Major Disruptions

While some DDoS attacks were large enough to take the forum down, their impact was temporary. For example, the DDoS attack on 23 August 2022, which was probably associated with the Twitter campaign the previous day, led to a drop of roughly 35% in posting volume, yet forum activity recovered the next day to a slightly higher level (see the first graph of Figure 4). The DDoS attack during Christmas 2022 was also short-lived. The ISP blackholing on 26 August 2022 was more serious, silencing the forum for two consecutive days, yet the forum again recovered quickly.

The most significant and long-lasting impact was caused by the substantial industry disruption that we analyse in this paper. While forum activity dropped immediately by around 20% after Cloudflare’s action on 3 September 2022, the forum was still online at kiwifarms.ru, hosting the same content. Activity did not degrade significantly until DDoS-Guard’s action on 5 September 2022, which took down the Russian domain. By 18 September 2022, all domains were unavailable, including the .onion address (presumably because its hosting had been identified); forum activity dropped to zero and stayed there for a week. The operator managed to bring the forum back online for the first time on 27 September 2022, after which it ran stably on both the dark web and the clear web for roughly one month until Zayo, a Tier-1 ISP, blocked it on 23 October 2022. This led to another silent week before the forum recovered a second time on 30 October 2022. It has been stable since then without serious downtime, except for the ISP blackholing on 22 December 2022, which led to a 70% drop in activity.

In general, although the forum is now stably back online, hosted by 1776 Solutions (a company also founded by the forum’s owner), it has so far failed to bounce back to the pre-disruption level, with the number of active users and the posting volume roughly halved. The concerted effort we analysed was much more effective than previous DDoS attacks, yet it still could not silence the forum for long.

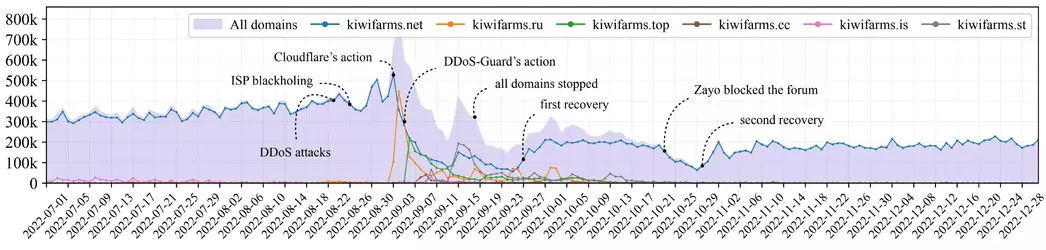

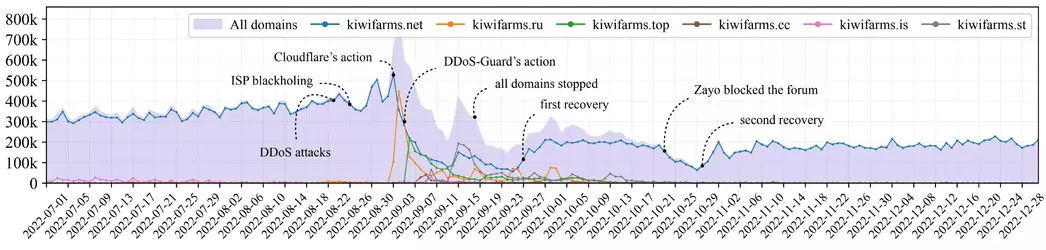

Figure 5: Number of daily estimated visits to Kiwi Farms and the fragmentation of traffic to previously abandoned domains. Traffic to the primary domain is non-zero even while the forum was down, presumably because Similarweb counted unsuccessful attempts.


4.2. Platform Displacement

The natural behaviour of online communities when their usual gathering place becomes inaccessible is to seek alternative places or channels to continue their discussions. The second graph in Figure 4 illustrates an initial shift of forum activity to Telegram on 27 August 2022, right after the ISP blackholing. This was accompanied by thousands of emoji reactions to the admin’s announcements, since commenting was not allowed at that time. Community reactions (e.g., replies, emojis) appear to have tracked the overall Telegram posting activity, which increased rapidly afterwards and occasionally even surpassed the forum’s activity, especially after the publicity given to the Cloudflare and DDoS-Guard actions. At some points, for instance in early October and November 2022, the total number of messages on Kiwi Farms and its Telegram channels significantly exceeded the pre-disruption posting volume on the forum. However, significant displacement occurred only when all domains became completely inaccessible on 18 September 2022, and again when Zayo blocked the second incarnation of the forum on 22 October 2022. The shift to the Telegram channels appears to have been rapid yet rather temporary: Kiwi Farms users quickly returned to the primary forum when it became available, while discussion activity on the Telegram channels gradually declined.

There was no significant shift in activity from the forum to its primary competitor Lolcow Farm (see the third graph of Figure 4); however, there was an increase in posting on Lolcow Farm about the incident, indicating a minor change of discussion topic (see more in §5.4). It is unclear whether these posting users migrated from Kiwi Farms, as Lolcow Farm does not use handles, making user counts unavailable. Lolcow Farm also experienced downtime on 17 and 18 September 2022 (overlapping with Kiwi Farms going down on 18 September), but we have no reliable evidence to explain it. Another drop occurred around Christmas 2022, in sync with Kiwi Farms, perhaps because of the holiday. Lolcow Farm’s activity returned to its previous level quickly after these drops, suggesting that the campaign did not significantly affect Lolcow Farm or drive content between the rival ecosystems; the displacement we observed on Kiwi Farms was mostly ‘internal’ within its own ecosystem rather than an ‘external’ shift to other forums. While the disruption’s impact on Kiwi Farms and its Telegram channels is highly significant with a very large effect size, it is not significant for Lolcow Farm, where the effect size is small (see Table II).

Table II: The significance of the disruption in daily activities of Kiwi Farms, its Telegram channels, and Lolcow Farm.

| Platforms | Variables | Mann–Whitney U tests | δ |
|---|---|---|---|
| Kiwi Farms | # posts | U = 418.00, p < .0001 | -0.89 |
| | # threads | U = 416.50, p < .0001 | -0.89 |
| | # users | U = 388.00, p < .0001 | -0.90 |
| Kiwi Farms Telegram | # messages | U = 7461.50, p < .0001 | 0.94 |
| | # replies | U = 6980.00, p < .0001 | 0.82 |
| | # emojis | U = 7201.00, p < .0001 | 0.88 |
| | # users | U = 7361.50, p < .0001 | 0.92 |
| Lolcow Farm | # posts | U = 3349.00, p = .1540 | -0.13 |
| | # threads | U = 3830.50, p = .9791 | -0.00 |

Table III: The significance of the disruption in daily traffic to each individual domain of Kiwi Farms, and to all domains.

| Domains | Variables | Mann–Whitney U tests | δ |
|---|---|---|---|
| kiwifarms.net | # visits | U = 99.00, p < .0001 | -0.97 |
| kiwifarms.ru | # visits | U = 3310.50, p = .1228 | -0.14 |
| kiwifarms.top | # visits | U = 6125.50, p < .0001 | 0.60 |
| kiwifarms.cc | # visits | U = 3670.00, p = .6221 | -0.04 |
| kiwifarms.is | # visits | U = 748.00, p < .0001 | -0.81 |
| kiwifarms.st | # visits | U = 6560.00, p < .0001 | 0.71 |
| All domains | # visits | U = 545.00, p < .0001 | -0.86 |

4.3. Traffic Fragmentation

Before Cloudflare’s action, traffic to Kiwi Farms (measured by Similarweb) was relatively steady and went mostly to the primary domain. However, once the site was disrupted, we see the Streisand effect as an immediate peak of around 50% more visits and 85% more visitors. The publicity generated by the takedown presumably boosted awareness and attracted people to visit both the primary and alternative domains. Traffic to the primary domain then fragmented significantly across previously abandoned domains, with kiwifarms.net accounting for less than 50% of traffic one day after Cloudflare’s intervention, as shown in Figure 5.

Following the unavailability of kiwifarms.net, most traffic was directed to kiwifarms.ru, which was under DDoS-Guard’s protection (accounting for around 60% of total traffic on 4 September 2022). DDoS-Guard’s action on 5 September 2022 sharply reduced traffic to kiwifarms.ru, while traffic to kiwifarms.top peaked. The suspension of kiwifarms.top on the following day led to increased traffic to kiwifarms.cc (a Pleroma decentralised web instance), but this lasted only a couple of days before traffic shifted again to kiwifarms.is. The seizure of kiwifarms.is later shifted traffic to kiwifarms.st, but this was also short-lived.
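The fragmentation described above can be quantified as each domain’s share of the day’s total visits, together with the domain receiving the most traffic each day. The sketch below assumes daily per-domain visit estimates (such as those Similarweb provides) are already available as equal-length lists; the numbers are made up for illustration and the function names are hypothetical.

```python
def daily_shares(visits_by_domain):
    """Per-day share of total visits for each domain.

    visits_by_domain maps a domain to a list of daily visit estimates;
    all lists are assumed to cover the same days in the same order.
    """
    domains = list(visits_by_domain)
    n_days = len(visits_by_domain[domains[0]])
    shares = []
    for day in range(n_days):
        total = sum(visits_by_domain[d][day] for d in domains)
        # Days with no recorded visits get zero shares instead of a division error.
        shares.append({d: (visits_by_domain[d][day] / total if total else 0.0) for d in domains})
    return shares


def dominant_domains(shares):
    """Domain with the largest share each day (None if no traffic was recorded)."""
    return [max(day, key=day.get) if any(day.values()) else None for day in shares]


# Hypothetical daily visits (illustrative numbers, not Similarweb data).
visits = {
    "kiwifarms.net": [900, 400, 50, 60],
    "kiwifarms.ru": [50, 500, 100, 20],
    "kiwifarms.top": [10, 40, 700, 30],
}
shares = daily_shares(visits)
print(dominant_domains(shares))  # ['kiwifarms.net', 'kiwifarms.ru', 'kiwifarms.top', 'kiwifarms.net']
print(round(shares[1]["kiwifarms.net"], 2))  # 0.43, i.e. the primary domain is below 50%
```

Tracking the dominant domain day by day makes the sequence of shifts between surviving domains visible, while the primary domain’s share captures how far traffic had fragmented.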

The forum’s recovery on 27 September 2022 gradually directed almost all traffic back to the primary domain, and by 22 October 2022, kiwifarms.net accounted for almost all traffic, albeit at about half the previous volume. This pattern is highly consistent with our forum data, suggesting that it is reliable. Overall, our evidence suggests clear traffic fragmentation across the different domains of Kiwi Farms, with people attempting to visit surviving domains when one was disrupted. While the fragmentation is clear, the impact on two domains (kiwifarms.ru and kiwifarms.cc) is not significant when the period is assessed as a whole. However, the impact on total traffic is highly significant, notably through the substantial drop for the primary domain kiwifarms.net (see Table III).

5. The Impacts on Relevant Stakeholders

We have examined the impacts of the disruption on Kiwi Farms itself. This section examines the effects on relevant stakeholders, including the harassed victim, the community leading the campaign, the industry, the forum operators, and active forum users (those who posted at least once). As our ethics approval does not allow the study of individuals, all measurements are conducted collectively on subsets of users. Besides quantitative evidence, we also qualitatively examine statements made by tech firms about the incident.

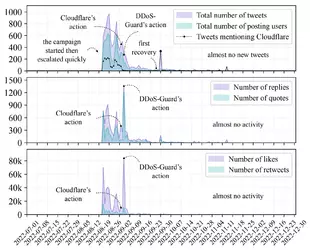

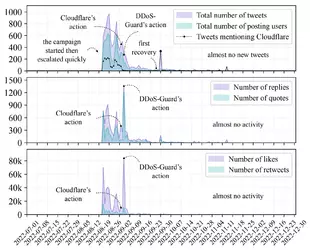

Figure 6: Number of daily tweets and reactions made by the community about the campaign. Figure scales are different.


5.1. The Community that Started the Campaign

There were 3 886 users in the online community involved in starting the campaign. Of these, 1 670 users (42.97%) were responsible for around 80% of tweets. There was a sharp increase in tweets and reactions at the beginning (see Figure 6). The first peak was on 25 August 2022, with nearly 900 tweets by around 600 users. However, this dropped rapidly to fewer than 100 tweets per day within a few weeks, once Cloudflare and DDoS-Guard had taken action, and almost to zero two weeks later. The number of tweets mentioning Cloudflare’s accounts (its official account as well as those for jobs, help, and developers) was around 200 at the beginning but decreased over time, dropping to zero after the firm took action. This lasted for roughly one month; after the forum recovered, we see around 400 tweets mentioning Cloudflare, twice the previous peak and accounting for almost all such tweets that day. However, these tweets appear to have been primarily associated with another campaign, identified by the hashtag #stopdoghate, suggesting a short-lived outlier rather than a genuine peak.

The trans activist who launched the campaign was engaged at the beginning but then posted far fewer new tweets, although she still replied to people. Her posting volume was, however, trivial compared to the overall numbers: she made only four tweets on the day the campaign started, then just one on 4 September 2022 after Cloudflare took action, and none thereafter. This suggests that although she sparked the campaign, she might not have been its primary driver.

We see no notable peak of tweets after the forum was completely shut down, suggesting a clear loss of interest in pursuing the campaign, both among people posting tweets and among those reacting to them. The community seemed to lose interest within a few weeks, once it appeared to have achieved its goal: ‘Kiwi Farms is dead, and I am moving on to the next campaign’, tweeted the activist.

5.2. The Industry Responses

Unlike forum activity, the impact on industry actors is not captured by any quantitative data, so we switch to a qualitative analysis of public statements made by those who directly attempted to terminate the forum. We first compiled a list of the tech firms involved in the takedown incidents, then searched their websites, news, and blogs for official statements, where available. We repeated the search regularly, and the final list consists of four firms: Cloudflare, DDoS-Guard, DiamWall, and Harica. We then took a deductive approach to understand (1) their hosting policies, (2) their perspectives on Kiwi Farms, and (3) their reactions to community pressure.

Cloudflare stated their abuse policies on 31 August 2022 without directly mentioning the Twitter campaign [76]. In summary, the firm offers traffic proxying and DDoS protection to many (mostly non-paying) sites regardless of the content hosted, including Kiwi Farms. The firm maintained that abusive content alone is not an issue, and that the forum, while immoral, still deserves the same protection as other customers as long as it does not violate US law. Although Cloudflare are entitled to refuse business from Kiwi Farms, they initially took the view that doing so because of its content would set a bad precedent, leading to unintended consequences for content regulation and making things harder for Cloudflare. This could affect the whole Internet, as Cloudflare handles a large proportion of network traffic. They did not want to get involved in policing online content, but if they had to, they would rather do so in response to a court order than to popular opinion. The firm had previously dropped the neo-Nazi website Daily Stormer [12] and the extremist board 8Chan [13] because of their links with terrorist attacks and mass murders and, in the Daily Stormer case, a false claim that Cloudflare secretly supported the site. They also claimed that dropping service for Kiwi Farms would not remove the hate content, but would only slow it down for a while.

Nevertheless, Cloudflare made a U-turn a few days later, on 3 September 2022, announcing that they would terminate service for Kiwi Farms [24]. They explained that the escalating pressure campaign had made users more aggressive, which might lead to crime. They had reached out to law enforcement in multiple jurisdictions regarding potential criminal acts, but as the legal process was too slow compared to the escalating threat, they made the decision alone [24]. They still claimed that following a legal process would be the correct policy, and denied that the decision was the direct result of community pressure. Cloudflare’s action also inadvertently led to the termination of service for a neo-Nazi group in New Zealand, as it was hosted by the same company as the forum [77].

DDoS-Guard’s statements about the incident told a similar story [25]. Although they can restrict service to customers who violate their acceptable use policy, content moderation is not their duty (except under a court order), so they do not need to determine whether every site they protect violates the law. DiamWall took the same line; they claimed that they are not responsible for, and are unable to moderate, content hosted on websites [26]. They also maintained that terminating services in response to public pressure is not good policy, but that the case of Kiwi Farms was exceptional due to its ‘revolting’ content. They also noted that their actions could only delay things, not fix the root cause, as the forum could find another provider. DiamWall’s statement was later removed without explanation; it is now accessible only through online archives.

Unlike the three firms above, Harica, a Greek certificate authority issuing certificates for .onion sites, took a different line. They affirmed their support for freedom of speech and stated that they would not censor any website, but that they are obliged to investigate complaints about websites violating the law, the Certificate Policy (CP), or the Certificate Practice Statement (CPS). After a review process, on 15 May 2023 they announced that they would revoke the .onion certificates issued to Kiwi Farms due to concerns about harassment linked to suicides, giving Kiwi Farms three days to find a new authority. Their support team was then targeted by various threats and harassment. However, after one ‘kind and polite’ message highlighted that Harica is one of only two authorities issuing .onion certificates, they postponed the decision the following day, pending further law enforcement investigation, as Kiwi Farms has very limited alternatives for protecting its site [78].

Whether blocking Kiwi Farms or not, it is understandable that infrastructure and certificate providers may not want to get involved in content regulation the way Facebook and Google have to, as moderation is complex, challenging, contentious and expensive [79].

5.3. The Forum Operators

Figure 7: Number of public announcements posted daily by the forum operators since the Telegram channel was created.


The disruption of Kiwi Farms led to a cat-and-mouse game in which tech firms tried to shut it down by various means while the forum operators tried to bring it back up. We extracted the forum operators’ messages from a Telegram channel activated after the Twitter campaign, where they posted 107 announcements during the period, mostly about when and where the forum was back, ongoing issues (e.g., DDoS attacks, industry blocks), and their plans to fix them.

The admins were very active, for example sending seven consecutive messages on 23 August 2022, mostly concerning the large DDoS attack that day (see Figure 7). The second peak was on 6 September 2022, after Cloudflare and DDoS-Guard withdrew service, and mostly concerned forum availability. The number of announcements then gradually decreased, especially after the second recovery, with many days having no messages. A DDoS attack hitting the forum during Christmas 2022 caught the admins’ attention for a while. Their activity was inversely correlated with the forum’s stability: they were less active when the site was running stably or when there were no new incidents, and many announcements were posted in September, late October, and late December 2022, when the forum was under DDoS attacks and disruptions (see Figure 4).

We took a deductive approach based on the extracted announcements to understand the effort made by the forum operators to restore service. Kiwi Farms needed DDoS protection to hide its origin IP address and withstand cyberattacks, so the operators first switched their third-party DDoS protection to DDoS-Guard, then DiamWall, but these firms also withdrew their services. They then attempted to build an anti-bot mechanism themselves based on HAProxy, an open-source proxy, using proof-of-work to stop bots, spam, and DDoS [80], and claimed it was resilient to thousands of simultaneous connections. They also changed hosting providers, first to VanwaTech and eventually to their own firm 1776 Solutions, and attempted to route their traffic through other ISPs. They actively maintained infrastructure, fixed bugs, and gave users instructions on handling their passwords when the forum suffered a breach. The operators’ efforts appeared competent and consistent.
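To illustrate the proof-of-work idea behind such anti-bot gates, here is a generic hashcash-style challenge in Python. It is a minimal sketch, not the forum’s actual HAProxy-based implementation: the SHA-256 construction, the `challenge:nonce` encoding, the difficulty values and all function names are assumptions chosen for illustration.

```python
import hashlib
import secrets


def new_challenge() -> str:
    """Server side: issue a random challenge (a real gate would bind it to the client and expire it)."""
    return secrets.token_hex(16)


def leading_zero_bits(digest: bytes) -> int:
    """Count the leading zero bits of a hash digest."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        bits += 8 - byte.bit_length()
        break
    return bits


def solve(challenge: str, difficulty: int) -> int:
    """Client side: brute-force a nonce so SHA-256(challenge:nonce) has `difficulty` leading zero bits."""
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()
        if leading_zero_bits(digest) >= difficulty:
            return nonce
        nonce += 1


def verify(challenge: str, nonce: int, difficulty: int) -> bool:
    """Server side: one hash checks work that cost the client about 2**difficulty hashes."""
    digest = hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()
    return leading_zero_bits(digest) >= difficulty


challenge = new_challenge()
nonce = solve(challenge, 16)
print(verify(challenge, nonce, 16))  # True
```

The asymmetry is the point of the design: a legitimate visitor pays a small one-off cost per session, while a bot opening thousands of connections must pay it thousands of times, and the server’s verification cost stays at a single hash.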

5.4. The Forum Members

People sharing the same passion naturally coalesce into communities, in which some key actors may play a crucial role in influencing the ecosystem [81, 82, 83]. We separate the pre-disruption and post-disruption periods at 3 September 2022, when Cloudflare took action. Kiwi Farms activity is highly skewed: applying the 80/20 rule (the Pareto principle [84]), around 80% of pre-disruption posts were made by the 8.96% most active users (5 159; hereafter core users), while the remaining 20% were made by the 91.04% less active users (52 430; casual users). There was a drop of around 30% in the number of users after the disruption, as seen in Figure 4.
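The core/casual split can be sketched as follows: rank users by pre-disruption post count and take the smallest set of top posters that together account for 80% of posts. This is our reading of how the 80/20 rule is applied here; how ties at the cut-off were handled is not stated, so the tie-breaking below (by input order) is an assumption, and `pareto_split` is a hypothetical name.

```python
def pareto_split(post_counts, share=0.8):
    """Return (core, casual) user sets.

    core is the smallest set of most active users whose posts reach `share`
    of all posts; everyone else is casual. Ties keep input order (assumption).
    """
    ranked = sorted(post_counts.items(), key=lambda kv: kv[1], reverse=True)
    total = sum(post_counts.values())
    core, cumulative = set(), 0
    for user, count in ranked:
        if cumulative >= share * total:
            break
        core.add(user)
        cumulative += count
    casual = set(post_counts) - core
    return core, casual


# Hypothetical post counts per user.
counts = {"a": 50, "b": 35, "c": 6, "d": 4, "e": 3, "f": 2}
core, casual = pareto_split(counts)
print(sorted(core), sorted(casual))  # ['a', 'b'] ['c', 'd', 'e', 'f']
```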

There were 1 571 new usernames after the disruption, which could belong either to newcomers or to old members creating new accounts after losing access to their old ones. Multi-platform users tend to pick similar pseudonyms on different platforms [85]; we believe returning users are also likely to do so to preserve their reputation, so they can be detected if their usernames are very similar and rare enough, although common handles are often chosen by multiple individuals [86]. We measure the similarity of two usernames by their Levenshtein distance, and use an n-gram model trained on the Reuters corpus [87] to estimate the rarity of usernames, considering a username rare if the highest probability observed is not greater than 1%. We found 5.31% such users among the 1 571 new pseudonyms: 11 returning core actors (0.21% of core users) and 72 returning casual actors (0.14% of casual users), while the remaining 1 488 are newcomers. This estimate may miss returning members who chose entirely new usernames, yet we believe their number is relatively small, as a mass password reset was mandated after the breach rather than account replacement.
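A minimal sketch of the returning-user heuristic follows. The Levenshtein distance is the standard dynamic-programming edit distance; the rarity test is a simplified stand-in for the Reuters-trained n-gram model, treating a username as rare when none of its alphabetic tokens has a corpus probability above 1%. The tokenisation, the toy corpus and the distance cut-off (`max_distance=2`) are hypothetical, since the exact parameters are not given.

```python
import re
from collections import Counter


def levenshtein(a: str, b: str) -> int:
    """Edit distance (insertions, deletions, substitutions) via dynamic programming."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = curr
    return prev[-1]


def word_probabilities(corpus_text: str) -> dict:
    """Unigram word probabilities from a reference corpus (stand-in for the Reuters model)."""
    counts = Counter(re.findall(r"[a-z]+", corpus_text.lower()))
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


def is_rare(username: str, probs: dict, threshold: float = 0.01) -> bool:
    """Rare if no alphabetic token of the username has probability above `threshold`."""
    tokens = re.findall(r"[a-z]+", username.lower())
    highest = max((probs.get(t, 0.0) for t in tokens), default=0.0)
    return highest <= threshold


def likely_returning(new_name: str, old_names, probs: dict, max_distance: int = 2):
    """Return the closest old username that a rare new username matches, else None.

    max_distance is a hypothetical cut-off; the paper does not state one.
    """
    if not is_rare(new_name, probs):
        return None
    candidates = [(levenshtein(new_name.lower(), old.lower()), old) for old in old_names]
    candidates = [c for c in candidates if c[0] <= max_distance]
    return min(candidates)[1] if candidates else None


probs = word_probabilities("the cat sat on the mat the dog")  # toy corpus
print(likely_returning("zorblax99", ["zorblax98", "other"], probs))  # zorblax98
```

Requiring rarity before accepting a close match guards against the false positives the text warns about, where common handles are picked independently by different people.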

We analyse the behaviour of those active after the disruption, namely the ‘core survivors’ (2 529, returning actors included), ‘casual survivors’ (6 915, returning actors included), and ‘newcomers’ (1 488). Around half of core users (49.02%) remained engaged, while only 13.19% of casual users stayed (86.95% left). On average, before the disruption, each core survivor posted 22.3 times as much as each casual survivor (1800.03 vs 80.82 posts), while their active period, between their first and last post, was around 2.5 times longer (1306.94 vs 516.84 days).
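The cohort assignment can be sketched as set operations: a post-disruption poster whose username (or matched old username) was a core or casual user before the disruption is a survivor of that group, and anyone else is a newcomer. This is a minimal illustration; the `returning_map` of matched usernames and the function names are hypothetical.

```python
def cohorts(core, casual, post_disruption_posters, returning_map=None):
    """Split post-disruption posters into core survivors, casual survivors and newcomers.

    returning_map (hypothetical) maps new usernames to the old usernames they were
    matched to, so returning actors count towards their original group.
    """
    returning_map = returning_map or {}
    core_survivors, casual_survivors, newcomers = set(), set(), set()
    for user in post_disruption_posters:
        old = returning_map.get(user, user)
        if old in core:
            core_survivors.add(old)
        elif old in casual:
            casual_survivors.add(old)
        else:
            newcomers.add(user)
    return core_survivors, casual_survivors, newcomers


def retention(survivors, population):
    """Fraction of a pre-disruption group still posting afterwards."""
    return len(survivors) / len(population)


# Toy example with hypothetical usernames.
core, casual = {"a", "b"}, {"c", "d", "e", "f"}
cs, cas, new = cohorts(core, casual, {"a", "c", "x", "zorblax99"}, {"zorblax99": "d"})
print(sorted(cs), sorted(cas), sorted(new))  # ['a'] ['c', 'd'] ['x']
print(retention(cs, core))  # 0.5
```

Applied to the figures above, 2 529 core survivors out of 5 159 core users gives 2529 / 5159 ≈ 0.4902, the 49.02% reported.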

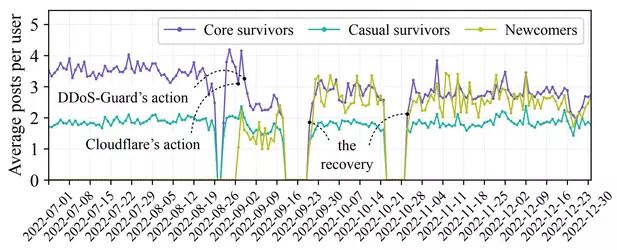

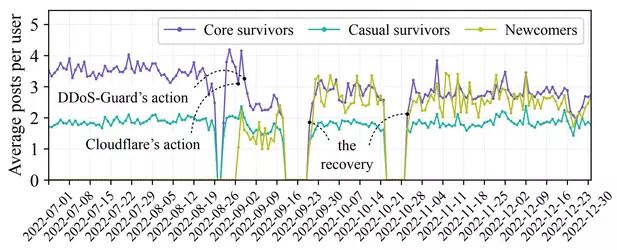

Figure 8: Number of average posts per day of survivors and newcomers, who posted at least once after the disruption.


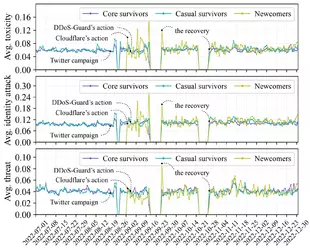

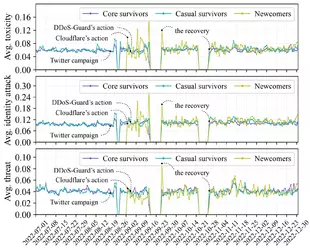

Figure 9: Average toxicity, identity attack, and threat levels of posts made by survivors and newcomers after the event.


Posting Activity

Before the takedown, each core survivor made about 3.5 posts per day on average, and around 3 afterwards (see Figure 8). The activity of casual survivors appears consistent with the pre-disruption period: their average was around 2 posts per day before the incident and almost unchanged afterwards. These figures suggest that the decrease in posting volume seen in Figure 4 was mainly due to users leaving the forum rather than surviving users losing interest; survivors re-engaged quickly after the forum recovered. Newcomers posted slightly less than casual survivors (fewer than 2 posts per day) before the forum went completely down on 18 September 2022, yet their average posting volume then increased quickly. This suggests that the disruption, besides removing a very large proportion of old casual users, drew in many new users who then became roughly as active as the core survivors.