The viral AI avatar app Lensa undressed me—without my consent

My avatars were cartoonishly pornified, while my male colleagues got to be astronauts, explorers, and inventors.


archived 13 Dec 2022 07:43:00 UTC

By Melissa Heikkilä

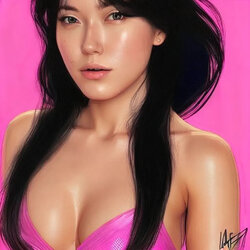

MELISSA HEIKKILÄ VIA LENSA

When I tried the new viral AI avatar app Lensa, I was hoping to get results similar to those of some of my colleagues at MIT Technology Review. The digital retouching app was first launched in 2018 but has recently become wildly popular thanks to the addition of Magic Avatars, an AI-powered feature that generates digital portraits of people based on their selfies.

But while Lensa generated realistic yet flattering avatars for them (think astronauts, fierce warriors, and cool cover photos for electronic music albums), I got tons of nudes. Out of 100 avatars I generated, 16 were topless, and another 14 put me in extremely skimpy clothes and overtly sexualized poses.

I have Asian heritage, and that seems to be the only thing the AI model picked up on from my selfies. I got images of generic Asian women clearly modeled on anime or video-game characters. Or most likely porn, considering the sizable chunk of my avatars that were nude or showed a lot of skin. A couple of my avatars appeared to be crying. My white female colleague got significantly fewer sexualized images, with only a couple of nudes and hints of cleavage. Another colleague with Chinese heritage got results similar to mine: reams and reams of pornified avatars.

Lensa’s fetish for Asian women is so strong that I got female nudes and sexualized poses even when I directed the app to generate avatars of me as a male.

MELISSA HEIKKILÄ VIA LENSA

The fact that my results are so hypersexualized isn’t surprising, says Aylin Caliskan, an assistant professor at the University of Washington who studies biases and representation in AI systems.

Lensa generates its avatars using Stable Diffusion, an open-source AI model that generates images based on text prompts. Stable Diffusion is built using LAION-5B, a massive open-source data set that has been compiled by scraping images off the internet.

And because the internet is overflowing with images of naked or barely dressed women, and pictures reflecting sexist, racist stereotypes, the data set is also skewed toward these kinds of images.

This leads to AI models that sexualize women regardless of whether they want to be depicted that way, Caliskan says—especially women with identities that have been historically disadvantaged.

AI training data is filled with racist stereotypes, pornography, and explicit images of rape, researchers Abeba Birhane, Vinay Uday Prabhu, and Emmanuel Kahembwe found after analyzing a data set similar to the one used to build Stable Diffusion. It’s notable that their findings were only possible because the LAION data set is open source. Most other popular image-making AIs, such as Google’s Imagen and OpenAI’s DALL-E, are not open but are built in a similar way, using similar sorts of training data, which suggests that this is a sector-wide problem.

As I reported in September when the first version of Stable Diffusion had just been launched, searching the model’s data set for keywords such as “Asian” brought back almost exclusively porn.

Stability.AI, the company that developed Stable Diffusion, launched a new version of the AI model in late November. A spokesperson says the original model was released with a safety filter that would have removed these outputs, but Lensa does not appear to have used it. One way Stable Diffusion 2.0 filters content is by removing images that are repeated often. This matters because the more often something is repeated in the training data, such as Asian women in sexually graphic scenes, the stronger the association becomes in the AI model.

Caliskan has studied CLIP (Contrastive Language Image Pretraining), which is a system that helps Stable Diffusion generate images. CLIP learns to match images in a data set to descriptive text prompts. Caliskan found that it was full of problematic gender and racial biases.

“Women are associated with sexual content, whereas men are associated with professional, career-related content in any important domain such as medicine, science, business, and so on,” Caliskan says.

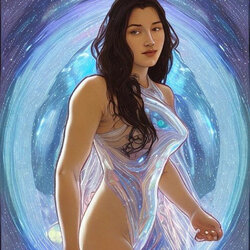

Funnily enough, my Lensa avatars were more realistic when my pictures went through male content filters. I got avatars of myself wearing clothes (!) and in neutral poses. In several images, I was wearing a white coat that appeared to belong to either a chef or a doctor.

But it’s not just the training data that is to blame. The companies developing these models and apps make active choices about how they use the data, says Ryan Steed, a PhD student at Carnegie Mellon University, who has studied biases in image-generation algorithms.

“Someone has to choose the training data, decide to build the model, decide to take certain steps to mitigate those biases or not,” he says.

The app’s developers have chosen to let male avatars appear in space suits, while female avatars get cosmic G-strings and fairy wings.

A spokesperson for Prisma Labs says that “sporadic sexualization” of photos happens to people of all genders, but in different ways.

The company says that because Stable Diffusion is trained on unfiltered data from across the internet, neither they nor Stability.AI, the company behind Stable Diffusion, “could consciously apply any representation biases or intentionally integrate conventional beauty elements.”

“The man-made, unfiltered online data introduced the model to the existing biases of humankind,” the spokesperson says.

Despite that, the company claims it is working to address the problem.

In a blog post, Prisma Labs says it has adjusted the relationship between certain words and images in a way that aims to reduce biases, but the spokesperson did not go into more detail. Stability.AI has also made it harder to generate graphic content with Stable Diffusion, and the creators of the LAION database have introduced NSFW filters.

Lensa is the first hugely popular app to be developed from Stable Diffusion, and it won’t be the last. It might seem fun and innocent, but there’s nothing stopping people from using it to generate nonconsensual nude images of women based on their social media images, or to create naked images of children. The stereotypes and biases it’s helping to further embed can also be hugely detrimental to how women and girls see themselves and how others see them, Caliskan says.

“In 1,000 years, when we look back as we are generating the thumbprint of our society and culture right now through these images, is this how we want to see women?” she says.
