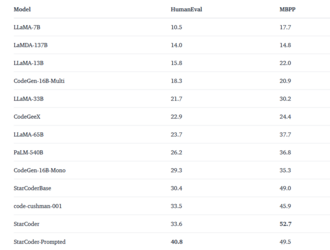

> I'm still using a 1060 6GB so not much, it seems that these chatbots require an insane amount of VRAM for some reason.

Running a local coding model on a 1060 will be very difficult. It's not impossible, but expect token output of around 0.25 tokens/second, and to load the model fully you will have to cut the context length in half (from 2048 to 1024 tokens), which will severely limit the model's output. Depending on what CPU you have available, you could use a GGML model (which runs mostly on the CPU and system RAM rather than in VRAM) to load a 7B model. The best local model I've had experience with for programming-related tasks is StarCoder. It seems to score the highest on most tasks compared to other models on Hugging Face.
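To put rough numbers on the VRAM problem, here is a back-of-the-envelope sketch. It only counts the weights (parameters times bits per weight); the context cache and activations come on top, and real quantized files carry a bit of extra overhead, so treat the output as a lower bound. The function names are mine, not from any library.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Ignores context cache, activations, and quantization overhead,
    so the real footprint will be somewhat higher.
    """
    return n_params * bits_per_weight / 8 / 1e9


def generation_seconds(n_tokens: int, tokens_per_second: float) -> float:
    """Time needed to produce n_tokens at a given generation speed."""
    return n_tokens / tokens_per_second


if __name__ == "__main__":
    # A 7B model at common precisions, compared against 6 GB of VRAM.
    for bits in (16, 8, 4):
        gb = weight_memory_gb(7e9, bits)
        print(f"7B model at {bits}-bit: ~{gb:.1f} GB of weights")

    # What 0.25 tokens/second means for a 200-token answer.
    minutes = generation_seconds(200, 0.25) / 60
    print(f"200 tokens at 0.25 tok/s: ~{minutes:.0f} minutes")
```

At 16-bit the weights of a 7B model alone are well over what a 6 GB card holds, which is why a quantized model is the realistic option here.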

I would try this first:

1. Download and install text-generation-webui - a frontend for running LLMs

2. Update the webui to the latest version (follow the instructions on the GitHub repo for your OS)

3. Look for a quantized 7B GGML model - this HF account has a lot of them in different formats https://huggingface.co/TheBloke - I suggest this one: https://huggingface.co/TheBloke/WizardLM-Uncensored-Falcon-7B-GGML

4. Download the model and then run it to see what type of results you get with your hardware.
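If you want an actual number for step 4 rather than a gut feeling, something like this small timing helper could work. It is only a sketch: `generate` is a hypothetical stand-in for whatever produces tokens on your setup (it is not a text-generation-webui API), and `fake_generate` exists purely so the example runs on its own.

```python
import time
from typing import Callable, Iterable


def tokens_per_second(generate: Callable[[str], Iterable[str]], prompt: str) -> float:
    """Time a token-producing callable and return its throughput."""
    start = time.perf_counter()
    n_tokens = sum(1 for _ in generate(prompt))  # consume every token
    elapsed = time.perf_counter() - start
    return n_tokens / elapsed if elapsed > 0 else float("inf")


def fake_generate(prompt: str) -> Iterable[str]:
    """Hypothetical stand-in: echoes the prompt word by word with a small delay."""
    for word in prompt.split():
        time.sleep(0.01)  # pretend each token takes 10 ms
        yield word


if __name__ == "__main__":
    rate = tokens_per_second(fake_generate, "write a function that reverses a list")
    print(f"{rate:.1f} tokens/second")
```

Swap `fake_generate` for a wrapper around your real model and compare the result against the roughly 0.25 tokens/second mentioned above.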