> If heterogeneous architectures are so great, explain why servers aren’t using them. Datacentres are all about cutting down on energy use; it’s almost as important to them as it is to cellphones, where these architectures have long been well established.

There is datacenter heterogeneity, but it's at the node level, not the chip level. You just spin down the entire node when it's not busy.

They are also very different use cases. The typical desktop/laptop use case is a single user with a single CPU running lots of different applications, often with many of them open in the background. Many datacenter use cases are a single application running in hundreds or thousands of homogeneous instances. Heterogeneity at the chip level doesn't really buy me anything when every single core is being used by SAP HANA or Ansys Fluent.

Also, in the server space, if I need more compute power, I buy more nodes. E-cores aren't just about saving energy; they're also about economizing on die space. An 8E+8P config can do a lot more overall work than a 10P config that takes up roughly the same die area.
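A back-of-the-envelope sketch of the die-space argument. It assumes a four-E-core cluster takes about the area of one P-core (roughly what Intel claimed for Alder Lake) and, as a purely hypothetical figure, that one E-core delivers half a P-core's multithreaded throughput. Real ratios vary by workload and chip generation.

```python
# Back-of-the-envelope comparison of a hybrid vs an all-P-core configuration.
# Both ratios below are assumptions for illustration, not measured values.
E_CORE_AREA = 0.25        # area of one E-core relative to one P-core (assumed)
E_CORE_THROUGHPUT = 0.5   # multithreaded throughput of one E-core vs one P-core (hypothetical)


def area(p_cores: int, e_cores: int) -> float:
    """Die area of the cores, in units of one P-core."""
    return p_cores + e_cores * E_CORE_AREA


def throughput(p_cores: int, e_cores: int) -> float:
    """Aggregate multithreaded throughput, in units of one P-core."""
    return p_cores + e_cores * E_CORE_THROUGHPUT


for name, p, e in [("8E+8P", 8, 8), ("10P", 10, 0)]:
    print(f"{name}: area={area(p, e):.1f}, throughput={throughput(p, e):.1f}")
```

Under these assumptions both configs use 10 P-core units of area, but the hybrid one delivers 12 units of throughput versus 10. The hybrid layout keeps winning as long as an E-core does more than a quarter of a P-core's work, since that is its share of the area.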

> That leaves it up to Intel to find algorithmic ways to assign cores, which demonstrably doesn’t work very well even after years of development.

Disagree: everyone's been pretty successful at deprioritizing background tasks to E-cores. The one thing they're not very good at is homogeneous workloads that simply count the CPU's hardware threads and blindly spawn that many software threads, but the programming guides for all these chips, not just Intel's, tell you not to do that.
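The thread-count pitfall can be illustrated with a toy scheduling model. This is a sketch, not any vendor's guidance verbatim: core speeds are hypothetical (an E-core is assumed to do half a P-core's work per second), and the "dynamic" strategy stands in for the general approach of splitting work into many small tasks pulled from a shared queue instead of one fixed slice per hardware thread.

```python
# Toy model: equal static split per hardware thread vs small chunks from a shared queue.
# Core speeds are hypothetical: P-core = 1.0, E-core = 0.5 work units per second.
import heapq

CORE_SPEEDS = [1.0] * 8 + [0.5] * 8   # 8 P-cores + 8 E-cores (assumed)
TOTAL_WORK = 1200.0                    # arbitrary units of work


def static_split(speeds, total):
    """Each core gets an equal share; the job ends when the slowest core finishes."""
    share = total / len(speeds)
    return max(share / s for s in speeds)


def dynamic_chunks(speeds, total, chunk):
    """Cores pull fixed-size chunks from a shared queue whenever they become free."""
    # Min-heap of (time the core becomes free, core index).
    free_at = [(0.0, i) for i in range(len(speeds))]
    heapq.heapify(free_at)
    remaining = total
    finish = 0.0
    while remaining > 0:
        t, i = heapq.heappop(free_at)     # earliest-free core grabs the next chunk
        work = min(chunk, remaining)
        remaining -= work
        done = t + work / speeds[i]
        finish = max(finish, done)
        heapq.heappush(free_at, (done, i))
    return finish


print(f"static:  {static_split(CORE_SPEEDS, TOTAL_WORK):.1f} s")
print(f"dynamic: {dynamic_chunks(CORE_SPEEDS, TOTAL_WORK, chunk=5.0):.1f} s")
```

With the equal static split, the P-cores sit idle while the E-cores finish their slices, so the job takes 150 s in this model. With small dynamically pulled chunks it finishes in 100 s, which is the ideal for an aggregate speed of 12 units per second.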

> And while MacBooks are brilliant with Apple Silicon, there’s no indication that’s because the architecture is heterogeneous.

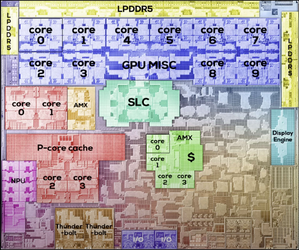

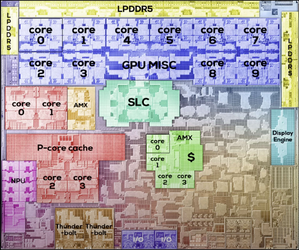

This is an M3 die map. Four E-cores consume a lot less power than four P-cores: there are simply fewer transistors to keep powered, and they're clocked lower as well. It really does save quite a bit of energy to move background tasks to small cores, which is why everyone does it now.
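Both effects, fewer transistors and lower clocks, show up in the textbook CMOS dynamic-power relation P ≈ αCV²f. Fewer transistors means less switched capacitance C, and a lower clock also permits a lower supply voltage V, which enters squared. The sketch below uses purely hypothetical numbers, not Apple's figures, and the `Core` class is made up for illustration.

```python
# Rough CMOS dynamic-power model: P = activity * C * V^2 * f.
# Every number below is a hypothetical placeholder, not a measured M3 value.
from dataclasses import dataclass


@dataclass
class Core:
    name: str
    capacitance: float  # switched capacitance relative to a P-core (fewer transistors -> smaller)
    voltage: float      # supply voltage in volts
    freq_ghz: float     # clock frequency in GHz

    def power(self, activity: float = 0.2) -> float:
        """Dynamic switching power in arbitrary units."""
        return activity * self.capacitance * self.voltage ** 2 * self.freq_ghz

    def energy_per_task(self, gcycles: float, activity: float = 0.2) -> float:
        """Energy for a task needing `gcycles` billion cycles: power * runtime."""
        return self.power(activity) * gcycles / self.freq_ghz


p = Core("P-core", capacitance=1.0, voltage=1.0, freq_ghz=4.0)
e = Core("E-core", capacitance=0.25, voltage=0.7, freq_ghz=2.5)

for core in (p, e):
    print(f"{core.name}: power={core.power():.3f}, energy/task={core.energy_per_task(gcycles=1.0):.4f}")
```

The model ignores leakage power and assumes the task needs the same number of cycles on both cores. In practice an E-core usually needs more cycles for the same work, which narrows the energy gap without closing it.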

> That's why we got a "Raptor Lake Refresh" that did nothing but shuffle around the allocation of E-cores.

Raptor Lake Refresh exists solely because Intel 4 had yield issues that couldn't be fixed in time to have a desktop version of Meteor Lake. It isn't the culmination of a secret plot hatched in 2018 to trick you.