> AMD could price the 9060XT at $420

Seems pretty high for something that will land somewhere between a 7600 XT 16 GB and a 7700 XT 12 GB, but not unlikely.

> So I take it that the 4070 super is now the new baseline for a system, since AMD basically made a clone?

Could be, but I think the conversation is going to be about VRAM. AMD gave 16 GB to their "clone", and probably will to the 9060 XT too (I don't trust EEC filing "leaks", but it's almost guaranteed to be the 7600 XT successor and match its VRAM). On Nvidia's side, there will be the 128-bit 4060 Ti 16 GB, and it will be pretty funny if/when that outperforms the 5070.

NVIDIA’s GeForce RTX 4090 With 96GB VRAM Reportedly Exists; The GPU May Enter Mass Production Soon, Targeting AI Workloads - AFAICT this is a Chinese souped-up version of the card, and the 4 GB GDDR6X modules it would need don't appear to exist. The 48 GB version can happen.
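For anyone who wants to check the module math on the 96 GB claim, here is a rough sketch. It assumes the 4090's 384-bit bus, 32-bit GDDR6X chips, and a clamshell layout (two chips sharing each 32-bit channel). The function names are made up for illustration.

```python
def chips_on_bus(bus_width_bits, chip_width_bits=32, clamshell=False):
    """Number of memory chips a bus can host.

    Assumes each chip normally occupies one 32-bit channel; in clamshell
    mode two chips share a channel, doubling the chip count.
    """
    chips = bus_width_bits // chip_width_bits
    return chips * 2 if clamshell else chips


def required_chip_gb(total_gb, bus_width_bits, clamshell=True):
    """Per-chip capacity (GB) needed to reach total_gb on the given bus."""
    return total_gb / chips_on_bus(bus_width_bits, clamshell=clamshell)


if __name__ == "__main__":
    # 384-bit bus, clamshell: 24 chips in total.
    for total in (48, 96):
        per_chip = required_chip_gb(total, 384)
        print(f"{total} GB needs {per_chip:g} GB per chip")
```

Under those assumptions the 48 GB card works out to 2 GB chips, while 96 GB needs 4 GB chips.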

Intel’s Panther Lake SoCs Are Rumored To Be Delayed To Mid-Q4 2025; 18A Process Likely To Be The Culprit

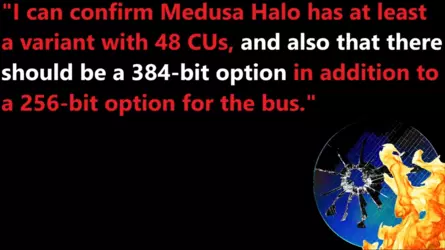

MLID snuck a Medusa Halo leak in his latest podcast:

If they really go to 384-bit, probably requiring a special I/O die, I assume they are going to have to cram 12 memory chips into designs using it. And that would get you to 192 GB at a minimum.
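Quick back-of-the-envelope version of that, as a sketch only. It assumes 32-bit-wide memory packages and a hypothetical 16 GB per package; neither number comes from the leak, and the helper names are just for illustration.

```python
def package_count(bus_width_bits, package_width_bits=32):
    """How many memory packages are needed to fill the bus.

    Assumes every package exposes the same interface width (32 bits here).
    """
    return bus_width_bits // package_width_bits


def total_capacity_gb(bus_width_bits, package_gb, package_width_bits=32):
    """Total memory when every package on the bus has the same capacity."""
    return package_count(bus_width_bits, package_width_bits) * package_gb


if __name__ == "__main__":
    # 16 GB per package is an assumed figure, not something from the leak.
    for bus in (256, 384):
        n = package_count(bus)
        print(f"{bus}-bit: {n} packages, {total_capacity_gb(bus, 16)} GB")
```

With those assumptions a 384-bit design needs 12 packages and lands at 192 GB, versus 8 packages and 128 GB on a 256-bit one.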