Recently I wanted to try archiving some furries' twitter timelines, as well as their followers and following lists. I went looking around online for tools that would help with this, but Google was interested only in showing me shady shit with price tags upwards of $40 per use. These tools also require you to have a Twitter account and access to Twitter's developer interface, which requires an application and has an approval process, and fuck that noise.

So, I went open source. And lo and behold, I got things to work properly. I'm happy to share with you a guide on how to go full NSA on somebody's public twitter feed, without even needing to be logged into a twitter account. This also will work on any operating system that can support Python, so basically any operating system not developed by a lolcow.

DISCLAIMER: I am not a programmer. This guide covers use of coding tools that I barely know how to use, let alone how to use safely. I am literally a script kiddie playing with scripts. Fuck around with these tools at your own risk.

We'll be covering how to use Twint, an open-source Twitter scraping tool coded in Python. The developers are working on a desktop app of this, but for now, you need to have Python installed in order to run it.

You can download Python

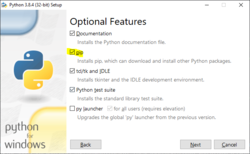

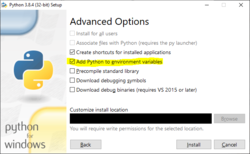

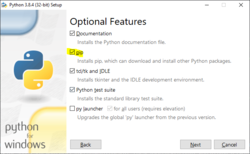

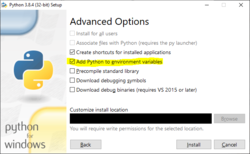

here. If you're installing it on Windows, you'll want to make sure that these two options are checked during install:

The first, pip, lets you download and install Python shit with a single command. The second lets you run Python shit from the command line. Get Python installed, then open up a command line interface (cmd if you're neurotypical; PowerShell, bash, or god knows what else otherwise) and type in the following command:

If you see a series of messages relating to downloading and installing shit from the internet and System32 doesn't vanish, you've done it right. Wait for the gears to stop whirring, then use the command prompt to navigate to a folder you know how to find again, such as Downloads if you're a heathen who saves everything to Downloads. Then, refer to the documentation in Twint's GitHub wiki to build a command to harvest the Twitter account of your choice. As a note, this doesn't work on protected Twitters, so you won't be able to trawl somebody's AD timeline. What a shame.

Here's some examples of commands you can use:

Code:

twint -u khordkitty -o khord_tweets.csv --csv

This command will pull everything down from KhordKitty's Twitter timeline and save it to a comma-separated values (CSV) file. CSV files are wonderful contraptions that can be opened in Excel or another spreadsheet editor, where you can run all manner of analytics on them. See attached for a sample of the results!
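If you'd rather poke at the CSV with Python instead of a spreadsheet, here's a minimal sketch that counts tweets per month. Big assumptions: I'm guessing the export has a `date` column formatted like `2020-01-31`, and `tweets_per_month` is a name I made up for this example, not anything that ships with Twint. The sample data is fake so the snippet runs on its own.

```python
import csv
import io
from collections import Counter


def tweets_per_month(csv_file):
    """Count rows per YYYY-MM, reading from an already-open CSV file object."""
    counts = Counter()
    for row in csv.DictReader(csv_file):
        # ASSUMPTION: there is a "date" column that starts with YYYY-MM
        counts[row["date"][:7]] += 1
    return counts


# Tiny made-up sample standing in for a real export
sample = io.StringIO(
    "date,tweet\n"
    "2020-01-05,first\n"
    "2020-01-20,second\n"
    "2020-02-01,third\n"
)

result = tweets_per_month(sample)
for month, n in sorted(result.items()):
    print(month, n)
```

To run it on a real file, swap the sample for something like `open("khord_tweets.csv", encoding="utf8", newline="")`, and check the header row first to see what the columns are actually called.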

Code:

twint -u khordkitty --followers -o khord_followers.csv --csv

This command will rip somebody's follower list from start to finish, saving every follower's username as a nice list.

Code:

twint -u khordkitty --followers --user-full -o khord_followers.csv --csv

This command will rip somebody's follower list from start to finish, saving every follower's name, username, bio, location, join date, and various other shit as a nice list.

WARNING: It also takes a lot fuckin longer to work.

Code:

twint -u khordkitty --following -o khord_following.csv --csv

Same as the command to rip a follower list, except that this time, it collects the list of everybody they're following instead so you can see how many AD twitters they're jerking off to. The --user-full argument works here too, with the same caveat about taking longer.

That's just a few of the wonderful things you can do with twint. However, as I have learned, twint is not without its limitations. One such limitation I have observed is that Twitter does not like being scraped and will stop responding to scrapes after 14,400 tweets in succession have been scraped. Once Twitter so lashes out at you, it'll take a few minutes before Twint starts working again.

Twint has some workarounds for this, such as allowing you to use the --year argument to only pull tweets from before a given year, but it's still annoying as hell. I'll have to experiment further with it. Also, as you may have guessed, this only grabs the text of tweets, including image URLs, so if you really want to go full archivist on somebody you'll need some additional code to download the images themselves. Thankfully, @Warecton565 is to the rescue, with some code in a wonderful post. The code is not perfect; I did have to make a bit of a change just to get it to work at all:

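Python:

import csv
import json
import os
from sys import argv

import requests

# Usage: python script.py tweets.csv output_folder
# argv[1] is the CSV that Twint wrote; argv[2] is a folder that must already exist.
with open(argv[1], encoding="utf8", newline="") as csv_file:
    for tweet in csv.DictReader(csv_file):
        # The "photos" column holds a list written with single quotes;
        # swap them for double quotes so json can parse it.
        pics = json.loads(tweet["photos"].replace("'", '"'))
        for pic in pics:
            r = requests.get(pic)
            # Name the file after whatever follows "/media/" in the URL.
            out_path = os.path.join(argv[2], pic.split("/media/")[1])
            with open(out_path, "wb") as out_file:
                out_file.write(r.content)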

And I had to make the folder it was going to output to first and run the damn thing in IDLE before it would do anything. However, once you get it working, you can wind up with a folder containing hundreds of furry porn images and fursuit photos in mere minutes. Maybe even a photo of a dong or two.
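If the script chokes on a row, it helps to test the parsing step on its own without downloading anything. Here's a rough sketch of just that part. `photo_filenames` is a hypothetical helper I named for this example, the URLs are made up, and skipping URLs with no `/media/` in them is my own guess at sensible behavior rather than something the original code does.

```python
import json


def photo_filenames(photos_cell):
    """Turn one "photos" cell into a list of (url, filename) pairs."""
    # Same trick as the script: swap single quotes for double quotes
    # so the cell parses as a JSON list.
    urls = json.loads(photos_cell.replace("'", '"'))
    pairs = []
    for url in urls:
        # ASSUMPTION: skip URLs without "/media/" instead of crashing
        # with an IndexError on the split.
        if "/media/" in url:
            pairs.append((url, url.split("/media/")[1]))
    return pairs


# Made-up cell contents for illustration
example = "['https://pbs.twimg.com/media/ABC123.jpg', 'https://example.com/other.png']"
print(photo_filenames(example))
```

An empty cell that reads `[]` just gives you back an empty list, so tweets with no pictures pass through without any fuss.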

Have fun!

If you have anything you would like added to this post, please let me know via PM or some other means. Again, I'm just a fucking script kiddie.