Joined: Aug 28, 2019

AMD’s Ryzen Threadripper 9000 “Shimada Peak” 64-Core & 32-Core SKUs Spotted In Shipping Manifests

For those who care about Threadripper: 16/32/64/96-core Zen 5 Threadripper may be coming (eventually).

AMD’s Ryzen “Zen 6” CPUs & Radeon “UDNA” GPUs To Utilize N3E Process, High-End Gaming GPUs & 3D Stacking For Next-Gen Halo & Console APUs Expected (archive)

China leaker goes hard. N3E for the Zen 6 CCD was already expected, and would help it get to the previously rumored 12 cores instead of 8 cores (Zen 5 CCD uses N4X). Zen 4 and Zen 5 use the same TSMC N6 I/O die, so moving that to N4C would allow memory controller improvements, probably a newer iGPU and maybe an XDNA1/2 NPU. The N4C node is TSMC's "budget" version of the N5/N4 family, so it's a direct replacement for N6.

UDNA1 desktop GPUs will use N3E, and a flagship is back on the table. That can always be cancelled, so don't count on it.

Next-gen Strix Halo (Medusa Halo) could use 3D stacking for CPU and GPU. This probably means 3D V-Cache and 3D Infinity Cache, but could refer to some new packaging technique. PS6 will use 3D stacking while Microsoft is "not sure yet", which tracks with them being less certain about Xbox's future.

Intel Arc B570 “Battlemage” Graphics Cards Review Roundup

Tom's Hardware: Intel Arc B570 review featuring the ASRock Challenger OC: A decent budget option with a few deep cuts

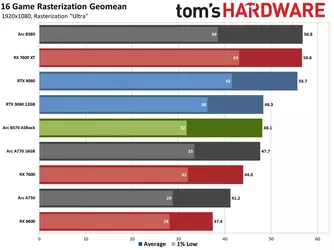

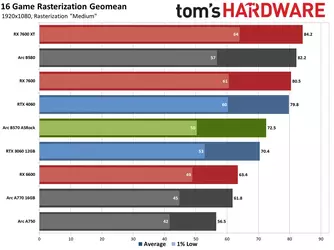

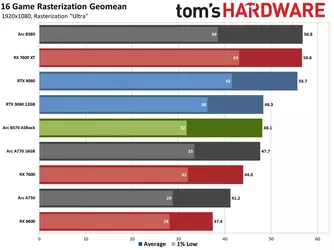

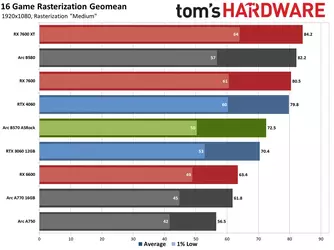

$249 B580 MSRP to $219 B570 MSRP is a 12% price cut, but you lose ~17% VRAM (-2 GB). What about the performance? Raster: -15% (1080p Ultra), -12% (1080p Medium), -18% (1440p Ultra).
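
Here is a quick check of those percentages, plus a rough performance-per-dollar comparison. This is a minimal Python sketch. It uses the MSRPs quoted above, the cards' VRAM sizes (12 GB for the B580, 10 GB for the B570), and the ~15% raster deficit at 1080p Ultra. The perf-per-dollar figure is my own back-of-the-envelope ratio, not something from the review.

```python
def pct_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent (negative = decrease)."""
    return (new - old) / old * 100

# MSRPs in USD and VRAM in GB for the Arc B580 and B570
b580_price, b570_price = 249, 219
b580_vram, b570_vram = 12, 10

price_change = pct_change(b580_price, b570_price)
vram_change = pct_change(b580_vram, b570_vram)

# Relative raster performance at 1080p Ultra (B570 ~15% slower than B580)
perf_ratio = 1 - 0.15
price_ratio = b570_price / b580_price

print(f"Price: {price_change:.1f}%")
print(f"VRAM:  {vram_change:.1f}%")
print(f"Perf per dollar vs B580: {perf_ratio / price_ratio:.2f}x")
```

This prints -12.0% for price, -16.7% for VRAM, and about 0.97x perf per dollar. In other words, at 1080p Ultra the B570 is slightly worse value than the B580 at MSRP.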

The B570 performs very similarly to the RTX 3060 in this review. It's 9.3% faster than the RX 7600 non-XT at 1080p Ultra, but 10% slower at 1080p Medium. At 1080p Ultra the RX 7600 XT (16 GB) is also 28.6% faster than the RX 7600 non-XT (8 GB), which shows the benefit of sufficient VRAM.

The 1% lows aren't great for the B570/B580 in these geomean charts either.
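
For anyone unfamiliar with "geomean" charts: they combine per-game results with a geometric mean rather than a simple average. A geometric mean is less swayed by one unusually high-FPS title. Below is a minimal Python sketch of the calculation. The FPS values are hypothetical and only illustrate the math. They are not taken from the review.

```python
import math

def geomean(values):
    """Geometric mean: the n-th root of the product, computed via logs."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geomean needs a non-empty list of positive numbers")
    return math.exp(sum(math.log(v) for v in values) / len(values))

# Hypothetical per-game 1% low FPS values (illustrative only)
one_percent_lows = [45.0, 60.0, 80.0]

print(f"Geomean:    {geomean(one_percent_lows):.1f} fps")
print(f"Arithmetic: {sum(one_percent_lows) / len(one_percent_lows):.1f} fps")
```

For these made-up numbers the geomean is 60.0 fps and the arithmetic mean is 61.7 fps. The gap widens as the spread between games grows.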

The B570 price should have been $200, but if the B580 remains unobtainium, I could see grabbing it instead. But it's no better than an RTX 3060, and even 8 GB cards are fine if you lower settings.

Samsung teases next-gen 27-inch QD-OLED displays with 5K resolution