- Joined

- Nov 25, 2018

Homelab & Selfhosting Thread - One Day it will be a Home Datacenter, you just gotta believe!

- Thread starter CryoRevival #SJ-112


- Joined

- Jan 3, 2024

- Joined

- Nov 25, 2018

> What are the xeons for

I had 5650's but 5690's are cheap as duck now

- Joined

- Jan 3, 2024

> I had 5650's but 5690's are cheap as duck now

But what is the purpose

- Joined

- Nov 25, 2018

> But what is the purpose

Snappy docker web interfaces

- Joined

- Sep 21, 2020

My setup from top to bottom-

3x Dell r630s 256GB DDR4 Ea.

1x HP DL360 G9

1x Dell R720 (paper weight)

1x Dell EMC KTN STL3 disk bay

1x Netapp ds 4243 disk bay

I use it to run jellyfin.

Delta Integrale

kiwifarms.net

- Joined

- Aug 1, 2021

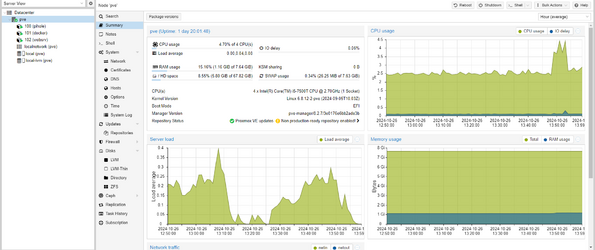

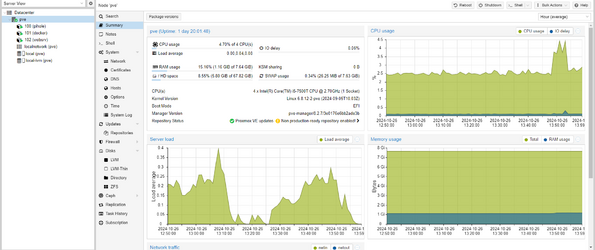

> My setup from top to bottom-
>
> 3x Dell r630s 256GB DDR4 Ea.
>
> 1x HP DL360 G9
>
> 1x Dell R720 (paper weight)
>
> 1x Dell EMC KTN STL3 disk bay
>
> 1x Netapp ds 4243 disk bay
>
> I use it to run jellyfin.

How much is your power bill with all that stuff running constantly?

- Joined

- Sep 21, 2020

> How much is your power bill with all that stuff running constantly?

I never run the R720 any more. It's simply too weak. I run everything else though.

I've put a lot of effort into underclocking, and I've gotten the rack down to a ~$90/mo power bill. Because of how cold it gets where I am, it only costs $75/mo in the winter when the heating cost goes down.

- Joined

- Nov 25, 2018

SchloppyI.T.Man

kiwifarms.net

- Joined

- Jul 1, 2024

Just got done tearing my hair out learning that if you mix and match Ethernet over powerline adapters and try to run VLAN access over that, it will more often than not break VLAN access.

But the good news in this is it gets me off my ass to actually wire my house for ethernet!

- Joined

- Aug 20, 2022

I built a new server for myself that hosts AI capabilities (basically Ollama and Stable Diffusion with OpenWebUI for now) but I realized I have no idea what to actually do with it.

Squishie PP

kiwifarms.net

- Joined

- May 19, 2023

> I built a new server for myself that hosts AI capabilities (basically Ollama and Stable Diffusion with OpenWebUI for now) but I realized I have no idea what to actually do with it.

The obvious answer is to make selfhosted AI waifus that will comfort you while you are lonely c:

- Joined

- Dec 17, 2019

I had a bit of flip-flopping in my setup. First, I had a Pi Zero hooked up to my router via USB for Pi-Hole and a Radicale CalDAV server. It worked fairly well, but it occupied the router's USB port, which is useful for hooking up my smartphone to get LTE network-wide in case my ISP fucks up. Also, because it presented itself as an LTE modem, it was very cumbersome to deal with on the network side.

After the n-th time I fucked it up and got tired of figuring out how to set it up from scratch to work via USB, and got tired of how fucked that ends up in the network topology, I pulled out the secondary PC with an i5-4460 and put Proxmox on it. I quickly found this to be a better solution, much easier to set up, configure, maintain and migrate, and also much faster and snappier. The two major downsides were that it was pulling 20W on idle with barely anything on it, and that the stock cooler I had to install in it was noisy as shit after >8 years of (ab)use.

I ended up grabbing one of these for the sake of running Proxmox.

i5-7500U, 8GB RAM, 256GB SSD, 7W on idle. Despite that, I still under-utilize the hardware, plus I have no idea what I'm doing.
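To put the 20W versus 7W idle figures in perspective, here is a rough sketch of the yearly energy arithmetic. It assumes the box idles around the clock, and `kwh_per_year` is a made-up helper name; the electricity price is left out since it isn't given here.

```shell
#!/usr/bin/env bash
# Rough yearly energy use for a machine idling 24/7.
# kwh_per_year is a hypothetical helper name, not part of any tool.
kwh_per_year() {
  local watts="$1"
  # watts * 24 hours * 365 days / 1000 = kWh per year
  awk -v w="$watts" 'BEGIN { printf "%.1f\n", w * 24 * 365 / 1000 }'
}

kwh_per_year 20   # old i5-4460 box at idle  -> 175.2
kwh_per_year 7    # mini PC at idle          -> 61.3
```

Multiply the difference by the local price per kWh to see what the smaller box saves in a year.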

All three LXC's are Debian 12 based. The Pi-Hole one obviously has Pi-Hole, as well as unbound for DNS resolving and hard set domain names for all the Proxmox stuff.

The "websrv" one only has Apache2 with LibreSpeed on it, pretty much barely used, I seldom use it to test local connection and WireGuard speeds.

The Docker one is the one I really have no idea about what I'm doing. I installed Portainer on it to have a configuration interface, but from what I understand it's much more than that and I don't know how to properly use Docker from the command line. It's running two things: Radicale and Gotify.

I'm not exactly happy with how it's set up. I have no idea about the intricate details of Proxmox, Docker or Portainer, I only ran those helper scripts to set it up and followed whichever tutorials on setting up Docker/Portainer. There is no backup system in place. I feel like the Docker/Portainer setup is excessive and could be solved better, but I don't know how. I tried installing NextCloud on it but it's even more complicated than I thought so I gave up on that. I also don't know what other useful self-hosted thingamajigs I could put on it. Plus, I don't know if I'm doing the right thing relying on LXC's or should I use full KVM's, and if so, when should I use an LXC and when should I use a KVM. Or what the fuck is up with Proxmox's storage management and where can I find what when I SSH into it. I guess I should also learn how to deal with VLAN's so that I can isolate all the services from my LAN, but I don't know shit about how they work or how to set them up under RouterOS. And chances are I'd need to get a managed switch instead of a dumb one like I have right now to figure that out.

Another thing I was thinking about was turning that i5-4460 PC into a NAS server. Thing is, I don't even know how I would get around to doing it. It still eats 20W on idle, I'd need to get some hard drives, and install some NAS software. I have, zero, fucking idea, about all of that. I understand that usually people go with TrueNAS and ZFS. I don't know if I should go with TrueNAS Core and use Proxmox for shit that could be useful like a torrent client, or TrueNAS Scale and use its virtualization features for that, not sure what would be the best simplicity/reliability tradeoff here. I understand that for the most part you should use TrueNAS for NAS and Proxmox for virtualization, and rely on them in tandem, and I assume I could then set up isolated NFS shares depending on whether or not I want some Proxmox service to have access to a specific directory, like a media library or torrent directory, or if I'd want to have an administrative share to everything when I want to manually access it.

I also have zero idea about how to deal with ZFS, mainly how to set it up so that I won't lose any data. Right now my "backup" system is something that just barely qualifies as one: a 4TB WD Blue drive I seldom plug into my PC to do a file sync of all drives. I have a 1TB OS SSD, 2TB data SSD and a 2TB data HDD, and I had to add some exclusions since the 4TB WD Blue was getting full. I was thinking of getting X drives for ZFS to have, idk, 6-8TB of usable storage, and then getting a 6-8TB HDD for a cold backup, kinda like I'm doing it right now, but I don't know how to calculate how many TB of those drives ZFS eats up for its magic, so that I neither overspend nor underbuy. Yes, I know this is far from a proper backup, but I don't really have a better option than to have a second copy of the data in the same room, which is still better than no backup. On the plus side, the cheapo case I'm using for that PC has an external 3.5" drive mount on top, which I relied on for those cold backups before getting a better ventilated case for my main guts. I would like to get into data encryption as well, but I'm anxious about locking myself out of all my data if I lose a key or fuck something up from my lack of knowledge, so for now I'd prefer to stay as close to raw data as possible.

Ideally I'd love for my PC to be free of mass storage like that, only have SSD's for OS, software and games, have all of it delegated to a NAS server, and have it either serve as a host for certain services if that's viable, or be available for Proxmox via NFS so that I can put actually useful shit like Jellyfin, qBitTorrent, -arr autodownloaders etc but can't right now as I keep all of my data on my PC that runs Windows and doesn't run 24/7. And of course have it set up in a way where I won't lose any data to a drive failure or a power surge, which in my case would be having a reliable offline cold copy lying somewhere in my room like I do it right now. No encryption or non-standard formats, just directly accessible raw data, plug it into any computer and have all the data right there.

In short, I require spoonfeeding in the following topics so that I know what to do next:

- how the fuck do I use Proxmox properly
- how the fuck do I use Docker CLI properly
- should I use Portainer, or pure Docker, or switch to something else for easy service setup
- what benefit would NextCloud give me and how to set it up right under Proxmox
- what the fuck is ZFS and how to set it up to not lose any data
- what drives should I buy for a NAS (dedicated/whatever, same models/mixed models, new/refurbs)
- how should I deal with raw cold backups with ZFS (no remote backups)
- how should I deal with drive encryption with minimal risk of locking myself out
- should I go with TrueNAS Core or Scale and how much should I do in Proxmox and how much in TrueNAS

Anime Lover

kiwifarms.net

- Joined

- Sep 24, 2024

My understanding as a retard that dabbled in it a bit:

> how the fuck do I use Docker CLI properly

Don't really know how Proxmox wraps shit around, but in my experience hosting sonarr etc. on my regular Linux PC, Docker Compose is what I liked most for setting the Docker side up, with lazydocker to inspect whether shit works or not.
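For a rough idea of what the Compose route looks like, here is a minimal sketch for a Gotify container like the one mentioned earlier in the thread. The folder `~/stacks/gotify`, the host port 8080 and the `./data` path are assumptions for illustration. `gotify/server` is the image the Gotify project publishes, and to the best of my knowledge it listens on port 80 and keeps its state in `/app/data`, but check its docs before relying on that.

```shell
#!/usr/bin/env bash
# Sketch: one folder per service, one compose.yaml inside it.
# STACK_DIR, host port 8080 and ./data are assumptions, adjust to taste.
STACK_DIR="${STACK_DIR:-$HOME/stacks/gotify}"
mkdir -p "$STACK_DIR"

cat > "$STACK_DIR/compose.yaml" <<'EOF'
services:
  gotify:
    image: gotify/server
    restart: unless-stopped
    ports:
      - "8080:80"          # host:container
    volumes:
      - ./data:/app/data   # keep state next to the compose file
EOF

echo "wrote $STACK_DIR/compose.yaml"

# Day-to-day commands, run from inside $STACK_DIR:
#   docker compose up -d            # create and start in the background
#   docker compose ps               # what is running
#   docker compose logs -f gotify   # follow the logs
#   docker compose pull && docker compose up -d   # update the image
#   docker compose down             # stop and remove the containers
```

With this layout the whole service is one folder (the compose file plus `./data`), so backing it up or moving it to another host is mostly a matter of copying that folder.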

> what the fuck is ZFS and how to set it up to not lose any data

ZFS is a very special file system that requires a bit more system resources but is the "industry standard" when it comes to reducing data loss. But it's very fucking specific in how it works, so you just have to dive deep to see what your system can support.

> how should I deal with raw cold backups with ZFS (no remote backups)

If data is important to you, storing it in 3 places with one of them being off-site is recommended. If you're just torrenting then who really cares.

> what drives should I buy for a NAS (dedicated/whatever, same models/mixed models, new/refurbs)

You're probably gonna run it in some kind of RAID for data redundancy, so ideally/for convenience you'd want to have them all be the same size (especially if they are HDDs). With ZFS you may also like to have an SSD for caching.

- Joined

- Dec 17, 2019

> Don't really know how Proxmox wraps shit around

It doesn't. Docker on Proxmox means you're setting up either an LXC or a KVM and installing Docker in that as if you were installing it on a computer with a bog standard distro. I guess in that regard TrueNAS Scale is better as it has Docker as a part of the main OS and its control panel, but in the case of Proxmox you gotta virtualize it. LazyDocker seems promising though. I wanted to learn Docker CLI to get away from dealing with Portainer, which to my understanding isn't just a GUI frontend for Docker, and I try to aim for simplicity nowadays, but this looks like it's what I actually wanted.

> But its very fucking specific with how it works so you just have to dive deep to see what your system can support

My understanding is that you hook up the drives in a special software RAID array where ZFS handles both the RAID and the file system part. My main worry is not hardware compatibility, but how to adapt to this concept from the standpoint of having a single unencrypted NTFS drive that you just plug in and see the data.

What if I want to move this array to another machine? Do I just replug the drives to another machine that supports ZFS, set them up as a ZFS array and the software just magically knows this is a ZFS array of this type, this size with this arrangement? Do I have to know the exact arrangement in which to add those drives, or any other information like that?

What if one drive dies, let's say in a RAID-Z2 array. Do I just remove that drive from the system, plug in a fresh one of the same size, and ZFS just magically knows to populate it with the data from the other drive and that's it? Would it be doable live on a consumer system with SATA hot swap?

My main worry is what I have to know about it to operate it, be able to handle it when it fucks up or when I want to migrate my data, and how to not fuck it all up. This whole "tying the file system and drive RAID together" concept has me worried that I'll have to know some very specific information and commands to not accidentally make the entire array unusable because I didn't give it the right drive order, or that I lose all of my data when I try to replace just one dead drive from the array.
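As a rough sketch of the two scenarios above: ZFS writes the pool layout into labels on every member disk, so importing on another machine does not depend on plug order, and a dead disk is swapped with a single replace command. The pool name `tank` and the device paths are hypothetical, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Sketch only: pool name and device paths below are hypothetical.
POOL="${POOL:-tank}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands instead of running them

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Moving the pool: export on the old box, import on the new one.
# ZFS finds the member disks by their on-disk labels, not by port order.
move_out() { run zpool export "$POOL"; }
move_in()  { run zpool import "$POOL"; }

# Replacing a failed disk: $1 = old device, $2 = new device.
# The new disk gets rebuilt (resilvered) from the remaining ones.
replace_disk() {
  run zpool replace "$POOL" "$1" "$2"
  run zpool status "$POOL"   # shows resilver progress
}

move_out
move_in
replace_disk /dev/disk/by-id/OLD-DISK /dev/disk/by-id/NEW-DISK
```

Running `zpool import` with no pool name just lists the pools it can see on the attached disks, which is a safe first step on the new machine. Whether the swap can be done live depends on the controller's hot-swap support, which this sketch does not cover.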

> If data is important to you storing it in 3 places with one of them being off-site is recommended

Yeah wow, 3-2-1 backup rule, buy out warehouses to put additional NAS servers in for redundancy in case your house gets bombed. Yes that's all wonderful advice I already know. Thing is I don't have the resources nor the funds to do a proper 3-2-1 backup that'll be resilient to house fires, so my threat model is limited to hardware failure from excessive use and electric damage. IMO having two separate copies of the same data in the same place, one being run in a live environment and the other only being run for synchronization, is enough of a backup in my case.

I guess I have to point this out whenever asking for advice on English speaking sites to set the right expectations, but I am not American. I do not live in America. The average monthly salary in my country is 4-5 times less than that in America, and my current salary is even less than that. I do not have the privilege of having enough disposable income to overbuy hardware. This is why I'm very deliberate in my calculations of what to buy and how to set up my home lab to the best of my abilities and needs. I really don't want to overspend on hardware, so if I were to go the route of a single external hard drive, I'd have to know what size I should have to be able to fit the capacity of my array on it.

For example, buying four 6TB hard drives for a RAID-Z1 array wouldn't be an easy purchase for me, it's easily over half of the minimum wage in Poland. But even if I were to get four 6TB drives and set three of them up in a ZFS array, I have no idea how ZFS assigns that 18TB of storage for its own use and how much I'll have left to use, and if it's going to be more than the 6TB I was aiming for, that means I've overspent and I need smaller capacity drives for the array to reach that parity. This cost optimization is my big concern, and to me ZFS seems too unpredictable to calculate my purchases properly.

That's probably what I really meant by "dealing with cold backups with ZFS", the odd disparities in how ZFS assigns the storage space and also the type of file system I should use for such a single drive cold backup. I need to know how to calculate it for my backup method to work.

> You're probably gonna run it in some kind of raid for data redunancy so ideally/for convenience you'd want to have them all be the same size (especially if they are hdd). With ZFS you may also like to have an SSD for cashing.

1. The same size concept falls apart with ZFS and my intended method of backup as mentioned previously, it doesn't assign them in the same way RAID 1 would, and I need to know how much space I'll have available before I go out on a shopping spree.

2. The question was more about what I should be really worrying when looking for hard drives for a NAS. The 2TB HDD I'm running right now is a Seagate that's meant for CCTV use. So, how much does shit like the drive designation matter in purchasing choices. Or the brand of the drive in terms of reliability. Or whether or not to mix match the drives to avoid all of them failing at once, and how. Or whether or not to buy them used.

Alternatively I could avoid this entire hassle by not using ZFS and instead opting for a traditional RAID 1 mirror for operational redundancy and an external drive for a backup. Perhaps I don't need ZFS in my use case, and I only want it because everyone else uses it nowadays.

HootersMcBoobies

kiwifarms.net

- Joined

- Jan 7, 2019

> how the fuck do I use Docker CLI properly

> It doesn't. Docker on Proxmox means you're setting up either a LXC or a KVM and install Docker

You can't install Docker in an LXC container. If you want to use Docker, you need to use a KVM (correct me if I'm wrong on this, but I couldn't get it working).

LXC containers are really neat. They're pretty much a full operating system (they start an init process, systemd on most modern distros, or maybe openrc/runit on Gentoo/Alpine/Void), but they're not a full Virtual Machine. They share the Proxmox Kernel. There's almost zero overhead (same in a Docker container). It's just as fast as running it on the host (with a tiny amount of overhead for cgroups/namespaces/filesystem layers/etc.)

A Docker container usually just runs one service (that one service could be supervisord or something that manages multiple little services, but that's generally bad practice).

There is no abstract type of "container" on Linux, and LXC and Docker take different approaches to creating these fancy chroot-on-steroids environments. (Look up chroot and maybe nix to learn more about how these pieces fit together.) At their core, they're a mix of cgroups, namespaces, ulimits, virtual networks, and chroots.

> what the fuck is ZFS and how to set it up to not lose any data

ZFS is pretty damn awesome. Normally if you want to create a /, /home and /var, they're all on different partitions, and if you fill up one, you could still have space on the others. ZFS uses a pool and you can split that pool up. The datasets share the same underlying space. I've normally just used ZFS for NAS drives, but zfsbootmenu makes it more practical for the root filesystem on Linux (with ZFS native full disk encryption! No more LUKS containers!).

I'd get a spare spinny hard drive and play around with ZFS. Copy some of your long term data to it and experiment a bit with snapshots, backups and restoring them. When you get comfortable with it and see how powerful it is, I'll bet you're going to move everything to it. Here's a cheat sheet:

Code:

```shell
# Let's set up a spinny hard drive for ZFS with encryption. I'm going to call him bender:
zpool create -o ashift=12 -O compression=lz4 -o feature@encryption=enabled -O encryption=on -O keyformat=passphrase bender /dev/disk/by-partuuid/881343fe-aaaaa-bbbbb-ccccc-62133342576f

# I'm going to use bender for tv and movies:
zfs create -o mountpoint=/media/tv bender/tv
zfs create -o mountpoint=/media/movies bender/movies

# We have bender now. Say we have another hard drive called fry (same `zpool create` as above).
# Say I just want to back up my movies to fry. We can use snapshots!
zfs snapshot bender/movies@2024-10-27
zfs send bender/movies@2024-10-27 | zfs recv fry/movies

# Oh, you want a progress bar?! Ship it through pv:
zfs send bender/movies@2024-10-27 | pv | zfs recv fry/movies

# But I don't want to send the entire drive each backup! Well, use incremental sends
# (the older snapshot has to exist on fry already):
zfs send -i bender/movies@2022-02-09 bender/movies@2022-09-07 | pv | zfs recv fry/movies

# Okay, I'm done with fry. How the hell do I pull him out of my NAS?
zpool export fry

# Now you can safely pull fry. `zpool import` can be used when you put him back in.
# You might need `zfs load-key fry` if you've rebooted and the encryption key isn't in memory.
```

On Proxmox, it uses ZFS behind the scenes to create slices for each LXC. It's a bit more complicated, but if you learn how a regular storage drive works, then what Proxmox does should just make sense.

- Joined

- Oct 7, 2020

> but I don't know how to calculate ZFS eating up however many TB of those drives for it's magic to not overspend or underbuy

Use a calculator like https://www.raidz-calculator.com/
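The arithmetic behind such a calculator is simple enough to sketch. This assumes equal-size drives and ignores ZFS metadata, padding and the usual advice to keep some free space, so real usable numbers come out somewhat lower. `usable_tb` is a made-up helper name.

```shell
#!/usr/bin/env bash
# usable_tb LAYOUT DRIVES SIZE_TB -> rough usable capacity in TB
# Hypothetical helper; ignores metadata and padding overhead.
usable_tb() {
  local layout="$1" drives="$2" size="$3" parity
  case "$layout" in
    mirror) echo "$size"; return ;;   # every disk holds the same data
    raidz1) parity=1 ;;
    raidz2) parity=2 ;;
    raidz3) parity=3 ;;
    *) echo "unknown layout: $layout" >&2; return 1 ;;
  esac
  if [ "$drives" -le "$parity" ]; then
    echo "need more than $parity drives for $layout" >&2
    return 1
  fi
  # RAID-Z gives up roughly one drive's worth of space per parity level.
  echo $(( (drives - parity) * size ))
}

usable_tb mirror 2 6   # 6
usable_tb raidz1 3 6   # 12
usable_tb raidz1 4 6   # 18
usable_tb raidz2 4 6   # 12
```

So three 6TB drives in RAID-Z1 land at roughly 12TB usable, while a plain two-disk mirror of 6TB drives gives about 6TB, which is the size a single cold-backup drive would need to match.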

- Joined

- Dec 17, 2019

> You can't install Docker in an LXC container.

Actually, you can. I did just that. Create a Debian 12 LXC container, run Docker installation commands as if it was running on bare metal. It just works. And I assume it's the go-to way of setting up Docker under Proxmox. I just feel like I shouldn't have done it via Portainer, too much bloat/overhead for my needs and there are better, lighter ways to easily manage Docker.

> ZFS is pretty damn awesome.

I never thought of running Linux on ZFS, it always seemed like something you set up separately from the OS to create a reliable NAS server pool. That's what I want to use it for, but I don't believe wrapping it around in Proxmox would be a good idea so a separate TrueNAS machine would be the way to go.

> what proxmox does

To be fair, I should lay off all the ZFS topics and start with basics like these. How exactly does Proxmox deal with storage? For example, I'd like to set up a Syncthing LXC to have an always online sync anchor in the network, but also have it sync to a bog standard exFAT USB stick, not to the containerized storage that I have no idea how to work on outside of a running container in case of an emergency.
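One way to get a plain USB stick into a container is a bind mount: the Proxmox host mounts the stick and `pct set` passes the directory through. A hedged sketch follows, where the container ID 103, the device `/dev/sdb1` and both paths are made up, and the `uid=100000,gid=100000` mount options assume an unprivileged container with Proxmox's default ID mapping. It only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Sketch: pass a host-mounted USB stick into an LXC as a bind mount.
# CTID, DEV and both paths are hypothetical; run on the Proxmox host.
CTID="${CTID:-103}"
DEV="${DEV:-/dev/sdb1}"
HOST_DIR="${HOST_DIR:-/mnt/usb-sync}"
CT_DIR="${CT_DIR:-/mnt/sync}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands instead of running them

run() { if [ "$DRY_RUN" = "1" ]; then echo "$*"; else "$@"; fi; }

attach_stick() {
  run mkdir -p "$HOST_DIR"
  # exFAT has no Unix owners, so ownership is set at mount time.
  # 100000 is where root of an unprivileged container lands on the host
  # with the default ID mapping (assumption: defaults were not changed).
  run mount -o uid=100000,gid=100000 "$DEV" "$HOST_DIR"
  # mp0 = first mount point slot; mp= is the path inside the container.
  run pct set "$CTID" -mp0 "$HOST_DIR,mp=$CT_DIR"
}

attach_stick
```

If the service runs as a non-root user inside the container, the host-side ID would be 100000 plus that user's UID. The files stay ordinary exFAT files on the stick, so after unmounting it can be plugged into any other computer.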

Speaking of, this is the main thing I wanted to reach out about by that initial word salad. Any suggestions for useful self-hosted programs in my current setup? Suggestions for programs that rely on NAS storage are also welcome for the future, but for now I'd like to utilize that little Dell a little bit more than just a DNS resolver and a CalDAV server.

> Use a calculator like https://www.raidz-calculator.com/

So in short, each RAID-Z level serves double the space of an individual drive? I still wonder if you should go with RAID-Z though, and if basic RAID 1 ZFS would be more than enough for redundancy and data safety. Again, no idea how ZFS works, and it's probably best I leave learning it for later once I get around to building a NAS.

- Joined

- Mar 30, 2023

I'm running the following on my server:

- Intel Xeon E5-2696 v3 with 18 cores (with hyperthreading)
- 64gb ram (soon to be 128gb)
- Intel Arc A310
- Nvidia Quadro k6000
- 4x 14tb sata drives
- 500gb NVMe os drive
- 1tb NVMe working/download drive
- Ubuntu Server minimal 24.04

with docker images:

- homeassistant
- readarr
- qbittorrent
- romm
- mkvtoolnix
- handbrake
- nzbget
- romm-db
- sonarr
- sonarrtv
- lidarr
- radarr
- jellyfin
- prowlarr
- bazarr
- filebrowser
- metube
- organizr
- yacht
- npm
- nextcloud-aio-mastercontainer (plus sub-containers)
- watchtower

What useful tools would be good to add to it? I'm trying to look into image generation (that may be used for, but won't only be used for, porn) but I have some difficulty understanding all of the different options and how to set it up.

SchloppyI.T.Man

kiwifarms.net

- Joined

- Jul 1, 2024

> that may be used for, but won't only be used for, porn

That's uh pretty gross bro. Pretty sure there's a gooning forum out there for you somewhere.