I doubt there is any actual procedural generation going on at all. Procedural generation means that the objects (outposts, POIs, rocks, anything) would be placed based on artist-defined rules and a random seed. For example: don't place chairs unless they are close to a table, don't place a table unless it's in a room, and so on.
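As a rough illustration of the chair/table rule, here is a minimal Python sketch. Everything in it (the function name `place_furniture`, the room size, the distances) is made up for the example and has nothing to do with any real engine. The point is only that placement is driven by rules plus a seed, so the same seed always gives the same layout.

```python
import math
import random

def place_furniture(seed, room=(0.0, 0.0, 10.0, 8.0), n_tables=2,
                    chairs_per_table=3, max_chair_dist=1.5):
    """Hypothetical rule-driven placement: same seed, same layout."""
    rng = random.Random(seed)  # all randomness flows from this one seed
    x0, y0, x1, y1 = room

    # Rule: a table may only exist inside a room (kept 1 unit off the walls).
    tables = [(rng.uniform(x0 + 1, x1 - 1), rng.uniform(y0 + 1, y1 - 1))
              for _ in range(n_tables)]

    chairs = []
    for tx, ty in tables:
        for _ in range(chairs_per_table):
            # Rule: a chair may only exist close to a table.
            angle = rng.uniform(0.0, 2.0 * math.pi)
            dist = rng.uniform(0.6, max_chair_dist)
            cx = tx + dist * math.cos(angle)
            cy = ty + dist * math.sin(angle)
            # Rule: a chair that would land outside the room is dropped.
            if x0 <= cx <= x1 and y0 <= cy <= y1:
                chairs.append((cx, cy))
    return tables, chairs

tables, chairs = place_furniture(seed=42)
print(len(tables), "tables,", len(chairs), "chairs")
```

A real system would also stop chairs from overlapping each other and would have many more rules, but the structure is the same: rules constrain where things can go, the seed picks one valid result.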

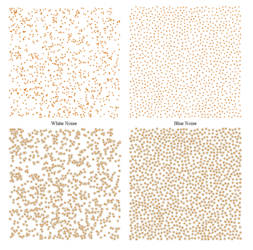

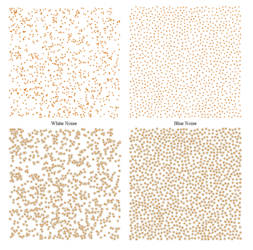

What I've seen from Starfield is that the wilderness cells place objects based on a biome using a blue noise distribution. For those who don't know, blue noise is a type of random noise that spreads samples out evenly, whereas fully random (white) noise ends up with clumps and gaps because each point is placed with no regard for where the others already are.

Blue noise is pretty useful for random things that need uniform coverage. It's used for raytracing sampling for this reason, and I've seen it used for basic forest tree distribution, but it needs a high enough density or else it looks very fake. It seems like Bethesda just used it without any thought, and as a result all the distributed rocks and trees look very artificially arranged because they are low density. That's probably because the engine would throw a fit if it had too many references in a cell.
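For anyone who wants to see the difference between the two kinds of noise, here is a small Python sketch. It uses naive "dart throwing" (Poisson-disk style rejection), which is one common way to approximate blue noise. I am not claiming this is what Starfield or any other engine does. The function names and the `min_dist` value are just assumptions for the example.

```python
import math
import random

def white_noise(n, seed):
    """Fully random points in the unit square: clumps and gaps are expected."""
    rng = random.Random(seed)
    return [(rng.random(), rng.random()) for _ in range(n)]

def dart_throwing(min_dist, seed, max_attempts=5000):
    """Blue-noise-like points: reject any candidate closer than min_dist
    to a point that was already accepted."""
    rng = random.Random(seed)
    points = []
    for _ in range(max_attempts):
        p = (rng.random(), rng.random())
        if all(math.dist(p, q) >= min_dist for q in points):
            points.append(p)
    return points

def min_spacing(points):
    """Smallest distance between any two points (a crude clumping measure)."""
    return min(math.dist(a, b)
               for i, a in enumerate(points) for b in points[i + 1:])

blue = dart_throwing(min_dist=0.05, seed=1)
white = white_noise(len(blue), seed=1)
print("points:", len(blue))
print("closest pair, blue noise: ", min_spacing(blue))
print("closest pair, white noise:", min_spacing(white))
```

The blue noise set never has two points closer than `min_dist`, while the white noise set with the same number of points will almost always have some pairs nearly on top of each other. This brute-force version is slow for large point counts; real tools use grid-accelerated variants.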

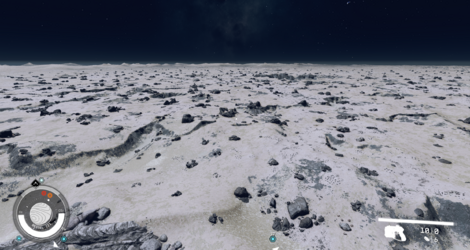

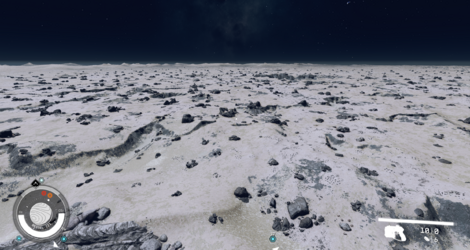

I don't see any procedural rules at play here. You can see a few different assembly types being spread evenly; close up it's OK, but at a distance it becomes very obvious. It seems like the POIs that get distributed are poorly blended onto the heightmap with a basic blur or something, probably one per cell, and that's why they are so far apart.

A proper procedural game would have layers to each POI. For example, Star Citizen generates the layout of a station using artist-defined parameters and hand-placed layouts, then runs another generator on that basic layout to fill in kitbash details based on defined rules and shapes (place doorways in doorways, add supports to overhangs, etc.), then runs another detail pass on top of that, and hand-placed details can still be added if required. Because it's all systems layered non-destructively, they can change the random seed for each layer and get different results while keeping the same underlying level. I think there are videos on the Star Citizen channel that cover this stuff.

This is also a major part of Houdini. Horizon Zero Dawn's entire world was built with artist-driven systems like this, and Death Stranding's by extension. It's a very standard workflow these days: with how detailed games and CGI are, it's not possible for artists to assemble that much by hand. Blender added this sort of workflow with Geometry Nodes a few years ago, Unreal got tools for it in 5.2, and Houdini has been around for years.
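To make the layered, non-destructive idea concrete, here is a toy Python sketch. It is not how Star Citizen, Houdini, or any real tool implements it. The layer names, room fields, and prop list are all invented for the example. Each layer takes the previous layer's output plus its own seed, so you can reroll one layer without touching the ones underneath.

```python
import random

def layout_layer(seed, n_rooms=4):
    """Layer 1 (hypothetical): rough room layout from artist-style parameters."""
    rng = random.Random(seed)
    return [{"room": i, "width": rng.randint(4, 10), "depth": rng.randint(4, 10)}
            for i in range(n_rooms)]

def structure_layer(layout, seed):
    """Layer 2 (hypothetical): rule-based structure on top of the layout."""
    rng = random.Random(seed)
    return [{**room,
             "door_wall": rng.choice("NSEW"),    # rule: every room gets a doorway
             "supports": room["width"] // 4}     # rule: wider rooms get more supports
            for room in layout]

def detail_layer(structure, seed):
    """Layer 3 (hypothetical): clutter pass that never edits the layers below."""
    rng = random.Random(seed)
    props = ["crate", "pipe", "console", "locker"]
    return [{**room, "props": [rng.choice(props) for _ in range(rng.randint(1, 3))]}
            for room in structure]

def generate(layout_seed, structure_seed, detail_seed):
    layout = layout_layer(layout_seed)
    structure = structure_layer(layout, structure_seed)
    return detail_layer(structure, detail_seed)

def without_props(station):
    """Strip the detail layer so the underlying level can be compared."""
    return [{k: v for k, v in room.items() if k != "props"} for room in station]

a = generate(layout_seed=1, structure_seed=2, detail_seed=3)
b = generate(layout_seed=1, structure_seed=2, detail_seed=99)
print(without_props(a) == without_props(b))  # same rooms, doors and supports
```

Changing only `detail_seed` rerolls the clutter while the rooms, doorways and supports stay identical, which is the "different results but same underlying level" property described above.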

Starfield does not do any of this, probably because the engine would throw a fit. Just look at the hacked-together system for the Skyrim Hearthfire houses. The dungeons are also not assembled on a grid the way Fallout 4's settlement pieces are, so they can't reuse the settlement system for it. So they are probably incapable of any of these layered random systems, and instead just drop premade cells onto noise heightmaps and call that procedural generation. This is further supported by the placement of everything being exactly the same between instances of the same POI.

I see a lot of people saying to stop blaming the engine, that Bethesda upgraded it, and that 'gamers don't know how engines work'. But all of this (the lack of procedural generation, the world size limits, the loading cutscenes, the awful space cells, the cell loading in general) comes down to the engine literally not being able to do any of these things, so Bethesda cheats and half-asses everything. The entire game was held back by the engine. So much of what they wanted to do is just impossible, but they will never actually change anything because they are complacent.