
> Saying "people have always complained about optimization" is a cop-out.

They literally have, though.

> Games today look no better than their predecessors a decade ago.

This is because lots of AAA games are still designed for the PS4, which came out a decade ago. The reason Black Ops 6 runs at 100 fps on a low-end card today is that it's a PS4 game at heart. Consequently, low settings run fine on 10-year-old hardware, while ultra settings do quite well even on low-end hardware from 2024. Do you know what the new Indiana Jones and Star Wars games have in common? No PS4 version. The minimum GPU for Indiana Jones is an RX 6600, roughly the same GPU the PS5 has. For Star Wars, it's an RX 5600, which is only a year older than the PS5. This isn't a coincidence.

In other words, it's about time PC gamers upgraded their machines to at least match the consoles. Or join the PlayStation Master Race, I suppose, if you can't afford a current-gen GPU.

> So what you get is the same-looking shit at much worse performance. And things like RTX compound this: the graphical "improvements" do not outweigh the massive performance hit compared to baked lighting.

So put the textures on low/medium and turn off RTX if it doesn't make any difference, instead of complaining that your card can't handle ultra textures and ray tracing.

And it goes beyond graphics too. In the case of Battlefield 2042, it has worse physics, less interaction, less props, worse destruction, etc. than it's predecessors.

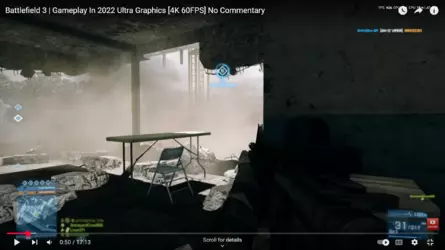

This is Battlefield 3:

Note the low-poly concrete clutter with lighting that doesn't match the interior. Games don't look like this anymore. The game has held up incredibly well over 14 years, which shows diminishing returns in graphics, but asset fidelity has improved a lot in that time.
