Stuff from the AMD Computex keynote:

Desktop CPUs

AMD claims a +16% IPC increase for Ryzen 9000 (Zen 5), launching in July 2024. That figure may be skewed by AVX-512, which could see gigantic uplifts if Zen 5 has a full 512-bit implementation (Zen 4 ran AVX-512 by double-pumping 256-bit units).
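A toy example of how one outlier can drag up a headline average. I'm assuming AMD's number is a geometric mean across workloads (the usual way to average speedups), and the per-workload figures below are made up, not AMD's data:

```python
import math

def geomean(ratios):
    """Geometric mean of per-workload speedup ratios (1.10 means +10%)."""
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))

# Hypothetical IPC ratios, NOT AMD's numbers:
# eight ordinary workloads at +10%, plus one AVX-512-heavy workload at +50%.
ordinary = [1.10] * 8
with_avx512 = ordinary + [1.50]

print(f"ordinary only:    +{(geomean(ordinary) - 1) * 100:.1f}%")
print(f"with AVX-512 one: +{(geomean(with_avx512) - 1) * 100:.1f}%")
```

With these made-up inputs, a single +50% result lifts the average from +10% to roughly +14%.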

Base clocks regressed for the 6-core 9600X (4.7 -> 3.9 GHz), 8-core 9700X (4.5 -> 3.8 GHz), 12-core 9900X (4.7 -> 4.4 GHz), and 16-core 9950X (4.5 -> 4.3 GHz), compared to the 7600X, 7700X, 7900X, and 7950X. There's no 3D V-Cache on these particular CPUs, so it will be interesting to hear AMD's explanation for this. It may not affect real-world performance at all if most people have the cooling needed to hit the higher clocks.

The 9900X drops to 120W TDP / 162W PPT from the 7900X's 170W / 230W, matching the 7900X3D's lower power limits. So it's likely that Zen 5 is going to be very power efficient.
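The power numbers fit AMD's usual desktop rule that PPT (the socket power limit) is 1.35x the rated TDP. That factor is my addition, not something from the keynote, but it reproduces both figures. A sketch of it, with the base clock regressions turned into percentages:

```python
# Assumption: on AMD's desktop sockets, PPT = 1.35 x TDP.
PPT_FACTOR = 1.35

def ppt_from_tdp(tdp_watts):
    """Approximate PPT limit for a given TDP, rounded to the nearest watt."""
    return round(tdp_watts * PPT_FACTOR)

def pct_change(old, new):
    """Percentage change from old to new."""
    return (new - old) / old * 100

# Base clocks in GHz: (Zen 4 part, Zen 5 part)
base_clocks = {
    "7600X -> 9600X": (4.7, 3.9),
    "7700X -> 9700X": (4.5, 3.8),
    "7900X -> 9900X": (4.7, 4.4),
    "7950X -> 9950X": (4.5, 4.3),
}

for pair, (old, new) in base_clocks.items():
    print(f"{pair}: base clock {pct_change(old, new):+.1f}%")

print("9900X PPT:", ppt_from_tdp(120), "W")  # 162 W
print("7900X PPT:", ppt_from_tdp(170), "W")  # 230 W
```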

Turbo clocks went up for the 6-core 9600X (5.3 -> 5.4 GHz) and 8-core 9700X (5.4 -> 5.5 GHz).

AMD is now committed to supporting the AM5 socket through 2027+, and is in fact still launching two "5000XT" CPUs for the older AM4 socket in July. We can infer from this that Zen 6 is definitely coming to AM5.

New X870/X870E motherboards will all supposedly support USB4, PCIe 5.0 for graphics/NVMe, and higher memory clocks. However, they may be lying about this for X870, so take the whole thing with a grain of salt.

Mobile APUs

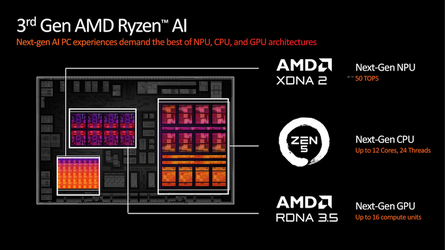

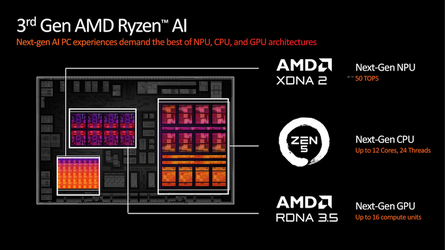

Strix Point mobile APUs are officially announced, with up to 12 cores (4x Zen 5 + 8x Zen 5c), 16 RDNA 3.5 CUs (Radeon 890M), and an XDNA2 NPU rated at 50 TOPS (INT8).

AMD adopted an "AI" naming scheme for the only two Strix Point SKUs they announced: the 12-core Ryzen AI 9 HX 370 and the 10-core Ryzen AI 9 365. The 10-core part disables two of the Zen 5c cores and has 12 CUs (Radeon 880M).

L3 cache is 24 MB, but it's split between the Zen 5 and Zen 5c clusters: the Zen 5 cores get 16 MB and the Zen 5c cores get 8 MB. That shouldn't make much difference compared to Phoenix/Hawk Point, which had 16 MB shared by all cores, but there's maybe an argument to be made about giving the big cores more breathing room. We'll have to see if it makes any difference in testing.
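Dividing the L3 by core count gives the nominal share per core. This is just arithmetic on the split described above; in practice a single busy core can use its whole cluster's L3, so treat it as a rough comparison only:

```python
# (total L3 in MB, cores sharing it)
configs = {
    "Strix Point, Zen 5 cluster": (16, 4),
    "Strix Point, Zen 5c cluster": (8, 8),
    "Phoenix/Hawk Point": (16, 8),
}

def l3_per_core(total_mb, cores):
    """Nominal L3 share per core in MB."""
    return total_mb / cores

for name, (total_mb, cores) in configs.items():
    print(f"{name}: {l3_per_core(total_mb, cores):.1f} MB per core")
```

That works out to 4 MB per Zen 5 core and 1 MB per Zen 5c core, versus 2 MB per core on Phoenix/Hawk Point.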

They have a 15-54W TDP range, indicating that the U and H(S) lineups may now be merged. That might seem like a good idea at first glance, but it could confuse consumers who buy a 15W thin-and-light laptop and expect it to perform like the unchained 54W models.

Lisa Su joked on stage with the Microsoft guy that the XDNA2 NPU is taking up a lot of die space. If this image is drawn to scale, then by my quick estimate the NPU takes up about 59-60% as much die area as the integrated graphics.

"We could have had 24 CUs!" screamed the Gamer, forgetting that the APU is stuck on the usual 128-bit memory bus. You could argue it makes the chip more expensive. Blame Microsoft, they are the company practically forcing AMD, Intel, and Qualcomm to add 40+ TOPS NPUs to everything.

XDNA2 adds support for "Block FP16", which supposedly offers the performance of INT8 with the accuracy of FP16, without quantization. So the NPU delivers the full 50 TOPS with Block FP16 too, not just with INT8.
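My understanding is that "Block FP16" is a form of block floating point: a group of values shares one exponent, and each value stores only a small integer mantissa, so the hardware can mostly reuse INT8-style multiply-add units. AMD didn't detail the format on stage, so the 8-bit mantissa and block size of 8 below are assumptions. This is a generic block floating point sketch, not AMD's implementation:

```python
import math

MANTISSA_BITS = 8  # assumption: signed 8-bit mantissa per element
BLOCK_SIZE = 8     # assumption: hypothetical block size

def encode_block(values):
    """Quantize a block of floats to one shared exponent plus signed integer mantissas."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0:
        return 0, [0] * len(values)
    _, exp = math.frexp(max_abs)             # guarantees max_abs < 2**exp
    shared_exp = exp - (MANTISSA_BITS - 1)   # largest value fits in 7 magnitude bits
    limit = 2 ** (MANTISSA_BITS - 1) - 1     # 127
    scale = 2.0 ** shared_exp
    mantissas = [max(-limit, min(limit, round(v / scale))) for v in values]
    return shared_exp, mantissas

def decode_block(shared_exp, mantissas):
    """Rebuild floats by multiplying each mantissa by the shared power of two."""
    scale = 2.0 ** shared_exp
    return [m * scale for m in mantissas]

def encode(values, block_size=BLOCK_SIZE):
    """Split a vector into blocks; each block gets its own shared exponent."""
    return [encode_block(values[i:i + block_size]) for i in range(0, len(values), block_size)]

def decode(blocks):
    """Concatenate the decoded blocks back into one list."""
    return [x for shared_exp, mantissas in blocks for x in decode_block(shared_exp, mantissas)]

# Usage with made-up weights:
weights = [0.51, -0.02, 0.13, 0.0007, -0.33, 0.25, 0.09, -0.48]
restored = decode(encode(weights))
print(max(abs(a - b) for a, b in zip(weights, restored)))
```

The trade-off shows up in the tiny value: 0.0007 rounds to zero because it has to share an exponent with 0.51, which is why the block size matters for accuracy.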

Just as we saw Hawk Point raise NPU clock speeds to hit 16 TOPS with XDNA1, we can expect different implementations of XDNA2 to have different performance. Strix Halo is now rumored to hit 60-70 TOPS with its NPU. Maybe there will be lower-end chips like Kraken Point that only hit 40-45 TOPS.
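If the NPU keeps the same number of MAC units, peak TOPS scale linearly with clock speed, so these figures can be read as clock ratios. This is a sketch under that assumption (a wider NPU would get there differently), and the 10 TOPS Phoenix baseline is the rated XDNA1 figure, which I'm adding for comparison:

```python
def clock_ratio_needed(base_tops, target_tops):
    """Clock multiplier needed to reach target_tops with an identical MAC array."""
    return target_tops / base_tops

scenarios = [
    ("XDNA1: Phoenix -> Hawk Point", 10, 16),
    ("XDNA2: Strix Point -> rumored Strix Halo (low)", 50, 60),
    ("XDNA2: Strix Point -> rumored Strix Halo (high)", 50, 70),
    ("XDNA2: Strix Point -> speculative Kraken Point (low)", 50, 40),
]

for label, base, target in scenarios:
    print(f"{label}: {clock_ratio_needed(base, target):.2f}x clock")
```

A 1.2-1.4x clock bump would cover the Strix Halo rumor, while a 40 TOPS part would only need to run at 0.8x.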

Other

Turin EPYC CPUs using Zen 5/5c will have up to 192 cores (Zen 5c). AMD showed some comparisons of the 128-core Zen 5 version against Intel. They drop into the same SP5 socket as Genoa.

AMD saw Nvidia switch to an annual cadence for their AI GPU releases, and said "Aw fuck, follow the leader!" So now their roadmap has the Instinct MI325X accelerator this year, which simply adds 50% more memory (from 192 to 288 GB) and slightly faster memory clocks for ~13% more bandwidth.
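Checking the percentages: capacity comes straight from the roadmap, and the bandwidth uses AMD's quoted 5.3 TB/s (MI300X) and 6 TB/s (MI325X), which I'm adding here:

```python
def pct_increase(old, new):
    """Percentage increase from old to new."""
    return (new - old) / old * 100

print(f"HBM capacity:  +{pct_increase(192, 288):.0f}%")  # GB
print(f"HBM bandwidth: +{pct_increase(5.3, 6.0):.1f}%")  # TB/s
```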

The MI350 (coming 2025) will be based on CDNA4, with up to the same 288 GB of HBM3E. AMD claimed a 35x performance uplift over the MI300X, which is certainly some bullshittery exploiting FP4 precision and the larger pool of memory. But from what I hear, these lower-precision data types are becoming perfectly fine for many models, so the speedup could be real in some scenarios.
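One reason low-precision formats inflate headline numbers: every halving of bits doubles how many weights fit in memory, so a big model can fit on fewer GPUs. A weights-only sketch (it ignores KV cache, activations, and runtime overhead, so real limits are lower):

```python
def max_params_billions(memory_gb, bits_per_param):
    """Upper bound on model weights that fit in memory_gb (1 GB = 1e9 bytes)."""
    # memory_gb * 1e9 bytes * 8 bits / bits_per_param, expressed in billions
    return memory_gb * 8 / bits_per_param

cases = [
    ("MI300X, FP16", 192, 16),
    ("MI300X, FP8", 192, 8),
    ("MI350, FP4", 288, 4),
]

for label, memory_gb, bits in cases:
    print(f"{label}: ~{max_params_billions(memory_gb, bits):.0f}B parameters")
```

That's 96B parameters at FP16 on an MI300X versus 576B at FP4 on an MI350, a 6x difference in what fits on one GPU from memory and precision alone.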

AMD also announced a slimmer dual-slot Radeon Pro W7900 aimed at AI inference.

Stable Diffusion 3 (the Medium-sized model) will be available on June 12.